Archive for the ‘ICN’ tag

Data-oriented, Decentralized, Daring: Opportunities and Research Challenges for an Information-Centric Web

Research and development in ICN has led to different communication patterns such as Sync and API implementations such as CNL. It is now time to think about how to leverage Information-Centric principles for providing better foundations for hypermedia applications in the future web. At NDNComm-2024 I talked about how ICN could possibly help, what could be fruitful future research directions, and why web3 and dweb are not the answer.

Material

Towards a Unified Transport Protocol for In-Network Computing in Support of RPC-based Applications

The emerging term In-Network Computing (INC) [inc] refers in particular to applying on-path programmable networking devices (e.g., switches and routers between clients and servers) as accelerators or function offloaders to boost throughput, reduce server load, or improve latency, typically in a well-controlled data center network environment.

Some INC implementations evolved from programmable data plane systems and align with the trend of network programmability at large. In recent years, INC has been shown to support many promising applications (e.g., caching, aggregation, and agreement). For example, in distributed machine learning (DML), training nodes produce data (gradients) that needs to be aggregated or reduced -- and the result could be distributed to one or multiple consumers. As another example, the NetClone system [netclone] uses in-network forwarders to replicate RPC invocation messages and to perform more informed forwarding based on observed latencies for accelerating RPC communication.

While it is possible to achieve this kind of operation purely with end-to-end communication between worker nodes, performance can be dramatically improved by offloading both the operation processing and the data dissemination to nodes in the network. These in-network processors are often conceived as semi-transparent performance enhancing on-path elements, i.e., they are not the actual endpoints in transport protocol sessions and would intercept packets with application data and potentially generate new data that they would have to transmit.
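The difference between the two approaches can be illustrated with a minimal Python sketch. It is not taken from the Internet Draft or from any INC system: `AggregatingSwitch`, `end_to_end`, and `in_network` are hypothetical names, and the model ignores packet loss, fragmentation, and transport-layer security. It only shows that an on-path element that sums gradients forwards one message where the end-to-end case delivers one message per worker.

```python
from typing import Dict, List, Optional, Tuple

Vector = List[float]


def elementwise_sum(vectors: List[Vector]) -> Vector:
    """Sum equally sized vectors position by position."""
    return [sum(values) for values in zip(*vectors)]


class AggregatingSwitch:
    """Hypothetical on-path element: buffers one gradient per worker,
    then emits a single aggregated gradient towards the server."""

    def __init__(self, expected_workers: int) -> None:
        self.expected_workers = expected_workers
        self.pending: Dict[int, Vector] = {}

    def on_packet(self, worker_id: int, gradient: Vector) -> Optional[Vector]:
        self.pending[worker_id] = gradient
        if len(self.pending) < self.expected_workers:
            return None  # still waiting for other workers, nothing forwarded
        result = elementwise_sum(list(self.pending.values()))
        self.pending.clear()
        return result


def end_to_end(gradients: List[Vector]) -> Tuple[Vector, int]:
    """Server receives every gradient itself: one message per worker."""
    return elementwise_sum(gradients), len(gradients)


def in_network(gradients: List[Vector]) -> Tuple[Vector, int]:
    """Switch aggregates on path: the server receives one message."""
    switch = AggregatingSwitch(expected_workers=len(gradients))
    forwarded: List[Vector] = []
    for worker_id, gradient in enumerate(gradients):
        out = switch.on_packet(worker_id, gradient)
        if out is not None:
            forwarded.append(out)
    return forwarded[0], len(forwarded)


if __name__ == "__main__":
    grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(end_to_end(grads))
    print(in_network(grads))
```

Both functions return the same aggregate; only the number of messages arriving at the server differs. Note that the switch has to read and rewrite application data, which is exactly the property that makes such elements hard to reconcile with end-to-end transport sessions.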

In our Internet Draft draft-song-inc-transport-protocol-req-01.txt, we are discussing this problem and are formulating some requirements for the design of future transport protocols in this space.

References

- Collective Communication: Better Network Abstractions for AI

- Computing in the Network – Lessons Learned and New Opportunities

- [I-D.yao-tsvwg-cco-problem-statement-and-usecases] Yao, K., Shiping, X., Li, Y., Huang, H., and D. KUTSCHER, "Collective Communication Optimization: Problem Statement and Use cases", Work in Progress, Internet-Draft, draft-yao-tsvwg-cco-problem-statement-and-usecases-00, 23 October 2023, https://datatracker.ietf.org/doc/html/draft-yao-tsvwg-cco-problem-statement-and-usecases-00.

- [inc] Klenk, B., et al., "An In-Network Architecture for Accelerating Shared-Memory Multiprocessor Collectives", ACM/IEEE 47th Annual International Symposium on Computer Architecture (ISCA), 2020, <https://dx.doi.org/10.1109/ISCA45697.2020.00085>

- [netclone] Kim, G., "NetClone: Fast, Scalable, and Dynamic Request Cloning for Microsecond-Scale RPCs", In Proceedings of the ACM SIGCOMM 2023 Conference (ACM SIGCOMM '23). Association for Computing Machinery, New York, NY, USA, 195-207, 2023 https://dl.acm.org/doi/10.1145/3603269.3604820

Cornerstone: Automating Remote NDN Entity Bootstrapping

We published a paper on automated remote bootstrapping in Named Data Networking (NDN):

Tianyuan Yu, Xinyu Ma, Hongcheng Xie, Dirk Kutscher, Lixia Zhang; Cornerstone: Automating Remote NDN Entity Bootstrapping; Asian Internet Engineering Conference (AINTEC) 2023; December 2023; doi/10.1145/3630590.3630598

Abstract

To secure all communications, Named Data Networking (NDN) requires that each entity joining an NDN network go through a bootstrapping process first, to obtain its initial security credentials. Several solutions have been developed to bootstrap IoT devices in localized environments, where the devices being bootstrapped are within the physical reach of their bootstrapper. However, distributed applications need to bootstrap remote users and devices into an NDN-based system over insecure Internet connectivity. In this work, we take Hydra, a federated distributed file storage system made of servers contributed by multiple participating organizations, as a use case to drive the design and development of a remote bootstrapping solution, dubbed Cornerstone. We describe the design of Cornerstone, evaluate its effectiveness, and discuss the lessons learned from this process.

Rethinking LoRa for the IoT: An Information-centric Approach

We just published our IEEE Communications Magazine article on Rethinking LoRa for the IoT with an Open Access license.

LoRaWAN and the Internet

The Internet of Things (IoT) interconnects numerous sensors and actuators either locally or across the global Internet. From an application perspective, IoT systems are inherently data-oriented: their purpose is often to provide access to named sensor data and control interfaces. From a device and communication perspective, things in the IoT are resource-constrained devices that are commonly powered by a small battery and communicate wirelessly.

LoRaWAN systems today integrate the LoRa physical layer with the LoRaWAN MAC layer and corresponding infrastructure support. Among the IoT radio technologies, LoRa is a versatile and popular candidate since it provides a physical layer that allows for data transmission over multiple kilometers with minimal energy consumption. At the same time, the high LoRa receiver sensitivity enables packet reception in noisy environments, which makes it attractive for industrial deployments. On the downside, LoRa achieves only low data rates requiring long on-air times, and significantly higher latencies compared to radios that are typically used for Internet access.
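To give a feeling for these data rates and on-air times, here is a small Python sketch based on the standard LoRa modulation relations: a symbol lasts 2^SF / BW seconds, and the nominal bit rate is SF · 4/(4+CR) divided by the symbol time. The function names and the example parameters (125 kHz bandwidth, coding rate 4/5, 20-byte payload) are illustrative assumptions and not values from the article. The air-time figure is a lower bound that ignores preamble, header, and CRC.

```python
def lora_symbol_time(sf: int, bw_hz: float) -> float:
    """Duration of one LoRa symbol in seconds: 2^SF / BW."""
    return (2 ** sf) / bw_hz


def lora_bit_rate(sf: int, bw_hz: float, cr: int = 1) -> float:
    """Nominal bit rate in bit/s.

    cr is the coding-rate index 1..4, i.e. a code rate of 4/(4+cr).
    """
    return sf * (4 / (4 + cr)) / lora_symbol_time(sf, bw_hz)


def min_payload_airtime(payload_bytes: int, sf: int, bw_hz: float, cr: int = 1) -> float:
    """Lower bound on payload air time in seconds.

    Ignores preamble, header, and CRC, so real frames take longer.
    """
    return payload_bytes * 8 / lora_bit_rate(sf, bw_hz, cr)


if __name__ == "__main__":
    for sf in (7, 9, 12):
        rate = lora_bit_rate(sf, 125_000)
        airtime = min_payload_airtime(20, sf, 125_000)
        print(f"SF{sf}: {rate:.0f} bit/s, 20-byte payload >= {airtime * 1000:.0f} ms")
```

Each step up in spreading factor doubles the symbol time, which is why the most robust settings also produce the longest on-air times.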

LoRaWAN MAC Layer

The LoRaWAN MAC layer and network architecture that are often used in LoRa deployments provide a vertically integrated sensor data delivery service on top of the LoRa PHY that implements media access and end-to-end network connectivity. Unfortunately, LoRaWAN cannot utilize the LoRa PHY to its best potential with respect to throughput and robustness and is mostly used for upstream-only communication. It is not intended to directly interconnect with the Internet, but relies on a bespoke middlebox architecture consisting of gateways and network servers. Overall, LoRaWAN has the following main problems, as depicted in the figure below.

- Centralization around a network server prevents data sharing between users, across distributed applications, and requires permanent infrastructure backhaul of the wireless access network.

- Uplink-oriented and uncoordinated communication leads to wireless interference. Downlink traffic is rarely available in practice and suffers from scalability issues.

Data-centric Delay-tolerant End-to-End Communication over the Internet

This paper presents an overview of recent advancements to enable data-centric, long-range IoT communication based on LoRa. The proposed network system aims for delay-tolerant, bi-directional communication in the presence of vastly longer latencies and lower bandwidth compared to regular Internet systems – without relying on vertically integrated middlebox-based architectures. The resulting system resolves current LoRaWAN performance issues using two main building blocks: a new network layer based on Information-centric Networking (ICN) and a new MAC layer.

Originally designed for non-constrained wired networks to abandon the end-to-end paradigm and access data only by names instead of IP endpoints, ICN migrated to the constrained wireless IoT over the past years. ICN still lacks a lower layer definition but provides mechanisms that are beneficial for the challenging LoRa domain: Decoupling of content from endpoints separates data access from physical infrastructure. Inherent content caching and replication potentially reduce link load, thus, wireless interference, and it preserves battery resources. The ICN-LoRa system presented in this paper bases its design on IEEE 802.15.4 DSME which was originally designed for low-power personal area networks. This MAC handles media access reliably using time- and frequency multiplexing, and enables reliable bi-directional communication.
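The effect of name-based access and in-network caching on link load can be sketched in a few lines of Python. This is a deliberately reduced model and not the LoRa-ICN implementation: `CachingForwarder`, the `upstream` callable, and the sensor name are hypothetical, and a real ICN forwarder additionally maintains a Pending Interest Table, a forwarding table, cache eviction, and data signatures.

```python
from typing import Callable, Dict


class CachingForwarder:
    """Hypothetical ICN node: answers requests by name, not by endpoint,
    and keeps a copy of every data object it has forwarded."""

    def __init__(self, upstream: Callable[[str], bytes]) -> None:
        self.upstream = upstream            # fetches data from the producer side
        self.content_store: Dict[str, bytes] = {}
        self.upstream_fetches = 0           # proxy for load on the constrained link

    def on_interest(self, name: str) -> bytes:
        if name in self.content_store:
            return self.content_store[name]  # cache hit: radio link stays idle
        data = self.upstream(name)
        self.upstream_fetches += 1
        self.content_store[name] = data
        return data


def sensor_producer(name: str) -> bytes:
    """Stand-in for a battery-powered producer behind a slow wireless link."""
    readings = {"/site/a/node-17/temperature": b"21.5"}
    return readings[name]


if __name__ == "__main__":
    gateway = CachingForwarder(upstream=sensor_producer)
    for _ in range(3):
        print(gateway.on_interest("/site/a/node-17/temperature"))
    print("fetches over the constrained link:", gateway.upstream_fetches)
```

Because consumers ask for a name rather than for a host, any node holding a copy can answer, which is what allows the producer to sleep between requests.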

Synergizing the advantages of LoRa, DSME, and ICN enables delay-tolerant, bi-directional LoRa communication, which enhances many existing IoT applications. Wide area data retrieval and control, such as for solar power stations or smart street lighting systems, are facilitated by the new MAC and its ICN integration. High voltage overhead line monitoring connecting voltage sensors and transformers relies on high data reliability, even under intermittent connectivity or loss. ICN achieves this by employing content caching and replication. Traveling container monitoring (RFC 7744) is challenging due to mobility and interference from metallic surfaces, where LoRa surpasses other radio systems. Decoupling content from its location for mobile containers and an adaptation to long producer delays are naturally contributed by LoRa-ICN.

Results

In our paper, we provide the essential technical background and challenges to design a LoRa-ICN system. We identify the key performance potentials of five protocol variants based on an implementation in RIOT OS and experiments on off-the-shelf IoT devices.

LoRa is an attractive radio technology for the IoT, providing a long wireless transmission range for battery-driven devices. Its versatility is hindered, though, by common deployments with LoRaWAN. We re-visited LoRa in the IoT to provide a serverless, data-oriented communication service. We presented the design of a new media access and network layer that leverages 802.15.4 DSME and Information-centric Networking to allow for reliable LoRa transmissions. To scale to a global Internet (of Things), LoRa-ICN facilitates ubiquitous connectivity of constrained nodes and robust bi-directional communication in the presence of power-saving regimes and high loss rates.

We showed that vastly higher latencies in low-power wireless domains can be addressed by extending the default ICN node behavior at the network edge. Two protocol extensions enable ICN-style data transport between resource-constrained LoRa nodes and a domain-agnostic application on the ICN Internet. The core idea is not limited to LoRa but caters to various delay-prone scenarios. Our experiments based on common IoT hardware and software showed significant performance improvements and further optimization potential compared to Vanilla ICN.

The new LoRa-ICN system paves the way for more versatile LoRa deployments in the IoT that serve additional use cases, mixed sensor-actor topologies, or firmware updates utilizing beacon overloading.

References

This article

- P. Kietzmann, J. Alamos, D. Kutscher, T. C. Schmidt and M. Wählisch, Rethinking LoRa for the IoT: An Information-centric Approach in IEEE Communications Magazine, doi: 10.1109/MCOM.001.2300379.

Reflexive forwarding

The ICN communication mechanisms this work is based on.

- Reflexive Forwarding in NDN

- Oran, D. R. and D. Kutscher; Reflexive Forwarding for CCNx and NDN Protocols; Work in Progress; Internet-Draft draft-oran-icnrg-reflexive-forwarding-05; 26 March 2023

In-depth publications this work is based on

- Peter Kietzmann, José Alamos, Dirk Kutscher, Thomas C. Schmidt, and Matthias Wählisch. 2022. Delay-tolerant ICN and its application to LoRa. In Proceedings of the 9th ACM Conference on Information-Centric Networking (ICN '22). Association for Computing Machinery, New York, NY, USA, 125–136. https://doi.org/10.1145/3517212.3558081

- P. Kietzmann, J. Alamos, D. Kutscher, T. C. Schmidt and M. Wählisch, Long-Range ICN for the IoT: Exploring a LoRa System Design, 2022 IFIP Networking Conference (IFIP Networking), Catania, Italy, 2022, pp. 1-9, doi: 10.23919/IFIPNetworking55013.2022.9829792. https://ieeexplore.ieee.org/document/9829792

- José Álamos, Peter Kietzmann, Thomas C. Schmidt, and Matthias Wählisch. 2022. DSME-LoRa: Seamless Long-range Communication between Arbitrary Nodes in the Constrained IoT. ACM Trans. Sen. Netw. 18, 4, Article 69 (November 2022), 43 pages. https://doi.org/10.1145/3552432

ICNRG @ IETF-118

We have posted the agenda for our ICNRG meeting at IETF-118:

Drafts

- https://datatracker.ietf.org/doc/draft-irtf-icnrg-flic/

- https://datatracker.ietf.org/doc/draft-yao-tsvwg-cco-problem-statement-and-usecases/00/

- https://datatracker.ietf.org/doc/draft-yao-tsvwg-cco-requirement-and-analysis/00/

- https://datatracker.ietf.org/doc/draft-li-icnrg-damc/

Logistics

ICNRG Meeting at IETF-118 – 2023-11-07, 08:30 to 10:30 UTC

Collective Communication: Better Network Abstractions for AI

We have submitted two new Internet Drafts on Collective Communication:

- Kehan Yao, Xu Shiping, Yizhou Li, Hongyi Huang, Dirk Kutscher; Collective Communication Optimization: Problem Statement and Use cases; Internet Draft draft-yao-tsvwg-cco-problem-statement-and-usecases-00; work in progress; October 2023

- Kehan Yao, Xu Shiping, Yizhou Li, Hongyi Huang, Dirk Kutscher; Collective Communication Optimization: Requirement and Analysis; Internet Draft draft-yao-tsvwg-cco-requirement-and-analysis-00; work in progress; October 2023

Collective Communication refers to communication between a group of processes in distributed computing contexts, for example involving interaction types such as broadcast, reduce, all-reduce. This data-oriented communication model is employed by distributed machine learning and other data processing systems, such as stream processing. Current Internet network and transport protocols (and corresponding transport layer security) make it difficult to support these interactions in the network, e.g., for aggregating data on topologically optimal nodes for performance enhancements. These two drafts discuss use cases, problems, and initial ideas for requirements for future system and protocol design for Collective Communication. They will be discussed at IETF-118.
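For readers less familiar with these interaction types, the following Python sketch spells out their semantics for a group of processes, each represented by one list element. It describes only what each operation computes, not how the drafts propose to realize it in the network; the function names are chosen for illustration.

```python
import operator
from functools import reduce as fold
from typing import Callable, List, TypeVar

T = TypeVar("T")


def broadcast(value: T, group_size: int) -> List[T]:
    """One process sends the same value to every process in the group."""
    return [value for _ in range(group_size)]


def reduce_to_root(values: List[T], op: Callable[[T, T], T] = operator.add) -> T:
    """Every process contributes a value; one root obtains the combined result."""
    return fold(op, values)


def all_reduce(values: List[T], op: Callable[[T, T], T] = operator.add) -> List[T]:
    """Every process contributes a value and every process obtains the result.

    Semantically this is a reduce followed by a broadcast.
    """
    return broadcast(reduce_to_root(values, op), len(values))


if __name__ == "__main__":
    contributions = [1, 2, 3, 4]          # one value per process
    print(broadcast("model-v2", 4))
    print(reduce_to_root(contributions))
    print(all_reduce(contributions, max))
```

Where the reduction is actually executed (at the endpoints, or on a topologically well-placed node in the network) is exactly the degree of freedom that current transport protocols make difficult to exploit.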

Network Abstractions for Continuous Innovation

In a joint panel at ACM ICN-2023 and IEEE ICNP-2023 in Reykjavik, Ken Calvert, Jim Kurose, Lixia Zhang, and I discussed future network abstractions. The panel was moderated by Dave Oran. This was one of the more interesting and interactive panel sessions I participated in, so I am providing a summary here.

Since the Internet's initial rollout ~40 years ago, not only has its global connectivity brought fundamental changes to society and daily life, but its protocol suite and implementations have also gone through many iterations of changes, with SDN, NFV, and programmability among other changes over the last decade. This panel looked into the next decade of network research by asking a set of questions about which future directions can enable continued innovation.

Opportunities and Challenges for Future Network Innovations

Lixia Zhang: Rethinking Internet Architecture Fundamentals

Lixia Zhang (UCLA), quoting Einstein, said that the formulation of the problem is often more essential than the solution and pointed at the complexities of today's protocol stacks that are apparently needed to achieve desired functionality. For example, Lixia mentioned RFC 9298 on proxying UDP in HTTP, specifically on tunneling UDP to a server acting as a UDP-specific proxy over HTTP. UDP over IP was once conceived as a minimal message-oriented communication service that was intended for DNS and interactive real-time communication. Due to its push-based communication model, it can be used with minimal effort for useful but also harmful applications, including large-scale DDoS attacks. Proxying UDP over HTTP addresses this and other concerns by providing a secure channel to a server in a web context, so that the server can authorize tunnel endpoints, and so that the UDP communication is congestion controlled by the underlying transport protocol (TCP or QUIC). This specification can be seen as a workaround: sending unsolicited (and unauthenticated) messages is a major problem in today's Internet. There is no general approach for authenticating such messages and no concept for trust in peer identities. Instead of analyzing the root cause of such problems, the Internet communities (and the dominant players in that space) prefer to come up with (highly inefficient) workarounds.

This problem was discussed more generally by Oliver Spatscheck of AT&T Labs in his 2013 article titled Layers of Success, where he discussed the (actually deployed) excessive layering in production networks, for example mobile communication networks, where regular Internet traffic is routinely tunneled over GTP/UDP/IP/MPLS:

The main issue with layering is that layers hide information from each other. We could see this as a benefit, because it reduces the complexities involved in adding more layers, thus reducing the cost of introducing more services. However, hiding information can lead to complex and dynamic layer interactions that hamper the end-to-end system’s reliability and are extremely difficult if not impossible to debug and operate. So, much of the savings achieved when introducing new services is being spent operating them reliably.

According to Lixia, the excessive layering stems from more fundamental problems with today's network architecture, notably the lack of identity and trust in the core Internet protocols and the lack of functionality in the forwarding system – leading to significant problems today as exemplified by recent DDoS attacks. Quoting Einstein again, she said that we cannot solve problems by using the same kind of thinking we used when we created them, calling for a more fundamental redesign based on information-centric networking principles.

Ken Calvert: Domain-specific Networking

Ken Calvert (University of Kentucky) provided a retrospective of networking research and looked at selected papers published at the first IEEE ICNP conference in 1993. According to Ken, the dominant theme at that time was How to design, build, and analyze protocols, for example as discussed in his 1993 ICNP paper titled Beyond layering: modularity considerations for protocol architectures.

Ken offered a set of challenges and opportunities for future networking research, such as:

- Domain-specific networking à la Ex uno pluria, a 2018 CCR editorial discussing:

- infrastructure ossification;

- lack of service innovation; and

- a fragmentation into "ManyNets" that could re-create a service-infrastructure innovation cycle.

- Incentives and "money flow"

- Can we escape from the advertising-driven Internet app ecosystem? Should we?

- Wide-area multicast (many-many) service

- Building block for building distributed applications?

- Inter-AS trust relationships

- Ossification of the Inter-AS interface – cannot be solved by a protocol!

- Impact ⇐ Applications ⇐ Business opportunities ($)

- What user problem cannot be solved today?

- "The core challenge of CS ... is a conceptual one, viz., what (abstract) mechanisms we can conceive without getting lost in the complexities of our own making." - Dijkstra

For his vision for networking in 30 years, Ken suggested that:

- IP addresses will still be in use

- but visible only at interfaces between different owners' infrastructures

- Network infrastructure might consist of access ASes + separate core networks operated by the "Big Five".

- Users might communicate via direct brain interfaces with AI systems.

Dirk Kutscher: Principled Approach to Network Programmability

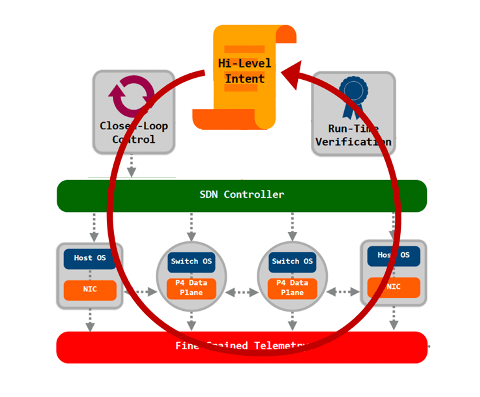

I offered the perspective of introducing a principled approach to programmability that could provide better programmability (for humans and AI), based on more powerful network abstractions.

Previous work in SDN with protocols such as OpenFlow and dataplane programming languages such as P4 have only scratched the surface of what could be possible. OpenFlow was a great first idea, but it was fundamentally constrained by the IP and Ethernet-based abstractions that were built into it. It can be used for programming some applications in that domain, such as firewalls, virtual networking etc., but the idea of continuous innovation has not really materialized.

Similarly, P4 was advertised as an enabler for new levels of dataplane programmability, but even simple systems such as NetCache have to go to considerable lengths to achieve minimal functionality for a proof-of-concept. Another P4 problem that is often reported is hardware heterogeneity, which means that universal programmability is not really possible. In my opinion, this raises some questions with respect to the applicability of current dataplane programming for in-network computing. A good example of a more productive application of P4 is the recent SIGCOMM paper on NetClone that describes fast, scalable, and dynamic request cloning for microsecond-scale RPCs. Here P4 is used as an accelerator for programming relatively simple functionality (protocol parsing, forwarding).

This may not be enough for future universal programmability though. During the panel discussion, I drew an analogy to computer programming languages. We are now seeing the first programming languages and IDEs that are designed from the ground up for better AI. What would that mean for network programmability? What abstractions and APIs would we need?

In my opinion, we would have to take a step back and think about the intended functionality and the required observability for future (automated) network programmability that is really protocol-independent. This would then entail more work on:

- the fundamental forwarding service (informed by hardware constraints);

- the telemetry approach;

- suitable protocol semantics;

- APIs for applications and management; and

- new network emulation & debugging approaches (along the lines of "network digital twin" concepts).

Overall, I am expecting new exciting research in the direction of principled approaches to network programmability.

Jim Kurose: Open Research Infrastructures and Softwarization

Jim reminded us that the key reason Internet research flourished was the availability of open infrastructure with no incumbent providers initially. The infrastructure was owned by researchers, labs, and universities and allowed for a lot of experimentation.

This open infrastructure has recently been challenged by ossification with the rise of production ISP services at scale, and the emergence of closed ISPs, cellular carriers, and hyperscalers operating large portions of the network.

As an example of emerging environments that offer interesting opportunities for experiments and new developments, Jim mentioned 4G/5G private networks, i.e., licensed spectrum created closed ecosystems – but open to researchers, creating opportunities for:

- innovation in private 5G networks such as Citizens Broadband Radio Service (CBRS) that could enable innovation in open, deployed systems and a democratization of 5G+ networks and edge applications;

- testbeds, such as Platforms for Advanced Wireless Research (PAWR); and

- the integration of WiFi, 5G as link-layer edge RANs.

Jim was also suggesting further opportunities in softwarization and programmability, such as (formal) methods for logical correctness and configuration management, as well as programmability to add services beyond the "minimal viable service", such as closed loop automatic control and management.

Finally, Jim also mentioned opportunities in emerging new networks such as LEOs, IoT and home networks.

The Metaverse as an Information-Centric Network

This is an introduction to our paper:

- Dirk Kutscher, Jeff Burke, Giuseppe Fioccola, Paulo Mendes; Statement: The Metaverse as an Information-Centric Network; 10th ACM Conference on Information-Centric Networking (ACM ICN '23); October 9 — 10, 2023, Reykjavik, Iceland; https://dl.acm.org/doi/10.1145/3623565.3623761; pre-print available at http://arxiv.org/abs/2309.09147

The Web Today

The Web today has a specific technical definition: it includes presentation layer technologies, protocols, agreed-upon ways of achieving certain semantics such as Representational State Transfer (REST), and security infrastructure. However, from a user perspective, it can be viewed as a universe of consistently navigable content and (occasionally) interoperable services. The user experience and architectural underpinnings have evolved in parallel and have influenced each other: for many end users, the Web and the network are synonymous. Rather than building up "Metaverse" as an application domain based on IP, we aim to explore "the Metaverse" as strongly intertwined with ICN, just as the modern concept of the Web and its technology stack are inseparable for a broad set of applications.

As a placeholder name for a range of new technologies and experiences, "the Metaverse" is even less well-defined than the Web. We adopt the commonly used concept of a shared, interoperable, and persistent XR. Some descriptions and early prototypes for social AR/VR systems suggest leveraging existing Internet and Web protocols to provide Metaverse services, without addressing the technical complexity and centralization of control required to provide the underlying cloud service infrastructure.

Metaverse as an Information-Centric Concept

Here, we do not take as given current designs and deployment models that consider the Metaverse as an overlay application with corresponding infrastructure dependencies, as this exacerbates the current gaps (and the resulting costs and technical complexity) between distributed applications and the underlying network architecture. Instead, we assume a fundamentally information-centric system in which most applications participate in granular 3D content exchange, context-aware integration with the physical world, and other Metaverse-relevant services.

"The Metaverse" is an information-centric concept that likely will become synonymous with the network itself. We argue that reciprocal design of the network and applications will open new opportunities for the deployment of Metaverse-suggestive experiences even today.

Experientially, this Metaverse is an extension of the Web into immersive XR modalities that are often aligned with physical space, as in augmented reality (AR). We conceive the Metaverse not only as a shared XR environment, but the next generation of the web, extending into 3D interaction/immersion and optionally overlaid on physical spaces. Instead of rendering data objects into a 2D page (within a tab within a window) on a device, we envision such objects being rendered into a shared 3D space, interacting among each other and with end users.

Architecturally, leveraging ICN concepts provides support for decentralized publishing, content interoperability and co-existence, based on general building blocks and not within separated application silos as in today's initial prototypes. We claim that such properties are required to achieve the generally circulated visions of Metaverse systems, but are not achievable today because of the host- and connection-centric way in which the web operates and is presented to users in browsers.

ICN Capabilities

We point out four ICN capabilities critical to Metaverse concepts:

- scalable and robust multi-destination communication, overcoming IP multicast challenges, such as inter-domain routing, scalability, and routing communication overhead;

- leveraging wireless broadcast to support shared local views and low-latency interactivity without application-awareness in edge routers;

- privacy, selective attention, content filtering, and autonomous interactions, as well as ownership and control on the publishing side; and

- supporting in-network processing for object replication and transformation.
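The first capability, multi-destination communication without IP multicast, follows from the way ICN forwarders aggregate requests for the same name. The Python sketch below is a simplified illustration under stated assumptions, not code from the paper: `PitForwarder`, the face labels, and the object name are hypothetical, and real forwarders add timeouts, forwarding strategies, and caching.

```python
from collections import defaultdict
from typing import Dict, List, Set


class PitForwarder:
    """Hypothetical forwarder that records which faces asked for a name
    (the Pending Interest Table) and fans returning data out to all of them."""

    def __init__(self) -> None:
        self.pit: Dict[str, Set[str]] = defaultdict(set)  # name -> requesting faces
        self.sent_upstream: List[str] = []                # Interests actually forwarded

    def on_interest(self, name: str, face: str) -> bool:
        """Register a request; return True if it had to be forwarded upstream."""
        first_request = name not in self.pit
        self.pit[name].add(face)
        if first_request:
            self.sent_upstream.append(name)
        return first_request

    def on_data(self, name: str, payload: bytes) -> Dict[str, bytes]:
        """Deliver one returning data object to every waiting face."""
        faces = self.pit.pop(name, set())   # unsolicited data matches no entry
        return {face: payload for face in sorted(faces)}


if __name__ == "__main__":
    router = PitForwarder()
    name = "/venue/stage-1/avatar/alice/frame/7"   # hypothetical object name
    for face in ("headset-a", "headset-b", "headset-c"):
        router.on_interest(name, face)
    print(router.sent_upstream)            # a single upstream request
    print(router.on_data(name, b"mesh"))   # one copy per requesting face
```

The multicast tree is built implicitly from the requests themselves, so no separate group management or inter-domain multicast routing protocol is involved. Data that nobody asked for matches no table entry and is not forwarded.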

Interactive Holographic Communication

For example, imagine interactive holographic communication consisting of participants' 3D video, spatial audio, and shared 3D documents. In ICN, such an application can represent virtual content as secure data objects and share them efficiently in a larger group of peers, fetching only the data necessary to reconstruct a suitable representation while being aware of the constraints of user devices and access networks.

Furthermore, while experiencing 3D objects shared by the group, each participant may also interact in the same XR environment with personal services such as wayfinding, messaging, and Internet of Things (IoT) device status. Interactions between private and shared 3D objects would be simplified if these objects use similar conventions but with different security. This concept is semantically well-aligned with ICN properties, particularly for security, as it revolves around object-level data exchange rather than hosts or channels. Integration and interoperability within a shared XR environment, without centralization, is challenging if one has to negotiate not only data interactions but also the underlying service connections and security relationship using host-centric paradigms. It also exacerbates the impact of intermittent connectivity on interactivity when the global network is required for functions such as rendezvous -- that are handled locally in ICN.

Creating Shared Environments

As a second example, consider creating a shared environment -- e.g., to pre-visualize engineering models of an aircraft -- from a collection of collaboratively edited 3D documents. Imagine component documents interacting in a simulation. Documents can be modularized, linked, and overlaid in a web-like manner. Today, such cross-platform interoperability and visualization without centralized hubs is impractical, and it is difficult to create secure, granular data flows required for interaction between co-existing 3D elements to "bring them to life" in a virtual world. In an ICN approach, such modules could be independently authored and published, shared between applications, becoming building blocks of a richer, interacting system of user- and machine-generated content.

We introduce some technical challenges and research directions in our paper (link below).

Further Reading

The Metaverse as an Information-Centric Network

- Dirk Kutscher, Jeff Burke, Giuseppe Fioccola, Paulo Mendes; Statement: The Metaverse as an Information-Centric Network; 10th ACM Conference on Information-Centric Networking (ACM ICN '23); October 9 — 10, 2023, Reykjavik, Iceland; https://doi.org/10.1145/3623565.3623712; pre-print available at http://arxiv.org/abs/2309.09147

- Giuseppe Fioccola, Paulo Mendes, Jeff Burke, Dirk Kutscher; Information-Centric Metaverse; Internet Draft draft-fmbk-icnrg-metaverse-01; Work in Progress; July 2023

- Jeff Burke, Lixia Zhang, Dirk Kutscher; Named Data Microverse project

- Dirk Kutscher, Jeff Burke, Paulo Mendes, Michelle Munson, Todd Hodes; Named Data Metaverse Panel at NDNComm-2023

- Dirk Kutscher, Lixia Zhang, Jeff Burke, Dave Oran; IEEE MetaCom Workshop on Decentralized, Data-Oriented Networking for the Metaverse (DORM); IEEE Metacom-2023

- Dirk Kutscher, Dave Oran; Statement: RESTful Information-Centric Networking; ACM Conference on Information-Centric Networking (ICN 2022); Osaka, Japan; September 2022; https://dirk-kutscher.info/publications/icn-rest/

References

- Cheng, R., Wu, N., Varvello, M., Chen, S., and Han, B; Are we ready for metaverse?: a measurement study of social virtual reality platforms; In Proceedings of the 22nd ACM Internet Measurement Conference, IMC 2022, Nice, France; October 25-27, 2022 (2022); https://dl.acm.org/doi/10.1145/3517745.3561417

- Erickson, L; Interoperability in the immersive web – part 1; https://hubs.mozilla.com/labs/interoperability-in-the-immersive-web/, Feb 2023.

- Fielding, R. T.; Architectural Styles and the Design of Network-based Software Architectures; PhD thesis, University of California, Irvine, 2000. http://www.ics.uci.edu/fielding/pubs/dissertation/top.htm

- Gruessing, J., and Dawkins, S; Media over quic - use cases and requirements for media transport protocol design; Internet-Draft https://datatracker.ietf.org/doc/draft-ietf-moq-requirements/, version 01; IETF Secretariat, July 2023.

- Jennings, C. F., Nandakumar, S., and Huitema, C. Quicr – media delivery protocol over quic. Internet-Draft https://datatracker.ietf.org/doc/draft-jennings-moq-quicr-proto/, version 01, IETF Secretariat, January 2023.

- LAMINA1. Decentralized system services for the open metaverse; https://uploads-ssl.webflow.com/63fe332d7b9ae4159d741e55/64499d8f08bd5bdd1fe6bce1_MaaS_Whitepaper_v1.0.pdf

- Moll, P., Patil, V., Wang, L., and Zhang, L.; The evolution of distributed dataset synchronization solutions in NDN: sok; In 9th ACM Conference on Information-Centric Networking; ICN 2022; Osaka Japan; September 19-21, 2022 (2022); https://dl.acm.org/doi/10.1145/3517212.3558092

- Moore, M. B. T.; How we ruined the internet; CoRR abs/2306.01101 (2023); https://arxiv.org/abs/2306.01101

- NVIDIA. What is universal scene description; https://developer.nvidia.com/usd.

- Oran, D. R.; Considerations in the Development of a QoS Architecture for CCNx-Like Information-Centric Networking Protocols; RFC 9064; June 2021; https://datatracker.ietf.org/doc/rfc9064/

- Patil, V., Desai, H., and Zhang, L; Kua: A distributed object store over named data networking; In Conference on Information-Centric Networking, ICN 2022, Osaka Japan, September 19-21, 2022 (2022); https://dl.acm.org/doi/10.1145/3517212.3558083

- Radoff, J.; Metaverse interoperability, part 1: Challenges. https://medium.com/building-the-metaverse/metaverse-interoperabilitypart-1-challenges-716455ca439e, Apr 2022.

- Khronos Group; glTF runtime 3d asset delivery; https://www.khronos.org/gltf/

- Yu, Y., Afanasyev, A., Clark, D., claffy, k., Jacobson, V., and Zhang, L.; Schematizing trust in named data networking; In Proceedings of the 2nd ACM Conference on Information-Centric Networking (New York, NY, USA, 2015), ACMICN ’15, Association for Computing Machinery; https://dl.acm.org/doi/10.1145/2810156.2810170

Distributed Computing in Information-Centric Networking

This is an introduction to our paper:

- Wei Geng, Yulong Zhang, Dirk Kutscher, Abhishek Kumar, Sasu Tarkoma, Pan Hui; SoK: Distributed Computing in ICN; 10th ACM Conference on Information-Centric Networking (ACM ICN '23); October 9-10, 2023, Reykjavik, Iceland; https://doi.org/10.1145/3623565.3623712; pre-print available at https://arxiv.org/abs/2309.08973.

Distributed computing is the basis for all relevant applications on the Internet. Based on well-established principles, different mechanisms, implementations, and applications have been developed that form the foundation of the modern Web.

The Internet with its stateless forwarding service and end-to-end communication model promotes certain types of communication for distributed computing. For example, IP addresses and/or DNS names provide different means for identifying computing components. Reliable transport protocols (e.g., TCP, QUIC) promote interconnecting modules. Communication patterns such as REST and protocol implementations such as HTTP enable certain types of distributed computing interactions, and security frameworks such as TLS and the web PKI constrain the use of public-key cryptography for different security functions.

From Distributed Computing...

Distributed computing has different facets, for example, client-server computing, web services, stream processing, distributed consensus systems, and Turing-complete distributed computing platforms. There are also different perspectives on how distributed computing should be implemented on servers and network platforms, a research area that we refer to as Computing in the Network. Active Networking, one of the earliest works on computing in the network, intended to inject programmability and customization of data packets in the network itself; however, security and complexity considerations proved to be major limiting factors, preventing its wider deployment.

Dataplane programmability refers to the ability to program behavior, including application logic, on network elements and SmartNICs, thus enabling some form of in-network computing. Alternatively, different types of server platforms and light-weight execution environments are enabling other forms of distributing computation in networked systems, for example through architectural patterns such as edge computing.

... To Computing in the Network

With currently available Internet technologies, we can observe a relatively succinct layering of networking and distributed computing, i.e., distributed computing is typically implemented in overlays, with Content Distribution Networks (CDNs) being a prominent and ubiquitous example. Recently, there has been growing interest in revisiting this relationship, for example by the IRTF Computing in the Network Research Group (COINRG) – motivated by advances in network and server platforms, e.g., through the development of programmable data plane platforms and the development of different types of distributed computing frameworks, e.g., stream processing and microservice frameworks.

This is also motivated by the recent development of new distributed computing applications such as distributed machine learning (ML). Emerging applications such as the Metaverse suggest new levels of scale in terms of data volume for distributed computing and of the pervasiveness of distributed computing tasks in such systems. There are two research questions that stem from these developments:

- How can we build distributed computing systems in the network that can leverage the on-path location of compute functions, e.g., optimally aligning stream processing topologies with networked computing platform topologies?

- How can the network support distributed computing in general, so that the design and operation of such systems can be simplified, but also so that different optimizations can be achieved to improve performance and robustness?

Issues in Legacy Distributed Computing

Although there are many distributed computing applications, they are subject to notable limitations and performance issues. Factors such as network latency, data skew, checkpoint overhead, back pressure, garbage collection overhead, memory management, and serialization and deserialization overhead can all influence efficiency. Various optimization techniques can be applied to alleviate these issues, including memory tuning, refining the checkpointing process, and adopting efficient data structures and algorithms.

Some performance problems and complexity issues stem from the overlay nature of current systems and their way of achieving the above-mentioned mechanisms with temporary solutions based on TCP/IP and associated protocols such as DNS. For example, Network Service Mesh has been characterized as architecturally complex because of the so-called sidecar approaches and their implementation problems.

In systems that are layered on top of HTTP or TCP (or QUIC), compute nodes typically cannot assess the network performance directly – only indirectly through observed throughput and buffer under-runs. Information-centric data-flow systems, such as IceFlow, intend to provide better visibility and thus better joint optimization potential by more direct access to data-oriented communication resources. Then, some coordination tasks that are based on exchanging updates of shared application state can be elegantly mapped to named data publication in a hierarchical namespace, as the different dataset synchronization (Sync) protocols in NDN demonstrated.
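
The idea of mapping shared-state updates to named data publication can be illustrated with a small sketch. It loosely follows the state-vector style used by some NDN Sync protocols: each participant publishes data under its own prefix with a sequence number, and comparing two vectors yields the names of data that is still missing. The prefix layout and the function names are hypothetical and not taken from any particular Sync implementation.

```python
def missing_names(local, remote):
    """Return the names a node still has to fetch, given two state vectors.

    A state vector maps a producer prefix to the highest sequence number seen.
    """
    names = []
    for prefix, remote_seq in sorted(remote.items()):
        local_seq = local.get(prefix, 0)
        for seq in range(local_seq + 1, remote_seq + 1):
            names.append(f"{prefix}/{seq}")
    return names


def merge(local, remote):
    """Merge two state vectors by taking the per-producer maximum."""
    merged = dict(local)
    for prefix, seq in remote.items():
        merged[prefix] = max(merged.get(prefix, 0), seq)
    return merged


local = {"/app/alice": 3, "/app/bob": 5}
remote = {"/app/alice": 5, "/app/bob": 4, "/app/carol": 1}
print(missing_names(local, remote))
print(merge(local, remote))
```

Because the missing items are plain names, fetching them is ordinary data retrieval, with no connection to the original publisher required.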

Information-Centric Distributed Computing

In our paper on Distributed Computing in ICN at ACM ICN-2023, we focus on distributed computing and on how information-centricity in the network and application layer can support the development and operation of such systems. The rich set of distributed computing systems in ICN suggests that ICN provides some benefits for distributed computing that could offer advantages such as better performance, security, and productivity when building corresponding applications.

ICN with its data-oriented operation and generally more powerful forwarding layer provides an attractive platform for distributed computing. Several different distributed computing protocols and systems have been proposed for ICN, with different feature sets and different technical approaches, including Remote Method Invocation (RMI) as an interaction model as well as more comprehensive distributed computing platforms. RMI systems such as RICE leverage the fundamental name-based forwarding service in ICN systems and map requests to Interest messages and method names to content names (although the actual implementation is more intricate). Method parameters and results are also represented as content objects, which provides an elegant platform for such interactions.
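
As a rough illustration of mapping method names to content names, the following sketch builds a name for an invocation. It is a deliberate simplification and not the RICE naming scheme: the name layout, the `params-sha256=` component, and the function `invocation_name` are assumptions made for this example. Here the parameters are only summarized by a digest in the name, so that identical invocations yield the same name.

```python
import hashlib
import json


def invocation_name(service_prefix, method, params):
    """Build a hypothetical content name for a remote method invocation.

    The parameters are serialized deterministically and hashed, so identical
    invocations map to the same name (and could share a cached result).
    """
    encoded = json.dumps(params, sort_keys=True, separators=(",", ":")).encode()
    digest = hashlib.sha256(encoded).hexdigest()[:16]
    return f"{service_prefix}/{method}/params-sha256={digest}"


name_a = invocation_name("/example/imaging", "resize", {"width": 640, "height": 480})
name_b = invocation_name("/example/imaging", "resize", {"height": 480, "width": 640})
name_c = invocation_name("/example/imaging", "resize", {"height": 481, "width": 640})
print(name_a)
print(name_a == name_b)  # same parameters in a different order
print(name_a == name_c)  # different parameters
```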

ICN generally attempts to provide a more useful service to data-oriented applications but can also be leveraged to support distributed computing specifically.

Names

Accessing named data in the network as a native service can remove the need for mapping application logic identifiers such as function names to network and process identifiers (IP addresses, port numbers), thus simplifying implementation and run-time operation, as demonstrated by systems such as Named Function Networking (NFN), RICE, and IceFlow. It is worth noting that, although ICN does not generally require an explicit mapping of names to other domain identifiers, such networks require suitable forwarding state, e.g., obtained from configuration, dynamic learning, or routing.
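
The forwarding state mentioned above can be pictured as a table of name prefixes that is searched by longest-prefix match on name components. The sketch below is a minimal illustration with made-up prefixes and face identifiers; real forwarders use more efficient data structures and richer per-prefix state.

```python
def split_name(name):
    """Split a hierarchical name such as '/a/b/c' into its components."""
    return tuple(c for c in name.split("/") if c)


def lookup(fib, name):
    """Return the next hops of the longest FIB prefix matching the name, or None."""
    components = split_name(name)
    for length in range(len(components), -1, -1):
        hops = fib.get(components[:length])
        if hops is not None:
            return hops
    return None


# Hypothetical forwarding table: prefix (as component tuple) -> next-hop faces
fib = {
    split_name("/example"): ["face1"],
    split_name("/example/compute"): ["face2", "face3"],
}
print(lookup(fib, "/example/compute/resize/v1"))
print(lookup(fib, "/example/data/file"))
print(lookup(fib, "/other"))
```

An application only uses the function or data name; which face the request leaves on is decided by this table, not by the application.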

Data-orientedness

ICN's notion of immutable data with strong name-content binding through cryptographic signatures and hashes seems to be conducive to many distributed computing scenarios, as both static data objects and dynamic computation data in those systems, such as input parameters and result values, can be directly sent as ICN data objects. NFN first demonstrated this.
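
The hash-based form of name-content binding can be sketched in a few lines. The name layout with a trailing `sha256=` component is an assumption for illustration only; signature-based binding, which ICN protocols also use, is not shown.

```python
import hashlib


def hash_name(prefix, content):
    """Derive a name that ends in the SHA-256 digest of the content."""
    return f"{prefix}/sha256={hashlib.sha256(content).hexdigest()}"


def verify(name, content):
    """Check that the content matches the digest carried in the name."""
    expected = name.rsplit("sha256=", 1)[-1]
    return hashlib.sha256(content).hexdigest() == expected


result = b'{"sum": 42}'
name = hash_name("/example/compute/add/result", result)
print(verify(name, result))          # matching content
print(verify(name, b'{"sum": 43}'))  # tampered content
```

A consumer can run such a check on a computation result no matter which node delivered it.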

Securing distributed computing could be supported better insofar as ICN does not require additional dependencies on public-key or pipe securing infrastructure, as keys and certificates are simply named data objects and centralized trust anchors are not necessarily needed. Larger data collections can be aggregated and re-purposed by manifests (FLIC), enabling "small" and "big data" computing in one single framework that is congruent to the packet-level communication in a network. IceFlow uses such an aggregation approach to share identical stream processing result objects in multiple consumer contexts.
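
The manifest idea can be sketched as an ordered list of digests that point to individually retrievable chunks. This is not the FLIC encoding; the flat list, the in-memory `store`, and the function names are simplifying assumptions for this example.

```python
import hashlib


def build_manifest(data, chunk_size):
    """Split data into chunks and return (manifest, store).

    The manifest is an ordered list of chunk digests; the store maps each
    digest to its chunk, standing in for caches or repos in the network.
    """
    store = {}
    manifest = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        store[digest] = chunk
        manifest.append(digest)
    return manifest, store


def reassemble(manifest, store):
    """Fetch every chunk named in the manifest, verify it, and concatenate."""
    parts = []
    for digest in manifest:
        chunk = store[digest]
        if hashlib.sha256(chunk).hexdigest() != digest:
            raise ValueError("chunk does not match its digest")
        parts.append(chunk)
    return b"".join(parts)


data = b"abcd" * 3 + b"xy"
manifest, store = build_manifest(data, chunk_size=4)
print(len(manifest), len(store))  # four pointers, but identical chunks are stored once
print(reassemble(manifest, store) == data)
```

Identical chunks appear several times in the manifest but only once in the store, which is the kind of sharing that the aggregation approach relies on.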

Data-orientedness eliminates the need for connections; even reliable communication in ICN is completely data-oriented. If higher-layer (distributed computing) transactions can be mapped to the network layer data retrieval, then server complexity can be reduced (no need to maintain several connections), and consumers get direct visibility into network performance. This can enable performance optimizations, such as linking network and computing flow control loops (one realization of joint optimization), as shown by IceFlow.

Location independence and data sharing

Embracing the principle of accessing named and authenticated data also enables location independence, i.e., corresponding data can be obtained from any place in the network, such as replication points (repos) and caches. This fundamentally enables better multi-source/path capabilities as well as data sharing, i.e., multiple data retrieval operations for one named data object by different consumers can potentially be completed by a cache, repo, or peer in the network.
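
A minimal sketch of this data sharing effect: a node that keeps a content store answers repeated requests for the same name locally, so the producer is contacted only once. The class and the names are hypothetical, and authentication of the returned data is omitted for brevity.

```python
class CachingNode:
    """A node that answers from its content store before asking upstream."""

    def __init__(self, upstream):
        self.upstream = upstream      # callable: name -> bytes
        self.content_store = {}
        self.upstream_requests = 0

    def get(self, name):
        """Return the data for a name, fetching it upstream only on a miss."""
        if name not in self.content_store:
            self.upstream_requests += 1
            self.content_store[name] = self.upstream(name)
        return self.content_store[name]


def producer(name):
    """Stand-in for the original data source."""
    return f"payload for {name}".encode()


node = CachingNode(producer)
for consumer in range(3):
    node.get("/example/model/part-1")
print(node.upstream_requests)
```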

Stateful Forwarding

ICN provides stateful, symmetric forwarding, which enables general performance optimizations such as in-network retransmissions, more control over multipath forwarding, and load balancing. This concept could be extended to support distributed computing specifically, for example, if load balancing is performed based on RTT observations for idempotent remote-method invocations.
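
The RTT-based load balancing mentioned above could look roughly like the following sketch, in which a forwarder keeps a smoothed RTT per next hop and prefers the fastest one. The class name, the smoothing factor, and the worker names are assumptions; actual forwarding strategies are more elaborate, for example with periodic probing of alternative paths.

```python
class RttForwarder:
    """Choose among next hops by smoothed RTT (an EWMA), lowest first."""

    def __init__(self, faces, alpha=0.125):
        self.alpha = alpha
        self.srtt = {face: None for face in faces}

    def record(self, face, rtt_ms):
        """Update the smoothed RTT of a face with a new measurement."""
        old = self.srtt[face]
        if old is None:
            self.srtt[face] = rtt_ms
        else:
            self.srtt[face] = (1 - self.alpha) * old + self.alpha * rtt_ms

    def choose(self):
        """Probe unmeasured faces first, otherwise pick the lowest smoothed RTT."""
        for face, srtt in self.srtt.items():
            if srtt is None:
                return face
        return min(self.srtt, key=self.srtt.get)


fwd = RttForwarder(["worker-a", "worker-b"])
fwd.record("worker-a", 40.0)
fwd.record("worker-b", 10.0)
first_choice = fwd.choose()
for _ in range(30):
    fwd.record("worker-b", 200.0)  # worker-b becomes slow
second_choice = fwd.choose()
print(first_choice, second_choice)
```

Redirecting requests this way is only safe when invocations are idempotent, as the text notes, because a request may end up being executed by more than one worker.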

More Networking, less Management

The combination of data-oriented, connection-less operation and stateful (more powerful) forwarding in ICN shifts functionality from management and orchestration layers (back) to the network layer. This can reduce complexity, an effect that can be especially pronounced in distributed computing. For example, legacy stream processing and service mesh platforms typically must manage connectivity between deployment units (pods in Kubernetes). In Apache Flink, a central orchestrator manages the connections between task managers (node agents). Systems such as IceFlow have demonstrated a more self-organized and decentralized stream-processing approach, and the presented principles are applicable to other forms of distributed computing.

In summary, we can observe that ICN's general approach of having the network providing a more natural (data retrieval) platform for applications benefits distributed computing in similar ways as it benefits other applications. One particularly promising approach is the elimination of layer barriers, which enables certain optimizations.

In addition to NFN, there are other approaches that jointly optimize the utilization of network and computing resources to provide network service mesh-like platforms, such as edge intelligence using federated learning, advanced CDNs where nodes can dynamically adapt to user demands according to content popularity, such as iCDN and OpenCDN, and general computing systems, such as Compute-First Networking, IceFlow, and ICedge.

Our paper on Distributed Computing in ICN at ACM ICN-2023 provides a comprehensive analysis and understanding of distributed computing systems in ICN, based on a survey of more than 50 papers. Naturally, these different efforts cannot be directly compared due to their difference in nature. We categorized different ICN distributed computing systems and individual approaches, and highlighted their specific properties.

The scope of this study is technologies for ICN-enabled distributed computing. Specifically, we divide the different approaches into four categories, as shown in the figure above: enablers, protocols, orchestration, and applications. The contributions of this study are as follows:

- A discussion of the benefits and challenges of distributed computing in ICN.

- A categorization of different proposed distributed computing systems in ICN.

- A discussion of lessons learned from these systems.

- A discussion of existing challenges and promising directions for future work.

Recent Research on Distributed Computing in ICN

I am providing some pointers to my previous research on distributed computing in ICN below.

The paper that has led to this article:

- Wei Geng, Yulong Zhang, Dirk Kutscher, Abhishek Kumar, Sasu Tarkoma, Pan Hui; SoK: Distributed Computing in ICN; 10th ACM Conference on Information-Centric Networking (ACM ICN '23); October 9-10, 2023, Reykjavik, Iceland; https://doi.org/10.1145/3623565.3623712; pre-print available at https://arxiv.org/abs/2309.08973.

Current work in the Computing in the Network Research Group of the IRTF:

- Dirk Kutscher, Teemu Kärkkäinen, Jörg Ott; Directions for Computing in the Network; Internet Draft draft-irtf-coinrg-dir-00, Work in Progress; August 2023

Reflexive Forwarding and Remote Method Invocation

Providing a unified remote computation capability in ICN presents some unique challenges, among which are timer management, client authorization, and binding to state held by servers, while maintaining the advantages of ICN protocol designs like CCN and NDN. In the RICE work, we developed a unified approach to remote function invocation in ICN that exploits the attractive ICN properties of name-based routing, receiver-driven flow and congestion control, flow balance, and object-oriented security while presenting a natural programming model to the application developer. The RICE protocol leverages an ICN extension called Reflexive Forwarding that provides ICN-idiomatic method parameter transmission.

- RICE: Remote Method Invocation in ICN (best paper award at ACM ICN-2018)

- Reflexive Forwarding in ICN

Distributed Computing Frameworks

Leveraging RICE as a mechanism, we have developed Compute-First Networking (CFN) in ICN, a Turing-complete distributed computing platform. IceFlow is a proposal for Dataflow in ICN in a decentralized manner.

- Compute-First Networking (CFN): Distributed Computing Meets ICN

- IceFlow: Information-Centric Dataflow: Re-Imagining Reactive Distributed Computing

Applications

Based on Reflexive Forwarding, we have developed a concept for RESTful ICN that leverages CCNx key exchange for setting up security contexts and keys that could then be used for secure, data-oriented REST-like communication.

Delay-Tolerant LoRa leveraged Reflexive Forwarding to enable constrained LoRa nodes to "phone home" when they want to transmit data, thus enabling new ways (without central network and application servers) for connecting LoRa networks to the Internet.

Reflexive Forwarding in Named Data Networking

Current Information-Centric Networking protocols such as CCNx and NDN have a wide range of useful applications in content retrieval and other scenarios that depend only on a robust two-way exchange in the form of a request and response (represented by an Interest-Data exchange in the case of the two protocols noted above). A number of important applications, however, require placing large amounts of data in the Interest message, and/or more than one two-way handshake.

While these can be accomplished using independent Interest-Data exchanges by reversing the roles of consumer and producer, such approaches can be both clumsy for applications and problematic from a state management, congestion control, or security standpoint. Reflexive Forwarding is a proposed extension to the CCNx and NDN protocol architectures that eliminates the problems inherent in using independent Interest-Data exchanges for such applications.
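
To make the general idea more concrete, here is a heavily simplified, single-process sketch. It assumes that the consumer announces a temporary reflexive name prefix with its Interest, that the forwarder keeps state for the duration of the exchange, and that the producer uses this prefix to fetch parameters from the consumer along the reverse path before answering. All class names, the prefix `/rnp-7f3a`, and the message handling are illustrative assumptions; the sketch does not follow the packet formats or processing rules of the specification.

```python
class Consumer:
    """Holds the invocation parameters and answers reflexive Interests for them."""

    def __init__(self, reflexive_prefix, params):
        self.reflexive_prefix = reflexive_prefix  # would be chosen fresh per exchange
        self.params = params                      # name suffix -> bytes

    def on_reflexive_interest(self, name):
        suffix = name[len(self.reflexive_prefix):]
        return self.params[suffix]


class Forwarder:
    """Keeps per-exchange state so reflexive Interests can follow the reverse path."""

    def __init__(self, producer):
        self.producer = producer
        self.pending = {}  # reflexive prefix -> consumer (stands in for PIT state)

    def express_interest(self, consumer, name):
        prefix = consumer.reflexive_prefix
        self.pending[prefix] = consumer
        try:
            return self.producer.on_interest(name, prefix, self.reflexive_interest)
        finally:
            del self.pending[prefix]  # state lives only as long as the exchange

    def reflexive_interest(self, name):
        for prefix, consumer in self.pending.items():
            if name.startswith(prefix + "/"):
                return consumer.on_reflexive_interest(name)
        raise LookupError(f"no pending exchange for {name}")


class AddProducer:
    """Fetches two operands from the consumer, then answers the original Interest."""

    def on_interest(self, name, reflexive_prefix, fetch):
        a = int(fetch(reflexive_prefix + "/a").decode())
        b = int(fetch(reflexive_prefix + "/b").decode())
        return str(a + b).encode()


consumer = Consumer("/rnp-7f3a", {"/a": b"40", "/b": b"2"})
forwarder = Forwarder(AddProducer())
answer = forwarder.express_interest(consumer, "/example/calc/add")
print(answer)
print(forwarder.pending)  # no state left after the exchange
```

In this sketch the consumer never needs a globally routable name: the reflexive Interests are steered purely by the state that the original Interest left behind.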

The protocol is specified in draft-oran-icnrg-reflexive-forwarding and has been used in a few of our research projects such as:

- RICE: Remote Method Invocation in ICN (best paper award at ACM ICN-2018)

- Compute-First Networking (CFN): Distributed Computing Meets ICN

- RESTful ICN

- Delay-Tolerant LoRa ICN Networking

My student intern Xinchen Jin from ShanghaiTech has implemented the Reflexive Forwarding specification in NDN (with modifications to ndn-cxx and NFD) and set up a testbed in mini-NDN for experiments over multiple forwarders.