Archive for the ‘Publications’ Category

Data-oriented, Decentralized, Daring: Opportunities and Research Challenges for an Information-Centric Web

Research and development in ICN has led to different communication patterns such as Sync and API implementations such as CNL. It is now time to think about how to leverage Information-Centric principles for providing better foundations for hypermedia applications in the future web. At NDNComm-2024 I talked about how ICN could possibly help, what could be fruitful future research directions, and why web3 and dweb are not the answer.

Material

Content Retrieval on the Decentralised Web

Trends and Emerging Technologies for Content Retrieval on the Decentralized Web

The control, governance, and management of the web have become increasingly centralised, resulting in security, privacy, and censorship concerns. Decentralised initiatives have emerged to address these issues, beginning with decentralised file systems. These systems have gained popularity, with major platforms serving millions of content requests daily. Complementing the file systems are decentralised search engines and name registry infrastructures, together forming the basis of a decentralised web. We have published a survey paper that analyses research trends and emerging technologies for content retrieval on the decentralised web, encompassing both academic literature and industrial projects.

Challenges

Several challenges hinder the realisation of a fully decentralised web. A key challenge is achieving performance comparable to centralised systems without compromising decentralisation. Hybrid infrastructures, which blend centralised components with verifiability mechanisms, show promise for improving decentralised initiatives. While decentralised file systems have seen more mature deployments, they still face challenges such as usability, performance, privacy, and content moderation. Integrating these systems with decentralised name registries offers the potential for improved usability, with human-readable and persistent names for content. Further research is needed to address security concerns in decentralised name registries and to enhance governance and crypto-economic incentive mechanisms.
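Content addressing is one way to combine a centralised component with a verifiability mechanism. If a content name is derived from a hash of the content, a client can fetch data from any untrusted gateway and check it locally. The following is a minimal sketch under simplifying assumptions. The name is a plain SHA-256 hex digest, and `UntrustedGateway` and `verified_fetch` are hypothetical helpers. Real decentralised file systems such as IPFS use richer self-describing identifiers and chunked Merkle structures.

```python
import hashlib


def content_name(data: bytes) -> str:
    """Self-certifying name (simplified assumption: SHA-256 hex digest of the bytes)."""
    return hashlib.sha256(data).hexdigest()


class UntrustedGateway:
    """Hypothetical centralised gateway that may serve stale or tampered data."""

    def __init__(self) -> None:
        self._store: dict = {}

    def put(self, data: bytes) -> str:
        name = content_name(data)
        self._store[name] = data
        return name

    def get(self, name: str) -> bytes:
        return self._store[name]


def verified_fetch(gateway: UntrustedGateway, name: str) -> bytes:
    """Fetch via the gateway, but accept the data only if it matches its name."""
    data = gateway.get(name)
    if content_name(data) != name:
        raise ValueError("content does not match its name")
    return data


if __name__ == "__main__":
    gw = UntrustedGateway()
    name = gw.put(b"hello, decentralised web")
    print(verified_fetch(gw, name))
    gw._store[name] = b"tampered"  # simulate a misbehaving gateway
    try:
        verified_fetch(gw, name)
    except ValueError as err:
        print("rejected:", err)
```

In this design the gateway only has to be available, not trusted. Human-readable names from a name registry would still need a separate, trustworthy mapping to such hash-based names.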

References

Navin V. Keizer, Onur Ascigil, Michał Król, Dirk Kutscher, and George Pavlou; A Survey on Content Retrieval on the Decentralised Web; ACM Computing Surveys; March 2024; https://doi.org/10.1145/3649132

Towards a Unified Transport Protocol for In-Network Computing in Support of RPC-based Applications

The emerging term In-Network Computing (INC) [inc] refers in particular to applying on-path programmable networking devices (e.g., switches and routers between clients and servers) as accelerators or function offloaders to boost throughput, reduce server load, or improve latency, typically in a well-controlled data center network environment.

Some INC implementations evolved from programmable data plane systems and align with the broader trend towards network programmability. In recent years, INC has been shown to support many promising applications (e.g., caching, aggregation, and agreement). For example, in distributed machine learning (DML), training nodes produce data (gradients) that need to be aggregated or reduced, and the result may be distributed to one or multiple consumers. As another example, the NetClone system [netclone] uses in-network forwarders to replicate RPC invocation messages and to perform more informed forwarding based on observed latencies, thereby accelerating RPC communication.

While it is possible to achieve this kind of operation purely with end-to-end communication between worker nodes, performance can be dramatically improved by offloading both the operation processing and the data dissemination to nodes in the network. These in-network processors are often conceived as semi-transparent performance enhancing on-path elements, i.e., they are not the actual endpoints in transport protocol sessions and would intercept packets with application data and potentially generate new data that they would have to transmit.
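To make the offloading argument concrete, the following sketch simulates one on-path aggregator. It sums gradient vectors from several workers and emits a single result. The sketch also gives a rough message count, comparing it with an all-to-all end-to-end exchange. The `Aggregator` class and the counting model are illustrative assumptions, not the design from the draft. Real systems also have to handle packetization, loss, and (on switches) fixed-point arithmetic.

```python
from typing import List, Optional

Vector = List[float]


class Aggregator:
    """Hypothetical on-path element that sums one gradient per worker per round."""

    def __init__(self, expected_workers: int) -> None:
        self.expected = expected_workers
        self.received = 0
        self.acc: Vector = []

    def on_packet(self, gradient: Vector) -> Optional[Vector]:
        """Consume a worker's packet; return the aggregate once all inputs arrived."""
        if not self.acc:
            self.acc = [0.0] * len(gradient)
        # Element-wise sum: the aggregator is not a transport endpoint, it
        # intercepts application data and generates new data to transmit.
        self.acc = [a + g for a, g in zip(self.acc, gradient)]
        self.received += 1
        if self.received == self.expected:
            return self.acc
        return None


def end_to_end_messages(n: int) -> int:
    """Without in-network help: every worker sends its gradient to every other worker."""
    return n * (n - 1)


def in_network_messages(n: int) -> int:
    """With one aggregator: n uploads plus one result delivered to each worker."""
    return 2 * n
```

For eight workers this simple count gives 56 versus 16 messages. The point is the quadratic versus linear growth, not the exact numbers.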

In our Internet Draft draft-song-inc-transport-protocol-req-01.txt, we discuss this problem and formulate some requirements for the design of future transport protocols in this space.

References

- Collective Communication: Better Network Abstractions for AI

- Computing in the Network – Lessons Learned and New Opportunities

- [I-D.yao-tsvwg-cco-problem-statement-and-usecases] Yao, K., Shiping, X., Li, Y., Huang, H., and D. KUTSCHER, "Collective Communication Optimization: Problem Statement and Use cases", Work in Progress, Internet-Draft, draft-yao-tsvwg-cco-problem-statement-and-usecases-00, 23 October 2023, https://datatracker.ietf.org/doc/html/draft-yao-tsvwg-cco-problem-statement-and-usecases-00.

- [inc] Klenk, B., et al., "An In-Network Architecture for Accelerating Shared-Memory Multiprocessor Collectives", ACM/IEEE 47th Annual International Symposium on Computer Architecture (ISCA), 2020, https://dx.doi.org/10.1109/ISCA45697.2020.00085

- [netclone] Kim, G., "NetClone: Fast, Scalable, and Dynamic Request Cloning for Microsecond-Scale RPCs", In Proceedings of the ACM SIGCOMM 2023 Conference (ACM SIGCOMM '23). Association for Computing Machinery, New York, NY, USA, 195-207, 2023 https://dl.acm.org/doi/10.1145/3603269.3604820

AINTEC-2023

It was nice to meet new and old friends at the recent Asian Internet Engineering Conference (AINTEC) in Hanoi last week.

I had the pleasure of contributing three elements to the program:

AINTEC Panel on 6G Research

I had the pleasure of moderating a panel on 6G Research Challenges at AINTEC-2023. The panelists were Serge Fdida, Abhimanyu Gosain, Jim Kurose, and George Michaelson.

Opportunities and Challenges for Future Network Systems Design?

The panel discussed opportunities and challenges for future network systems design and tried to shed some light on what 6G might actually mean and what interesting research could and should be done.

5G Hype vs Reality

While many people are speculating about possible 6G features, it is quite instructive to review the adoption of current 5G technology. The panel discussed this from different perspectives. It was noted that quite a few advanced 5G features, although specified, are not yet available, such as new core designs, low-latency communication, positioning, and network slicing.

There may be different reasons for that. One reason mentioned was the lack of demand: 5G seems to be mostly used as a reasonably fast bit pipe, i.e., as an access technology for mobile broadband. Economically, this means that it is difficult to monetize the network beyond that.

The panel discussed whether WiFi and 5G will integrate as just two "localized" link-level wireless technologies at the Internet edge, or whether 5G will actually provide a global end-to-end network, interconnected to the Internet.

Centralization and new Deployment Models

Another interesting topic is the evolution of deployment models and the changing nature of service providers and infrastructure providers. Not only do hyperscalers provide most of the "over-the-top" functionality and infrastructure today, they also increasingly provide the cloud infrastructure and telco software functions, such as Microsoft with its "Azure for Operators" platform. The panel also discussed the issues of commercial consolidation and concentration in this regard.

Key Enablers for 6G

We discussed potential key enablers for 6G, and the following topics were mentioned:

- AI/ML Native Interface

- New Spectrum Technologies: 7-24 GHz, 300GHz-1THz

- Networking as a Sensor: Shift from Radio KPI to system and service focused

- Communication-Compute-Data Centric

- Zero Trust Architecture (ZTA): Security and Trust

- Open Radio Access Networks

With respect to "Communication-Compute-Data-Centricity", we discussed whether it would be the mobile network infrastructure that would provide features in this direction, e.g., a better integration of computing and networking, or whether the network would just provide the access service, and computing etc. would continue being an application (also see my invited talk on computing in the network at AINTEC-2023). The panel expressed some preference for maintaining a separation of concerns, layering, and the end-to-end principle.

Another topic that was discussed was the continuing "softwarization" and the application of Software-Defined Networking (SDN) principles. Future systems may see some more management support for applications (and application-related infrastructure), and there is certainly a trend towards more autonomous management and the use of machine learning for that.

References

- Azure for operators

- Tim Wu; The Master Switch: The Rise and Fall of Information Empires; Columbia Law School; 2010

Computing in the Network – Lessons Learned and New Opportunities

The Internet is a distributed system that enables distributed computing applications, from client-server web applications to collaborative multi-media applications. The evolution of both compute server and network infrastructure platforms has fueled the development of new approaches for building more programmable networks and of application support functions in the network.

At the same time, new applications such as IoT data processing, distributed machine learning, decomposed application architectures such as microservices, and distributed computing frameworks introduce new opportunities for the development of more principled approaches towards Computing in the Network.

In my invited talk at AINTEC-2023, I reviewed some promising use cases, highlighted recent relevant research results and discussed several research challenges for conceiving Computing in the Network from an Internet perspective, for example discussing the meaning of "end-to-end communication" and "permissionless innovation" in the light of these new developments.

From "In-Network Computing"...

"In-Network Computing" is a popular but also relatively poorly defined term that comes up a lot in recent research studies. I discussed the different facets such as traditional networked computing, middlebox-like packet processing, active networking, programmable dataplane, Network Functions Virtualization, and Service Function Chaining as depicted in the figure below.

In general, we can distinguish two main directions:

- Computing on the Network: general distributed computing using Internet technologies for communication, such as the Web and related overlay networks such as CDNs.

- Middlebox-like packet processing: intercepting, manipulating, generating, and steering packets has been applied to production networks in data centers and telco networks, often as a performance enhancing approach.

What about Programmable Data Plane?

Programmable Data Plane approaches such as the P4 programming language are often used to implement certain elements of either of these two categories, for example, traffic steering and load balancing. There are some point solutions for more application-layer-oriented functionality, such as NetCache, support for distributed consensus protocols, and support for distributed machine learning training, but these typically operate under very specific assumptions and are often at odds with end-to-end semantics and security. In my opinion, one example of a productive use of Programmable Data Plane was the SIGCOMM-2023 paper on NetClone: Fast, Scalable, and Dynamic Request Cloning for Microsecond-Scale RPCs by Gyuyeong Kim. In this work, programmable switches were used to implement request forwarding strategies based on relatively simple packet meta information and observed performance, i.e., without requiring application layer knowledge.
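The kind of forwarding logic described for NetClone can be sketched without any application knowledge. The switch only sees a request ID, a server ID, and the per-server state it has observed. This is a much-simplified sketch inspired by the idea, not NetClone's actual P4 design. The `CloningSwitch` class, its "clone only when two servers are idle" rule, and its unbounded response table are assumptions for illustration. A real switch would use fixed-size register arrays.

```python
import random
from typing import Dict, List, Set


class CloningSwitch:
    """Hypothetical request-cloning forwarder using only packet metadata."""

    def __init__(self, servers: List[str]) -> None:
        self.outstanding: Dict[str, int] = {s: 0 for s in servers}  # observed load
        self.answered: Set[int] = set()  # request IDs already answered

    def forward_request(self, req_id: int) -> List[str]:
        """Pick target server(s) for a request based on observed state."""
        idle = [s for s, n in self.outstanding.items() if n == 0]
        if len(idle) >= 2:
            targets = random.sample(idle, 2)  # clone only when it is cheap
        else:
            targets = [min(self.outstanding, key=self.outstanding.get)]
        for s in targets:
            self.outstanding[s] += 1
        return targets

    def on_response(self, req_id: int, server: str) -> bool:
        """Return True if this response should be delivered to the client."""
        self.outstanding[server] -= 1
        if req_id in self.answered:
            return False  # the slower clone's response is filtered out
        self.answered.add(req_id)
        return True
```

The client sees exactly one response per request, whichever clone finishes first. This keeps the end-to-end RPC semantics intact.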

... To "Computing in the Network"

There are many relevant use cases of distributed computing that can benefit from (and urgently need) support from networking and where distributing processing, aggregation etc. with awareness of network topologies, current utilization etc. would make a real difference. We have earlier built such a system and called it Compute-First Networking: Distributed Computing meets ICN (see https://dirk-kutscher.info/publications/distributed-computing-icn/ for background).

I talked about relevant applications such as distributed stream processing and distributed machine learning. Today, these systems are typically run on the network but could definitely benefit from better support and from better awareness of the network, so I asked whether there is a possibility for a confluence of existing and emerging capabilities of modern hardware and the requirements of relevant distributed computing applications.

Questions I raised included:

- How can we conceive such a confluence?

- How can we support distributed computing without giving up layering and principles such as the end-to-end principle?

- What features do we need from transport protocols to support diverse use cases?

Distributed Machine Learning

Distributed machine learning, e.g., federated learning, is an application that is currently perceived as a major driver for in-network computing. Large-scale training networks are expected to enable higher degrees of parallelization and handling of larger model sizes. How would we run such workloads as distributed systems, within data centers but potentially also across the Internet?

It is important to understand the performance requirements of such systems. Initial systems were built with bespoke High-Performance Computing (HPC) architectures and communication technologies such as InfiniBand. Such systems used in-network aggregation functions and defined corresponding architectures such as SHArP.

Today's data center systems employ RDMA and RDMA over Ethernet (RoCE) as a low-layer abstraction for efficient packet-based communication on layer 2, without addressing higher-layer transport and system design aspects.

Collective Communications

In parallel computing architectures, Message Passing Interface (MPI) is typically used to provide efficient and portable inter-process communication for high-performance computing. One of the concepts developed in MPI is Collective Communication, a set of bespoke data aggregation and distribution patterns for different data-oriented distributed computing scenarios, such as:

- Broadcasting, e.g., for distributing configuration data or common ML models

- Scattering: a single process sending distinct pieces of data to each process

- Gathering: one process collecting and combining data pieces from other processes

- All-to-all communication: every process sends data to every other process

- Reduction: collect data from all processes, aggregate and send result
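The semantics of these patterns can be written as pure functions over per-process data. This abstracts away how the data actually travels, which is exactly the part the network has to provide. The following is an illustrative sketch only, not the MPI API; in MPI the corresponding operations include `MPI_Bcast`, `MPI_Scatter`, `MPI_Gather`, `MPI_Alltoall`, and `MPI_Reduce`. Simulated in one address space, scatter and gather degenerate to copies.

```python
from functools import reduce
from operator import add
from typing import Any, Callable, List


def broadcast(root_value: Any, n: int) -> List[Any]:
    """Broadcast: all n processes end up with the root's value."""
    return [root_value] * n


def scatter(chunks: List[Any]) -> List[Any]:
    """Scatter: the root holds one chunk per process; process i receives chunks[i]."""
    return list(chunks)


def gather(values: List[Any]) -> List[Any]:
    """Gather: the root collects the value contributed by each process, in rank order."""
    return list(values)


def all_to_all(send: List[List[Any]]) -> List[List[Any]]:
    """All-to-all: send[i][j] goes from process i to j; process j receives column j."""
    n = len(send)
    return [[send[i][j] for i in range(n)] for j in range(n)]


def reduce_to_root(values: List[Any], op: Callable[[Any, Any], Any] = add) -> Any:
    """Reduction: combine one value per process with an associative operator."""
    return reduce(op, values)


def all_reduce(values: List[Any], op: Callable[[Any, Any], Any] = add) -> List[Any]:
    """All-reduce: a reduction whose result is delivered to every process."""
    return [reduce_to_root(values, op)] * len(values)
```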

Today's Collective Communication implementations realize these patterns for different underlying networks and inter-process communication facilities. For GPU-based Collective Communication in today's networks, ring-based communication is often applied, leading to considerable inefficiencies with respect to communication overhead and idle times of the different processors. See this presentation from Tencent at the recent AIDC side meeting at IETF-118. Other implementations use peer-to-peer communication models.
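A common ring-based scheme is the ring all-reduce. It consists of a reduce-scatter phase followed by an all-gather phase, each taking n-1 neighbour-to-neighbour steps. The sketch below simulates it in lock-step within one process. It assumes a sum operator and integer vectors whose length is divisible by the number of processes, and it is not taken from any particular library.

```python
from typing import List


def ring_all_reduce(data: List[List[int]]) -> List[List[int]]:
    """Sum-all-reduce over a logical ring of n processes (lock-step simulation).

    data[i] is process i's vector; its length must be divisible by n.
    Returns each process's buffer afterwards (all equal to the element-wise sum).
    """
    n = len(data)
    size = len(data[0]) // n
    buf = [list(v) for v in data]  # each process owns a private copy

    def chunk(c: int) -> slice:
        return slice(c * size, (c + 1) * size)

    # Phase 1, reduce-scatter: in step s, process i sends chunk (i - s) mod n
    # to its right neighbour, which adds it to its own copy of that chunk.
    for s in range(n - 1):
        msgs = [(i, (i - s) % n, buf[i][chunk((i - s) % n)]) for i in range(n)]
        for i, c, payload in msgs:
            dst = (i + 1) % n
            buf[dst][chunk(c)] = [a + b for a, b in zip(buf[dst][chunk(c)], payload)]
    # Now process i holds the fully reduced chunk (i + 1) mod n.

    # Phase 2, all-gather: the reduced chunks travel around the ring once more.
    for s in range(n - 1):
        msgs = [(i, (i + 1 - s) % n, buf[i][chunk((i + 1 - s) % n)]) for i in range(n)]
        for i, c, payload in msgs:
            buf[(i + 1) % n][chunk(c)] = payload
    return buf
```

Each of the 2(n-1) steps depends on the neighbour finishing the previous step, so the slowest link or processor paces the whole ring. This is one plausible source of the idle times mentioned above.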

Collective Communication in the Network

From a networking perspective, the question is how to better map collective communication to networked systems based on Internet technology, avoiding unnecessary duplication, providing typical transport protocol features such as reliability and congestion control, and enabling an optimal placement of the corresponding aggregation functions.

This would incur a set of challenges, such as:

- Transport
  - Reliability: the underlying network lacks communication reliability
  - Application data units instead of packets
  - Blocking & non-blocking communication modes
  - Security (potentially)

- Multi-destination delivery
  - IP-Multicast possibly not the best fit

- Computing in the Network Framework
  - Generic operations as primitives (at least per application domain)
  - Stringent performance requirements

- Control, Optimizations, Management
  - Topology and utilization awareness
  - Scheduling communication and computation for optimal performance
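The value of topology awareness for placing aggregation functions can be illustrated by counting the update messages that cross each link. In this count, every worker sends one update towards a sink, such as a parameter server. The model and the `link_loads` helper are hypothetical and ignore bandwidth, utilization, and timing. The model only shows how the choice of aggregation points changes per-link load.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def link_loads(parent: Dict[str, str], aggregators: Set[str]) -> Dict[Tuple[str, str], int]:
    """Messages per upward link when every leaf (worker) sends one update.

    parent maps each node to its next hop towards the sink. Nodes listed in
    `aggregators` combine all updates they receive into a single message.
    """
    children: Dict[str, List[str]] = defaultdict(list)
    for node, up in parent.items():
        children[up].append(node)

    def sent_up(node: str) -> int:
        kids = children.get(node, [])
        if not kids:  # a worker produces exactly one update
            return 1
        incoming = sum(sent_up(k) for k in kids)
        return 1 if node in aggregators else incoming

    return {(node, up): sent_up(node) for node, up in parent.items()}


if __name__ == "__main__":
    # Two racks with two workers each, one spine switch, one parameter server.
    topo = {"w1": "tor1", "w2": "tor1", "w3": "tor2", "w4": "tor2",
            "tor1": "spine", "tor2": "spine", "spine": "ps"}
    for placement in (set(), {"spine"}, {"tor1", "tor2", "spine"}):
        print(sorted(placement), link_loads(topo, placement)[("spine", "ps")])
```

Aggregating only at the spine relieves the server link but not the rack uplinks. Aggregating at every switch keeps every upward link at one message per round.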

We discussed these challenges in two recently submitted Internet Drafts on Transport for Collective Communications, and I discussed these issues in more detail during the talk.

Data-Oriented Collective Communications

I proposed the direction of data-oriented Collective Communication and discussed how concepts from Information-Centric distributed computing could possibly be employed to achieve efficient and practical multi-destination transport, reliability and congestion control, and flexible placement of aggregation functions with a name-based identity scheme.

Promising features would include:

- Data-oriented communication model

- Locator-less model conducive to data production and consumption at different places in the network (computing)

- Multi-destination delivery included

- In-network retransmission and caching could help with reliability and performance
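A toy, single-node sketch shows how a name-based, locator-less reduction could look. Workers publish named contributions, and any consumer asks for the named aggregate without knowing where it is computed. A cached result then serves further consumers without recomputation. The naming convention (`/job42/iter7/w0`, `/job42/iter7/sum`), the `Node` class, and binding computations to the last name component are hypothetical. The sketch does not model NDN/CCNx packet formats, PIT state, forwarding, or signatures.

```python
from typing import Callable, Dict


class Node:
    """Hypothetical ICN-style node: a content store plus named computations."""

    def __init__(self) -> None:
        self.content_store: Dict[str, float] = {}
        self.functions: Dict[str, Callable[["Node", str], float]] = {}
        self.computations = 0

    def publish(self, name: str, value: float) -> None:
        self.content_store[name] = value

    def register(self, component: str, fn: Callable[["Node", str], float]) -> None:
        """Bind a computation to names whose last component is `component`."""
        self.functions[component] = fn

    def express_interest(self, name: str) -> float:
        if name in self.content_store:  # cached: no recomputation, no new traffic
            return self.content_store[name]
        component = name.rsplit("/", 1)[1]
        if component not in self.functions:
            raise KeyError(f"no data or computation for {name}")
        self.computations += 1
        value = self.functions[component](self, name)
        self.content_store[name] = value  # result becomes named, cacheable data
        return value


def make_sum(n_workers: int) -> Callable[[Node, str], float]:
    """Aggregate /<prefix>/sum by requesting /<prefix>/w0 .. w{n-1} by name."""
    def compute(node: Node, name: str) -> float:
        prefix = name.rsplit("/", 1)[0]
        return sum(node.express_interest(f"{prefix}/w{i}") for i in range(n_workers))
    return compute
```

A second consumer asking for `/job42/iter7/sum` is served from the content store. This is a simple stand-in for the multi-destination delivery and in-network caching listed above.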

However, I also mentioned some challenges:

- Receiver-driven transport results in polling – efficient enough?

- RDMA-like communication unexplored

- Security concept: data-oriented security good – unclear whether it can be afforded

- Exact scheduling may be at odds with current ICN system design – more work needed

In summary, this seems to be a rich field for future systems research. Distributed machine learning drives the development of new concepts for communication and computing. It clearly needs efficient multi-destination communication and an efficient mapping of MPI-inspired Collective Communication. The current abstractions do not fit well, and pure IP packet-level communication is too limited. Connection-oriented transport seems to be at odds with the communication semantics, which makes data-oriented communication attractive. Such a model could work with name-based communication, i.e., without addresses, which is conducive to data production and consumption at different places in the network. Certainly, the challenging performance requirements call for more research and possibly an evolution of current ICN protocols.

References

- [CFN-ICN] Compute-First Networking: Distributed Computing meets ICN

- [DISTCOMPICN] Distributed Computing in ICN

- [IETFCollectiveCommunications] Collective Communication: Better Network Abstractions for AI

- [IETF118AIDC] Side meeting at IETF-118 on AI in Data Centers

- [IETF118CC] Side meeting at IETF-118 on Collective Communications

- [NETCLONE] NetClone: Fast, Scalable, and Dynamic Request Cloning for Microsecond-Scale RPCs

- [RoCE] RDMA over Ethernet (RoCE)

- [SHARP] Richard L. Graham, Devendar Bureddy, Pak Lui, Hal Rosenstock, Gilad Shainer, Gil Bloch, Dror Goldenberg, Mike Dubman, Sasha Kotchubievsky, Vladimir Koushnir, Lion Levi, Alex Margolin, Tamir Ronen, Alexander Shpiner, Oded Wertheim, and Eitan Zahavi. 2016. Scalable hierarchical aggregation protocol (SHArP): a hardware architecture for efficient data reduction. In Proceedings of the First Workshop on Optimization of Communication in HPC (COM-HPC '16). IEEE Press, 1–10.

Cornerstone: Automating Remote NDN Entity Bootstrapping

We published a paper on automated remote bootstrapping in Named Data Networking (NDN):

Tianyuan Yu, Xinyu Ma, Hongcheng Xie, Dirk Kutscher, Lixia Zhang; Cornerstone: Automating Remote NDN Entity Bootstrapping; Asian Internet Engineering Conference (AINTEC) 2023; December 2023; https://doi.org/10.1145/3630590.3630598

Abstract

To secure all communications, Named Data Networking (NDN) requires that each entity joining an NDN network go through a bootstrapping process first, to obtain its initial security credentials. Several solutions have been developed to bootstrap IoT devices in localized environments, where the devices being bootstrapped are within the physical reach of their bootstrapper. However, distributed applications need to bootstrap remote users and devices into an NDN-based system over insecure Internet connectivity. In this work, we take Hydra, a federated distributed file storage system made of servers contributed by multiple participating organizations, as a use case to drive the design and development of a remote bootstrapping solution, dubbed Cornerstone. We describe the design of Cornerstone, evaluate its effectiveness, and discuss the lessons learned from this process.

Rethinking LoRa for the IoT: An Information-centric Approach

We just published our IEEE Communications Magazine article on Rethinking LoRa for the IoT with an Open Access license.

LoRaWAN and the Internet

The Internet of Things (IoT) interconnects numerous sensors and actuators either locally or across the global Internet. From an application perspective, IoT systems are inherently data-oriented: their purpose is often to provide access to named sensor data and control interfaces. From a device and communication perspective, things in the IoT are resource-constrained devices that are commonly powered by a small battery and communicate wirelessly.

LoRaWAN systems today integrate the LoRa physical layer with the LoRaWAN MAC layer and corresponding infrastructure support. Among the IoT radio technologies, LoRa is a versatile and popular candidate since it provides a physical layer that allows for data transmission over multiple kilometers with minimal energy consumption. At the same time, the high LoRa receiver sensitivity enables packet reception in noisy environments, which makes it attractive for industrial deployments. On the downside, LoRa achieves only low data rates requiring long on-air times, and significantly higher latencies compared to radios that are typically used for Internet access.
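The long on-air times can be quantified with the time-on-air formula from Semtech's LoRa transceiver documentation (SX127x datasheet). The sketch below implements that formula. The defaults are assumptions that match common configurations: an 8-symbol preamble, explicit header, CRC on, and coding rate 4/5. Low-data-rate optimisation is switched on when the symbol time exceeds 16 ms, as Semtech requires.

```python
import math


def lora_time_on_air_ms(payload_bytes: int, sf: int, bw_khz: float = 125.0,
                        cr: int = 1, preamble_symbols: int = 8,
                        explicit_header: bool = True, crc: bool = True) -> float:
    """Airtime of one LoRa frame in milliseconds (Semtech SX127x formula).

    sf is the spreading factor (7..12); cr = 1..4 means coding rate 4/5 .. 4/8.
    """
    t_sym = (2 ** sf) / bw_khz                # symbol duration in ms
    de = 1 if t_sym > 16.0 else 0             # low-data-rate optimisation
    ih = 0 if explicit_header else 1          # implicit header flag
    t_preamble = (preamble_symbols + 4.25) * t_sym
    numerator = 8 * payload_bytes - 4 * sf + 28 + 16 * int(crc) - 20 * ih
    n_payload = 8 + max(math.ceil(numerator / (4 * (sf - 2 * de))) * (cr + 4), 0)
    return t_preamble + n_payload * t_sym


if __name__ == "__main__":
    for sf in (7, 9, 12):
        print(f"SF{sf}: {lora_time_on_air_ms(20, sf):.1f} ms for a 20-byte payload")
```

At 125 kHz, a 20-byte payload takes about 57 ms at SF7 but about 1.3 s at SF12. That is more than a twenty-fold increase, traded for range and receiver sensitivity.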

LoRaWAN MAC Layer

The LoRaWAN MAC layer and network architecture that are often used in LoRa deployments thus provide a vertically integrated sensor data delivery service on top of the LoRa PHY, implementing media access and end-to-end network connectivity. Unfortunately, LoRaWAN cannot utilize the LoRa PHY to its best potential with respect to throughput and robustness and is mostly used for upstream-only communication. It is not intended to directly interconnect with the Internet, but relies on a bespoke middlebox architecture consisting of gateways and network servers. Overall, LoRaWAN has the following main problems, as depicted in the figure below.

- Centralization around a network server prevents data sharing between users, across distributed applications, and requires permanent infrastructure backhaul of the wireless access network.

- Uplink-oriented and uncoordinated communication leads to wireless interference. Downlink traffic is rarely available in practice and suffers from scalability issues.
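Uncoordinated uplinks scale poorly, and a classic idealized model helps show why. LoRaWAN Class A devices transmit without coordination and are widely modelled as pure ALOHA. Assume equal-length frames and Poisson offered load $G$ (frames per frame time). A frame then succeeds only if no other frame starts within a vulnerable window of two frame times. The model ignores LoRa-specific effects such as capture, spreading-factor orthogonality, and duty-cycle limits, so it is an illustration rather than a LoRaWAN capacity result.

```latex
P_{\text{success}} = e^{-2G}, \qquad S = G\,e^{-2G}

\frac{dS}{dG} = e^{-2G}\,(1 - 2G) = 0
\;\Rightarrow\; G^{*} = \tfrac{1}{2}, \qquad
S_{\max} = \frac{1}{2e} \approx 0.184
```

Under this model, at most about 18% of the channel time carries successful frames, however many devices are added.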

Data-centric Delay-tolerant End-to-End Communication over the Internet

This paper presents an overview of recent advancements to enable data-centric, long-range IoT communication based on LoRa. The proposed network system aims for delay-tolerant, bi-directional communication in the presence of vastly longer latencies and lower bandwidth compared to regular Internet systems – without relying on vertically integrated middlebox-based architectures. The resulting system resolves current LoRaWAN performance issues using two main building blocks: a new network layer based on Information-centric Networking (ICN) and a new MAC layer.

ICN, originally designed for non-constrained wired networks to abandon the end-to-end paradigm and to access data only by name instead of by IP endpoint, has migrated to the constrained wireless IoT over the past years. ICN still lacks a lower layer definition but provides mechanisms that are beneficial for the challenging LoRa domain: decoupling content from endpoints separates data access from physical infrastructure. Inherent content caching and replication potentially reduce link load and thus wireless interference, and they preserve battery resources. The ICN-LoRa system presented in this paper bases its design on IEEE 802.15.4 DSME, which was originally designed for low-power personal area networks. This MAC handles media access reliably using time and frequency multiplexing and enables reliable bi-directional communication.
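Time and frequency multiplexing means giving each link its own (time slot, channel) cell, so that scheduled transmissions do not collide. The sketch below is a hypothetical greedy allocator that illustrates the constraint. It is not the DSME guaranteed-time-slot negotiation defined in IEEE 802.15.4, and the node names are made up. Its two rules are that a cell serves at most one link, and that a half-duplex node takes part in at most one link per slot.

```python
from typing import Dict, List, Tuple

Link = Tuple[str, str]  # (sender, receiver)
Cell = Tuple[int, int]  # (time slot, channel)


def allocate_cells(links: List[Link], n_slots: int, n_channels: int) -> Dict[Link, Cell]:
    """Greedily assign each link a dedicated (slot, channel) cell."""
    used_cells = set()
    busy: Dict[int, set] = {s: set() for s in range(n_slots)}  # nodes active per slot
    schedule: Dict[Link, Cell] = {}
    for src, dst in links:
        for slot in range(n_slots):
            if src in busy[slot] or dst in busy[slot]:
                continue  # half-duplex: a node cannot serve two links in one slot
            free = [ch for ch in range(n_channels) if (slot, ch) not in used_cells]
            if free:
                cell = (slot, free[0])
                used_cells.add(cell)
                busy[slot].update((src, dst))
                schedule[(src, dst)] = cell
                break
        else:
            raise RuntimeError(f"no free cell for link {src}->{dst}")
    return schedule
```

Two sensors that report to the same gateway end up in different slots, while an unrelated pair can share a slot on another channel. Frequency diversity adds capacity without adding collisions.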

Synergizing the advantages of LoRa, DSME, and ICN enables delay-tolerant, bi-directional LoRa communication, which enhances many existing IoT applications. Wide area data retrieval and control, as for solar power stations or smart street lighting systems, are facilitated by the new MAC and its ICN integration. High voltage overhead line monitoring, which connects voltage sensors and transformers, relies on high data reliability, even under intermittent connectivity or loss. ICN achieves this by employing content caching and replication. Traveling container monitoring (RFC 7744) is challenging due to mobility and interference from metallic surfaces, where LoRa surpasses other radio systems. LoRa-ICN naturally provides decoupling of content from its location for mobile containers, as well as adaptation to long producer delays.

Results

In our paper, we provide the essential technical background and challenges to design a LoRa-ICN system. We identify the key performance potentials of five protocol variants based on an implementation in RIOT OS and experiments on off-the-shelf IoT devices.

LoRa is an attractive radio technology for the IoT, providing a long wireless transmission range for battery-driven devices. Its versatility is hindered, though, by common deployments with LoRaWAN. We re-visited LoRa in the IoT to provide a serverless, data-oriented communication service. We presented the design of a new media access and network layer that leverages 802.15.4 DSME and Information-centric Networking to allow for reliable LoRa transmissions. To scale to a global Internet (of Things), LoRa-ICN facilitates ubiquitous connectivity of constrained nodes and robust bi-directional communication in the presence of power-saving regimes and high loss rates.

We showed that vastly higher latencies in low-power wireless domains can be addressed by extending the default ICN node behavior at the network edge. Two protocol extensions enable ICN-style data transport between resource-constrained LoRa nodes and a domain-agnostic application on the ICN Internet. The core idea is not limited to LoRa but caters to various delay-prone scenarios. Our experiments based on common IoT hardware and software showed significant performance improvements and further optimization potential compared to Vanilla ICN.

The new LoRa-ICN system paves the way for more versatile LoRa deployments in the IoT that serve additional use cases, mixed sensor-actor topologies, or firmware updates utilizing beacon overloading.

References

This article

- P. Kietzmann, J. Alamos, D. Kutscher, T. C. Schmidt and M. Wählisch, Rethinking LoRa for the IoT: An Information-centric Approach in IEEE Communications Magazine, doi: 10.1109/MCOM.001.2300379.

Reflexive forwarding

The ICN communication mechanisms this work is based on.

- Reflexive Forwarding in NDN

- Oran, D. R. and D. Kutscher; Reflexive Forwarding for CCNx and NDN Protocols; Work in Progress; Internet-Draft draft-oran-icnrg-reflexive-forwarding-05; 26 March 2023

In-depth publications this work is based on

- Peter Kietzmann, José Alamos, Dirk Kutscher, Thomas C. Schmidt, and Matthias Wählisch. 2022. Delay-tolerant ICN and its application to LoRa. In Proceedings of the 9th ACM Conference on Information-Centric Networking (ICN '22). Association for Computing Machinery, New York, NY, USA, 125–136. https://doi.org/10.1145/3517212.3558081

- P. Kietzmann, J. Alamos, D. Kutscher, T. C. Schmidt and M. Wählisch, Long-Range ICN for the IoT: Exploring a LoRa System Design, 2022 IFIP Networking Conference (IFIP Networking), Catania, Italy, 2022, pp. 1-9, doi: 10.23919/IFIPNetworking55013.2022.9829792. https://ieeexplore.ieee.org/document/9829792

- José Álamos, Peter Kietzmann, Thomas C. Schmidt, and Matthias Wählisch. 2022. DSME-LoRa: Seamless Long-range Communication between Arbitrary Nodes in the Constrained IoT. ACM Trans. Sen. Netw. 18, 4, Article 69 (November 2022), 43 pages. https://doi.org/10.1145/3552432

Collective Communication: Better Network Abstractions for AI

We have submitted two new Internet Drafts on Collective Communication:

- Kehan Yao, Xu Shiping, Yizhou Li, Hongyi Huang, Dirk Kutscher; Collective Communication Optimization: Problem Statement and Use cases; Internet Draft draft-yao-tsvwg-cco-problem-statement-and-usecases-00; work in progress; October 2023

- Kehan Yao, Xu Shiping, Yizhou Li, Hongyi Huang, Dirk Kutscher; Collective Communication Optimization: Requirement and Analysis; Internet Draft draft-yao-tsvwg-cco-requirement-and-analysis-00; work in progress; October 2023

Collective Communication refers to communication between a group of processes in distributed computing contexts, for example involving interaction types such as broadcast, reduce, all-reduce. This data-oriented communication model is employed by distributed machine learning and other data processing systems, such as stream processing. Current Internet network and transport protocols (and corresponding transport layer security) make it difficult to support these interactions in the network, e.g., for aggregating data on topologically optimal nodes for performance enhancements. These two drafts discuss use cases, problems, and initial ideas for requirements for future system and protocol design for Collective Communication. They will be discussed at IETF-118.

Network Abstractions for Continuous Innovation

In a joint panel at ACM ICN-2023 and IEEE ICNP-2023 in Reykjavik, Ken Calvert, Jim Kurose, Lixia Zhang, and myself discussed future network abstractions. The panel was moderated by Dave Oran. This was one of the more interesting and interactive panel sessions I participated in, so I am providing a summary here.

Since the Internet's initial rollout ~40 years ago, not only has its global connectivity brought fundamental changes to society and daily life, but its protocol suite and implementations have also gone through many iterations of change, with SDN, NFV, and programmability among the changes of the last decade. This panel looks into the next decade of network research by asking a set of questions about where the future direction lies to enable continued innovation.

Opportunities and Challenges for Future Network Innovations

Lixia Zhang: Rethinking Internet Architecture Fundamentals

Lixia Zhang (UCLA), quoting Einstein, said that the formulation of the problem is often more essential than the solution, and pointed at the complexity of today's protocol stacks that are apparently needed to achieve desired functionality. For example, Lixia mentioned RFC 9298 on proxying UDP in HTTP, specifically on tunneling UDP to a server acting as a UDP-specific proxy over HTTP. UDP over IP was once conceived as a minimal message-oriented communication service intended for DNS and interactive real-time communication. Due to its push-based communication model, it can be used with minimal effort for useful but also harmful applications, including large-scale DDoS attacks. Proxying UDP over HTTP addresses this and other concerns by providing a secure channel to a server in a web context, so that the server can authorize tunnel endpoints, and so that the UDP communication is congestion controlled by the underlying transport protocol (TCP or QUIC). This specification can be seen as a workaround: sending unsolicited (and unauthenticated) messages is a major problem in today's Internet. There is no general approach for authenticating such messages and no concept for trust in peer identities. Instead of analyzing the root cause of such problems, the Internet communities (and the dominant players in that space) prefer to come up with (highly inefficient) workarounds.
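RFC 9298 requires a client to assemble two pieces before any UDP payload flows. The first is an extended CONNECT request that opens the tunnel; the sketch uses the RFC's default well-known URI template for it. The second is an HTTP Datagram payload for each UDP payload. It is the UDP payload prefixed with a Context ID (0 for plain UDP payloads), encoded as a QUIC variable-length integer (RFC 9000). The sketch only builds header fields and bytes and uses no HTTP/3 library. The proxy and target names are example values.

```python
from urllib.parse import quote


def encode_varint(value: int) -> bytes:
    """QUIC variable-length integer encoding (RFC 9000, Section 16)."""
    if value < 0:
        raise ValueError("varints are unsigned")
    if value < 1 << 6:
        return value.to_bytes(1, "big")
    if value < 1 << 14:
        return (value | 0x4000).to_bytes(2, "big")
    if value < 1 << 30:
        return (value | 0x8000_0000).to_bytes(4, "big")
    if value < 1 << 62:
        return (value | 0xC000_0000_0000_0000).to_bytes(8, "big")
    raise ValueError("value too large for a QUIC varint")


def connect_udp_request(proxy_authority: str, target_host: str, target_port: int) -> dict:
    """Extended CONNECT header fields for UDP proxying (RFC 9298 default template)."""
    # IPv6 literals must be percent-encoded (":" becomes "%3A") inside the path.
    path = f"/.well-known/masque/udp/{quote(target_host, safe='')}/{target_port}/"
    return {
        ":method": "CONNECT",
        ":protocol": "connect-udp",
        ":scheme": "https",
        ":path": path,
        ":authority": proxy_authority,
        "capsule-protocol": "?1",
    }


def udp_datagram_payload(udp_payload: bytes, context_id: int = 0) -> bytes:
    """HTTP Datagram payload: Context ID (varint) followed by the UDP payload."""
    return encode_varint(context_id) + udp_payload
```

The layering Lixia criticised is visible here. A DNS query, for example, ends up inside an HTTP Datagram, inside QUIC, inside UDP/IP, all to recover properties (authorization, congestion control) that the bare UDP service lacks.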

This problem was discussed more generally by Oliver Spatscheck of AT&T Labs in his 2013 article titled Layers of Success, where he discussed the (actually deployed) excessive layering in production networks, for example mobile communication networks, where regular Internet traffic is routinely tunneled over GTP/UDP/IP/MPLS:

The main issue with layering is that layers hide information from each other. We could see this as a benefit, because it reduces the complexities involved in adding more layers, thus reducing the cost of introducing more services. However, hiding information can lead to complex and dynamic layer interactions that hamper the end-to-end system’s reliability and are extremely difficult if not impossible to debug and operate. So, much of the savings achieved when introducing new services is being spent operating them reliably.

According to Lixia, the excessive layering stems from more fundamental problems with today's network architecture, notably the lack of identity and trust in the core Internet protocols and the lack of functionality in the forwarding system, which leads to significant problems today, as exemplified by recent DDoS attacks. Quoting Einstein again, she said that we cannot solve problems by using the same kind of thinking we used when we created them, and called for a more fundamental redesign based on information-centric networking principles.
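To make the RFC 9298 mechanism from Lixia's example a bit more concrete, the following Python sketch formats the HTTP/1.1 request a client might send to ask a proxy to open a UDP tunnel. It is modeled on the HTTP/1.1 example in RFC 9298, which uses the default well-known URI template `/.well-known/masque/udp/{target_host}/{target_port}/`; the proxy name `example.org`, the target addresses, and the helper name `connect_udp_request` are illustrative assumptions. The sketch only builds the upgrade request: it opens no connections, and the UDP payloads that would follow (carried as HTTP Datagrams) are out of scope.

```python
from urllib.parse import quote

# Default well-known URI template from RFC 9298; a proxy may advertise its own
# template instead, so treat this path as an assumption.
TEMPLATE = "https://{proxy}/.well-known/masque/udp/{target_host}/{target_port}/"


def connect_udp_request(proxy: str, target_host: str, target_port: int) -> str:
    """Hypothetical helper: format an HTTP/1.1 connect-udp upgrade request.

    This is a sketch for illustration, not a working MASQUE client.
    """
    # Percent-encode the host so the colons of an IPv6 literal do not leak
    # into the URI path structure (':' becomes '%3A').
    host = quote(target_host, safe="")
    uri = TEMPLATE.format(proxy=proxy, target_host=host, target_port=target_port)
    lines = [
        f"GET {uri} HTTP/1.1",
        f"Host: {proxy}",
        "Connection: Upgrade",
        # Asks the proxy to turn this HTTP exchange into a UDP tunnel.
        "Upgrade: connect-udp",
        # Signals use of the Capsule Protocol (RFC 9297) on this stream.
        "Capsule-Protocol: ?1",
    ]
    # HTTP/1.1 header lines end with CRLF; an empty line ends the header block.
    return "\r\n".join(lines) + "\r\n\r\n"


if __name__ == "__main__":
    print(connect_udp_request("example.org", "192.0.2.6", 443))
    print(connect_udp_request("example.org", "2001:db8::1", 53))
```

Even this minimal request illustrates the kind of stacking discussed above: a UDP datagram ends up inside an HTTP exchange, which itself runs over TLS and TCP (or over QUIC, which in turn runs over UDP).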

Ken Calvert: Domain-specific Networking

Ken Calvert (University of Kentucky) provided a retrospective of networking research, looking at selected papers published at the first IEEE ICNP conference in 1993. According to Ken, the dominant theme at that time was how to design, build, and analyze protocols, as discussed, for example, in his 1993 ICNP paper titled Beyond layering: modularity considerations for protocol architectures.

Ken offered a set of challenges and opportunities for future networking research, such as:

- Domain-specific networking à la Ex uno pluria, a 2018 CCR editorial discussing:

- infrastructure ossification;

- lack of service innovation; and

- a fragmentation into "ManyNets" that could re-create a service-infrastructure innovation cycle.

- Incentives and "money flow"

- Can we escape from the advertising-driven Internet app ecosystem? Should we?

- Wide-area multicast (many-to-many) service

- Building block for distributed applications?

- Inter-AS trust relationships

- Ossification of the Inter-AS interface – cannot be solved by a protocol!

- Impact ⇐ Applications ⇐ Business opportunities ($)

- What user problem cannot be solved today?

- "The core challenge of CS ... is a conceptual one, viz., what (abstract) mechanisms we can conceive without getting lost in the complexities of our own making." - Dijkstra

For his vision of networking 30 years from now, Ken suggested that:

- IP addresses will still be in use

- but visible only at interfaces between different owners' infrastructures

- Network infrastructure might consist of access ASes + separate core networks operated by the "Big Five".

- Users might communicate via direct brain interfaces with AI systems.

Dirk Kutscher: Principled Approach to Network Programmability

I argued for a principled approach to network programmability that, based on more powerful network abstractions, could make networks easier to program, for humans and AI alike.

Previous work in SDN, with protocols such as OpenFlow and dataplane programming languages such as P4, has only scratched the surface of what could be possible. OpenFlow was a great first idea, but it was fundamentally constrained by the IP- and Ethernet-based abstractions that were built into it. It can be used to program some applications in that domain, such as firewalls and virtual networking, but the idea of continuous innovation has not really materialized.

Similarly, P4 was advertised as an enabler of new levels of dataplane programmability, but even simple systems such as NetCache have to go to considerable lengths to achieve minimal functionality for a proof of concept. Another frequently reported P4 problem is hardware heterogeneity, which means that universal programmability is not really possible. In my opinion, this raises questions about the applicability of current dataplane programming to in-network computing. A good example of a more productive application of P4 is the recent SIGCOMM paper on NetClone, which describes fast, scalable, and dynamic request cloning for microsecond-scale RPCs. Here, P4 is used as an accelerator for programming relatively simple functionality (protocol parsing, forwarding).

This may not be enough for future universal programmability, though. During the panel discussion, I drew an analogy to computer programming languages: we are now seeing the first programming languages and IDEs that are designed from the ground up with AI in mind. What would that mean for network programmability? What abstractions and APIs would we need?

In my opinion, we would have to take a step back and think about the intended functionality and the required observability for future (automated) network programmability that is really protocol-independent. This would then entail more work on:

- the fundamental forwarding service (informed by hardware constraints);

- the telemetry approach;

- suitable protocol semantics;

- APIs for applications and management; and

- new network emulation and debugging approaches (along the lines of "network digital twin" concepts).

Overall, I expect exciting new research in the direction of principled approaches to network programmability.

Jim Kurose: Open Research Infrastructures and Softwarization

Jim reminded us that the key reason Internet research flourished was the availability of open infrastructure with no incumbent providers initially. The infrastructure was owned by researchers, labs, and universities and allowed for a lot of experimentation.

Recently, this open infrastructure has been challenged by ossification, with the rise of production ISP services at scale and the emergence of closed ISPs, cellular carriers, and hyperscalers operating large portions of the network.

As an example of emerging environments that offer interesting opportunities for experiments and new developments, Jim mentioned private 4G/5G networks: licensed spectrum has created closed ecosystems, but private networks are open to researchers, creating opportunities for:

- innovation in private 5G networks, for example using Citizens Broadband Radio Service (CBRS) spectrum, which could enable innovation in open, deployed systems and a democratization of 5G+ networks and edge applications;

- testbeds, such as Platforms for Advanced Wireless Research (PAWR); and

- the integration of WiFi and 5G as link-layer edge RANs.

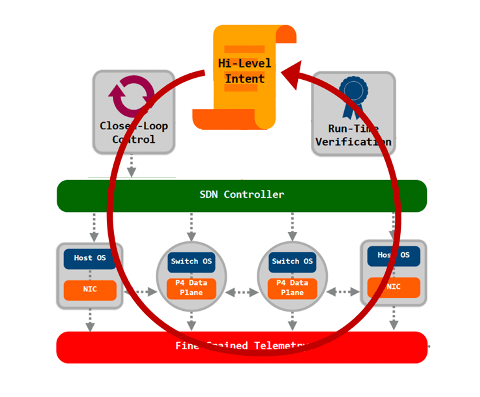

Jim also suggested further opportunities in softwarization and programmability, such as (formal) methods for logical correctness and configuration management, as well as programmability to add services beyond the "minimal viable service", such as closed-loop automatic control and management.

Finally, Jim mentioned opportunities in emerging networks such as LEO satellite networks, IoT, and home networks.