Archive for the ‘Talks’ Category

Invited Talk at FNDC: Connecting AI: Inter-Networking Challenges for Distributed Machine Learning

I gave a talk at the Future Network Development Conference (FNDC) in Nanjing on August 20th, 2025. The title of the talk was Connecting AI: Inter-Networking Challenges for Distributed Machine Learning, and I talked about our recent work on PacTrain, NetSenseML, and some new work on in-network aggregation.

PacTrain is a novel framework that accelerates distributed training by combining pruning with sparse gradient compression. Active pruning of the neural network makes the model weights and gradients sparse. By ensuring that all distributed training workers share global knowledge of the gradient sparsity, we can perform lightweight compressed communication without harming accuracy. We show that the PacTrain compression scheme achieves a near-optimal compression strategy while remaining compatible with the all-reduce primitive. Experimental evaluations show that PacTrain improves training throughput by 1.25× to 8.72× compared to state-of-the-art compression-enabled systems for representative vision and language model training tasks under bandwidth-constrained conditions.
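To illustrate why shared knowledge of the sparsity pattern keeps compression compatible with all-reduce, here is a minimal sketch. It is not the PacTrain implementation: the helper names (`pack`, `unpack`, `all_reduce_sum`), the mask, and the gradient values are all hypothetical, and the all-reduce is simulated in-process as an element-wise sum. The point is only that when every worker uses the same pruning mask, the packed vectors line up position by position, so they can be summed directly without exchanging indices.

```python
from typing import List


def pack(gradient: List[float], mask: List[bool]) -> List[float]:
    """Keep only the entries that survive pruning (mask is True)."""
    return [g for g, keep in zip(gradient, mask) if keep]


def unpack(packed: List[float], mask: List[bool]) -> List[float]:
    """Put packed values back in their original slots; pruned slots become 0.0."""
    values = iter(packed)
    return [next(values) if keep else 0.0 for keep in mask]


def all_reduce_sum(vectors: List[List[float]]) -> List[float]:
    """Stand-in for an all-reduce: element-wise sum across all workers."""
    return [sum(column) for column in zip(*vectors)]


# Hypothetical setup: 3 workers, 8 parameters, the SAME pruning mask everywhere.
mask = [True, False, False, True, False, True, False, False]
worker_gradients = [
    [0.5, 0.0, 0.0, -1.0, 0.0, 2.0, 0.0, 0.0],
    [1.5, 0.0, 0.0, 0.5, 0.0, -1.0, 0.0, 0.0],
    [-1.0, 0.0, 0.0, 0.5, 0.0, 1.0, 0.0, 0.0],
]

# Each worker sends 3 values instead of 8; no index lists are needed.
packed = [pack(g, mask) for g in worker_gradients]
reduced = unpack(all_reduce_sum(packed), mask)

print(len(packed[0]), "of", len(mask), "values sent per worker")
print(reduced)
```

In this toy setup the result of reducing the packed vectors is identical to reducing the full dense gradients, which is the property that makes a plain sum-based all-reduce usable on the compressed representation.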

NetSenseML is a novel network-adaptive distributed deep learning framework that dynamically adjusts quantization, pruning, and compression strategies in response to real-time network conditions. By actively monitoring network conditions, NetSenseML applies gradient compression only when network congestion negatively impacts convergence speed, thus effectively balancing data payload reduction and model accuracy preservation. Our approach ensures efficient resource usage by adapting reduction techniques based on current network conditions, leading to shorter convergence times and improved training efficiency. Experimental evaluations show that NetSenseML can improve training throughput by a factor of 1.55× to 9.84× compared to state-of-the-art compression-enabled systems for representative DDL training jobs in bandwidth-constrained conditions.
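The idea of compressing only when the network is the bottleneck can be sketched as a small decision function. This is a deliberately simplified, hypothetical policy and not the NetSenseML algorithm: the function name `choose_keep_ratio`, its parameters, and the rule "shrink the payload until transfer time matches compute time" are assumptions made for illustration.

```python
def choose_keep_ratio(
    gradient_bytes: int,
    bandwidth_bytes_per_s: float,
    compute_time_s: float,
    min_ratio: float = 0.01,
) -> float:
    """Return the fraction of the gradient to send (1.0 means no compression).

    Hypothetical policy: if sending the full gradient takes no longer than one
    compute step, communication is not the bottleneck, so send everything.
    Otherwise send just enough to fit into the compute time, but never less
    than min_ratio, to limit the impact on accuracy.
    """
    transfer_time_s = gradient_bytes / bandwidth_bytes_per_s
    if transfer_time_s <= compute_time_s:
        return 1.0
    return max(min_ratio, compute_time_s / transfer_time_s)


# Assumed workload: 100 MB of gradients, 0.1 s of computation per step.
for label, bandwidth in [("10 Gbit/s", 1.25e9), ("1 Gbit/s", 1.25e8), ("10 Mbit/s", 1.25e6)]:
    ratio = choose_keep_ratio(100_000_000, bandwidth, 0.1)
    print(f"{label}: keep {ratio:.3f} of the gradient")
```

With these assumed numbers, the fast link needs no compression, the medium link keeps roughly one eighth of the gradient, and the slowest link is clamped to the minimum ratio.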

Invited Talk at Airbus Workshop on Networking Systems

On October 10th, 2024, I was invited to give a talk at the 2nd Airbus Workshop on Networking Systems. The workshop largely discussed connected aircraft scenarios and technologies and featured talks on security and reliability, IoT sensor fusion, and future space and 6G network architectures.

My talk was on Connected Aircraft – Network Architectures and Technologies, and discussed relevant scenarios from my perspective, such as passenger services and new aircraft management applications. For the technology discussion, I focused on large-scale low-latency multimedia communication over the expected heterogeneous and dynamic aircraft connectivity networks and discussed current and emerging technologies such as Media over QUIC and ICN.

I also introduced the recently established Low-Altitude Systems and Economy Research Institute at HKUST(GZ), a cross-disciplinary research institute for the low-altitude domain (with similar but not identical requirements) and some of our recent projects such as Named Data Microverse.

Networked Systems for Distributed Machine Learning at Scale

On July 3rd, 2024, I gave a talk at the UCL/Huawei Joint Lab Workshop on "Building Better Protocols for Future Smart Networks" that took place on UCL's campus in London.

Talk Abstract

Large-scale distributed machine learning training networks are increasingly facing scaling problems with respect to FLOPS per deployed compute node. Communication bottlenecks can inhibit the effective utilization of expensive GPU resources. The root cause of these performance problems is not insufficient transmission speed or slow servers; it is the structure of the distributed computing and the communication characteristics it incurs. Large machine learning workloads typically provide relatively asymmetric, and sometimes centralized, communication structures, such as gradient aggregation and model update distribution. Even when training networks are less centralized, the amount of data that needs to be sent to aggregate several thousand input values through collective communication functions such as AllReduce can lead to Incast problems that overload network resources and servers. This talk discusses challenges and opportunities for developing in-network aggregation systems from a distributed computing and networked systems perspective.

Data-oriented, Decentralized, Daring: Opportunities and Research Challenges for an Information-Centric Web

Research and development in ICN has led to different communication patterns such as Sync and API implementations such as CNL. It is now time to think about how to leverage Information-Centric principles for providing better foundations for hypermedia applications in the future web. At NDNComm-2024 I talked about how ICN could possibly help, what could be fruitful future research directions, and why web3 and dweb are not the answer.

Material

AINTEC Panel on 6G Research

I had the pleasure of moderating a panel on 6G Research Challenges at AINTEC-2023. The panelists were Serge Fdida, Abhimanyu Gosain, Jim Kurose, and George Michaelson.

Opportunities and Challenges for Future Network Systems Design?

The panel was discussing opportunities and challenges for future network systems design and tried to shed some light on what 6G might actually mean and what interesting research could and should be done.

5G Hype vs Reality

While many people are speculating about possible 6G features, it is quite instructive to review the adoption of current 5G technology. The panel discussed this from different perspectives. It was noted that quite a few advanced 5G features, although specified, are not yet available, such as new core designs, low latency communication, positioning, and network slicing.

There may be different reasons for that. One reason that was mentioned is the lack of demand. 5G seems to be mostly used as a reasonably fast bitpipe, i.e., as an access technology for mobile broadband. Economically, this means that it is difficult to monetize the network beyond that.

The panel discussed whether WiFi and 5G will integrate as just two "localized" link-level wireless technologies at the Internet edge, or whether 5G will actually provide a global end-to-end network, interconnected to the Internet.

Centralization and new Deployment Models

Another interesting topic is the evolution of deployment models and the changing nature of service providers and infrastructure providers. Not only are hyperscalers providing most of the "over-the-top" functionality and infrastructure today, they are also increasingly providing the cloud infrastructure and telco software functions, such as Microsoft with their "Azure for Operators" platform. The panel also discussed the issues of commercial consolidation and concentration in this regard.

Key Enablers for 6G

We discussed potential key enablers for 6G, and the following topics were mentioned:

- AI/ML Native Interface

- New Spectrum Technologies: 7-24 GHz, 300GHz-1THz

- Networking as a Sensor: Shift from Radio KPI to system and service focused

- Communication-Compute-Data Centric

- Zero Trust Architecture (ZTA): Security and Trust

- Open Radio Access Networks

With respect to "Communication-Compute-Data-Centricity", we discussed whether it would be the mobile network infrastructure that would provide features in this direction, e.g., a better integration of computing and networking, or whether the network would just provide the access service, and computing etc. would continue being an application (also see my invited talk on computing in the network at AINTEC-2023). The panel expressed some preference for maintaining a separation of concerns, layering, and the end-to-end principle.

Another topic that was discussed was the continuing "softwarization" and the application of Software-Defined Networking (SDN) principles. Future systems may see some more management support for applications (and application-related infrastructure), and there is certainly a trend towards more autonomous management and the use of machine learning for that.

References

- Azure for operators

- Tim Wu; The Master Switch: The Rise and Fall of Information Empires; Columbia Law School; 2010

Computing in the Network – Lessons Learned and New Opportunities

The Internet is a distributed system that enables distributed computing applications, from client-server web applications to collaborative multi-media applications. The evolution of both compute server and network infrastructure platforms has fueled the development of new approaches for building more programmable networks and of application support functions in the network.

At the same time, new applications such as IoT data processing, distributed machine learning, decomposed application architectures such as Microservice and distributed computing frameworks introduce new opportunities for the development of more principled approaches towards Computing in the Network.

In my invited talk at AINTEC-2023, I reviewed some promising use cases, highlighted recent relevant research results and discussed several research challenges for conceiving Computing in the Network from an Internet perspective, for example discussing the meaning of "end-to-end communication" and "permissionless innovation" in the light of these new developments.

From "In-Network Computing"...

"In-Network Computing" is a popular but also relatively poorly defined term that comes up a lot in recent research studies. I discussed the different facets such as traditional networked computing, middlebox-like packet processing, active networking, programmable dataplane, Network Functions Virtualization, and Service Function Chaining as depicted in the figure below.

In general, we can distinguish two main directions:

- Computing on the Network: general distributed computing using Internet technologies for communication, such as the Web and related overlay networks such as CDNs.

- Middlebox-like packet processing: intercepting, manipulating, generating, and steering packets has been applied to production networks in data centers and telco networks, often as a performance enhancing approach.

What about Programmable Data Plane?

Programmable Data Plane approaches such as the P4 programming language are often used to implement certain elements of either of these two categories, for example, traffic steering, load balancing etc. There are some point solutions for more application-layer-oriented functionalities such as NetCache, support for distributed consensus protocols, support for distributed machine learning training etc., but these typically operate under very specific assumptions, and are often at odds with end-to-end semantics and security. One example of a productive use of Programmable Data Plane in my opinion was the SIGCOMM-2023 paper on NetClone: Fast, Scalable, and Dynamic Request Cloning for Microsecond-Scale RPCs by Gyuyeong Kim. In this work, programmable switches were used to implement request forwarding strategies based on relatively simple packet meta information and observed performance, i.e., without requiring application layer knowledge.

... To "Computing in the Network"

There are many relevant use cases of distributed computing that can benefit from (and urgently need) support from networking and where distributing processing, aggregation etc. with awareness of network topologies, current utilization etc. would make a real difference. We have earlier built such a system and called it Compute-First Networking: Distributed Computing meets ICN (see https://dirk-kutscher.info/publications/distributed-computing-icn/ for background).

I talked about relevant applications such as distributed stream processing, and distributed machine learning. Today, these systems are typically run on the network but could definitely benefit from a better support and from better awareness of the network – so I asked the question whether there is the possibility for a confluence of existing and emerging capabilities of modern hardware and the requirements of relevant distributed computing applications.

Questions I raised included:

- How can we conceive such a confluence?

- How can we support distributed computing without giving up layering and principles such as the end-to-end principle?

- What features do we need from transport protocols to support diverse use cases?

Distributed Machine Learning

Distributed machine learning, e.g., federated learning, is an application that is currently perceived as a major driver for in-network computing. Large-scale training networks are expected to enable higher degrees of parallelization and handling of larger model sizes. How would we run such workloads as distributed systems, within data centers but potentially also across the Internet?

It is important to understand the performance requirements of such systems. Initial systems were built with bespoke High-Performance Computing (HPC) architectures and communication technologies such as InfiniBand. Such systems used in-network aggregation functions and defined corresponding architectures such as SHArP.

Today's data center systems employ RDMA and RDMA over Converged Ethernet (RoCE) as a low-layer abstraction for efficient packet-based communication on layer 2, without addressing higher-layer transport and system design aspects.

Collective Communications

In parallel computing architectures, Message Passing Interface (MPI) is typically used to provide efficient and portable inter-process communication for high-performance computing. One of the concepts developed in MPI is Collective Communication, a set of bespoke data aggregation and distribution patterns for different data-oriented distributed computing scenarios, such as:

- Broadcasting, e.g., for distributing configuration data or common ML models

- Scattering: a single process sends distinct pieces of data to each process

- Gathering: one process collecting and combining data pieces from other processes

- All-to-all communications: every process sends data to every other process

- Reduction: collect data from all processes, aggregate and send result
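The semantics of these patterns can be made concrete with a toy in-memory model. This is only a sketch: it is not MPI and uses no real communication; a "process" is simply a position in a Python list, and all function names are chosen for illustration.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def broadcast(value: T, num_processes: int) -> List[T]:
    """The root's value ends up at every process."""
    return [value for _ in range(num_processes)]


def scatter(chunks_at_root: List[T]) -> List[T]:
    """The root holds one distinct chunk per process; process i receives chunk i."""
    return list(chunks_at_root)


def gather(local_values: List[T]) -> List[T]:
    """The root collects one value from every process, ordered by rank."""
    return list(local_values)


def all_to_all(send: List[List[T]]) -> List[List[T]]:
    """send[i][j] goes from process i to process j; result[j][i] is what j got from i."""
    return [list(received) for received in zip(*send)]


def reduce_to_root(local_values: List[T], op: Callable[[T, T], T]) -> T:
    """Combine the values of all processes with op; the root holds the result."""
    result = local_values[0]
    for value in local_values[1:]:
        result = op(result, value)
    return result


def all_reduce(local_values: List[T], op: Callable[[T, T], T]) -> List[T]:
    """A reduction whose result is delivered to every process."""
    return broadcast(reduce_to_root(local_values, op), len(local_values))


print(broadcast("model-v1", 3))
print(scatter(["shard-0", "shard-1", "shard-2"]))
print(all_to_all([[1, 2], [3, 4]]))
print(all_reduce([1, 2, 3, 4], lambda a, b: a + b))
```

In this model `all_to_all` is a transpose of the send matrix, and `all_reduce` is a reduction followed by a broadcast, which is one common way to think about how the patterns compose.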

Today's Collective Communication implementations implement these patterns for different underlying networks and inter-process facilities. For GPU-based Collective Communications in today's networks, ring-based communication is often applied, which leads to considerable inefficiencies with respect to communication overhead and idle times of the different processors. See this presentation from Tencent at the recent AIDC side meeting at IETF-118. Other implementations use peer-to-peer communication models.
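As background for the ring-based approach, the following is a minimal in-process simulation of the textbook ring all-reduce (a reduce-scatter phase followed by an all-gather phase). It is a sketch under simplifying assumptions, not the code of any particular library: each worker's vector has exactly one integer "chunk" per worker, and the function name and data are made up for illustration.

```python
from typing import List, Tuple


def ring_all_reduce(data: List[List[int]]) -> Tuple[List[List[int]], int]:
    """Sum the workers' vectors over a logical ring.

    data[i] is worker i's vector with one chunk per worker.
    Returns every worker's final vector and the number of sequential steps.
    """
    n = len(data)
    assert all(len(vector) == n for vector in data), "one chunk per worker"
    buf = [list(vector) for vector in data]
    steps = 0

    # Phase 1, reduce-scatter: in step s, worker i sends chunk (i - s) mod n
    # to its right neighbour, which adds it to its own copy of that chunk.
    for s in range(n - 1):
        sends = [(i, (i - s) % n, buf[i][(i - s) % n]) for i in range(n)]
        for src, chunk, value in sends:
            buf[(src + 1) % n][chunk] += value
        steps += 1
    # Now worker i holds the fully reduced chunk (i + 1) mod n.

    # Phase 2, all-gather: in step s, worker i forwards chunk (i + 1 - s) mod n
    # to its right neighbour, which overwrites its stale copy.
    for s in range(n - 1):
        sends = [(i, (i + 1 - s) % n, buf[i][(i + 1 - s) % n]) for i in range(n)]
        for src, chunk, value in sends:
            buf[(src + 1) % n][chunk] = value
        steps += 1

    return buf, steps


result, steps = ring_all_reduce([[1, 2, 3], [10, 20, 30], [100, 200, 300]])
print(result)
print("sequential steps:", steps)
```

The simulation makes one cost visible: the number of sequential communication steps is 2(n - 1), so it grows linearly with the number of workers, and every worker has to wait for its left neighbour in each step.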

Collective Communication in the Network

From a networking perspective, the question is how to map collective communication better to Internet technology-based networked systems, avoiding unnecessary duplication, providing typical transport protocol features such as reliability and congestion control, and enabling an optimal placement of corresponding aggregation functions.

This would incur a set of challenges such as:

- Transport

  - Reliability: underlying network lacks communication reliability

  - Application data units instead of packets

  - Blocking & non-blocking communication modes

  - Security (potentially)

- Multi-destination delivery

  - IP-Multicast possibly not the best fit

- Computing in the Network Framework

  - Generic operations as primitives (at least per application domain)

  - Stringent performance requirement

- Control, Optimizations, Management

  - Topology and utilization awareness

  - Scheduling communication and computation for optimal performance

We discussed these challenges in two recently submitted Internet Drafts on Transport for Collective Communications, and I discussed these issues in more detail during the talk.

Data-Oriented Collective Communications

I proposed the direction of data-oriented Collective Communication and discussed how concepts from Information-Centric distributed computing could possibly be employed to achieve efficient and practical multi-destination transport, reliability and congestion control, and flexible placement of aggregation functions with a name-based identity scheme.

Promising features would include:

- Data-oriented communication model

- Locator-less model conducive to data production and consumption at different places in the network (computing)

- Multi-destination delivery included

- In-network retransmission and caching could help with reliability and performance

However, I also mentioned some challenges:

- Receiver-driven transport results in polling – efficient enough?

- RDMA-like communication unexplored

- Security concept: data-oriented security good – unclear whether it can be afforded

- Exact scheduling may be at odds with current ICN system design – more work needed

In summary, this seems to be a rich field for future systems research. Distributed machine learning drives the development of new concepts for communication and computing. It clearly needs efficient multi-destination communication and an efficient mapping of MPI-inspired Collective Communication. The current abstractions do not fit well, and pure IP packet level communication is too limited. Connection-oriented transport seems to be at odds with the communication semantics, which makes data-oriented communication attractive. Such an approach could work with a name-based approach, i.e., without addresses, which is conducive to data production and consumption. Certainly, the challenging performance requirements call for more research and possibly evolution of current ICN protocols.

References

- [CFN-ICN] Compute-First Networking: Distributed Computing meets ICN

- [DISTCOMPICN] Distributed Computing in ICN

- [IETFCollectiveCommunications] Collective Communication: Better Network Abstractions for AI

- [IETF118AIDC] Side meeting at IETF-118 on AI in Data Centers

- [IETF118CC] Side meeting at IETF-118 on Collective Communications

- [NETCLONE] NetClone: Fast, Scalable, and Dynamic Request Cloning for Microsecond-Scale RPCs

- [RoCE] RDMA over Ethernet (RoCE)

- [SHARP] Richard L. Graham, Devendar Bureddy, Pak Lui, Hal Rosenstock, Gilad Shainer, Gil Bloch, Dror Goldenberg, Mike Dubman, Sasha Kotchubievsky, Vladimir Koushnir, Lion Levi, Alex Margolin, Tamir Ronen, Alexander Shpiner, Oded Wertheim, and Eitan Zahavi. 2016. Scalable hierarchical aggregation protocol (SHArP): a hardware architecture for efficient data reduction. In Proceedings of the First Workshop on Optimization of Communication in HPC (COM-HPC '16). IEEE Press, 1–10.

Network Abstractions for Continuous Innovation

In a joint panel at ACM ICN-2023 and IEEE ICNP-2023 in Reykjavik, Ken Calvert, Jim Kurose, Lixia Zhang, and myself discussed future network abstractions. The panel was moderated by Dave Oran. This was one of the more interesting and interactive panel sessions I participated in, so I am providing a summary here.

Since the Internet's initial rollout ~40 years ago, not only has its global connectivity brought fundamental changes to society and daily life, but its protocol suite and implementations have also gone through many iterations of changes, with SDN, NFV, and programmability among other changes over the last decade. This panel looks into the next decade of network research by asking a set of questions regarding where the future direction lies to enable continued innovation.

Opportunities and Challenges for Future Network Innovations

Lixia Zhang: Rethinking Internet Architecture Fundamentals

Lixia Zhang (UCLA), quoting Einstein, said that the formulation of the problem is often more essential than the solution and pointed at the complexities of today's protocol stacks that are apparently needed to achieve desired functionality. For example, Lixia mentioned RFC 9298 on proxying UDP in HTTP, specifically on tunneling UDP to a server acting as a UDP-specific proxy over HTTP. UDP over IP was once conceived as a minimal message-oriented communication service that was intended for DNS and interactive real-time communication. Due to its push-based communication model, it can be used with minimal effort for useful but also harmful applications, including large-scale DDoS attacks. Proxying UDP over HTTP addresses this and other concerns by providing a secure channel to a server in a web context, so that the server can authorize tunnel endpoints, and so that the UDP communication is congestion controlled by the underlying transport protocol (TCP or QUIC). This specification can be seen as a work-around: sending unsolicited (and unauthenticated) messages over the Internet is a major problem in today's Internet. There is no general approach for authenticating such messages and no concept for trust in peer identities. Instead of analyzing the root cause of such problems, the Internet communities (and the dominant players in that space) prefer to come up with (highly inefficient) workarounds.

This problem was discussed more generally by Oliver Spatscheck of AT&T Labs in his 2013 article titled Layers of Success, where he discussed the (actually deployed) excessive layering in production networks, for example mobile communication networks, where regular Internet traffic is routinely tunneled over GTP/UDP/IP/MPLS:

The main issue with layering is that layers hide information from each other. We could see this as a benefit, because it reduces the complexities involved in adding more layers, thus reducing the cost of introducing more services. However, hiding information can lead to complex and dynamic layer interactions that hamper the end-to-end system’s reliability and are extremely difficult if not impossible to debug and operate. So, much of the savings achieved when introducing new services is being spent operating them reliably.

According to Lixia, the excessive layering stems from more fundamental problems with today's network architecture, notably the lack of identity and trust in the core Internet protocols and the lack of functionality in the forwarding system – leading to significant problems today as exemplified by recent DDoS attacks. Quoting Einstein again, she said that we cannot solve problems by using the same kind of thinking we used when we created them, calling for a more fundamental redesign based on information-centric networking principles.

Ken Calvert: Domain-specific Networking

Ken Calvert (University of Kentucky) provided a retrospective of networking research and looked at selected papers published at the first IEEE ICNP conference in 1993. According to Ken, the dominant theme at that time was How to design, build, and analyze protocols, for example as discussed in his 1993 ICNP paper titled Beyond layering: modularity considerations for protocol architectures.

Ken offered a set of challenges and opportunities for future networking research, such as:

- Domain-specific networking à la Ex uno pluria, a 2018 CCR editorial discussing:

  - infrastructure ossification;

  - lack of service innovation; and

  - a fragmentation into "ManyNets" that could re-create a service-infrastructure innovation cycle.

- Incentives and "money flow"

  - Can we escape from the advertising-driven Internet app ecosystem? Should we?

- Wide-area multicast (many-many) service

  - Building block for building distributed applications?

- Inter-AS trust relationships

  - Ossification of the Inter-AS interface – cannot be solved by a protocol!

- Impact ⇐ Applications ⇐ Business opportunities ($)

  - What user problem cannot be solved today?

- "The core challenge of CS ... is a conceptual one, viz., what (abstract) mechanisms we can conceive without getting lost in the complexities of our own making." - Dijkstra

For his vision for networking in 30 years, Ken suggested that:

- IP addresses will still be in use

  - but visible only at interfaces between different owners' infrastructures

- Network infrastructure might consist of access ASes + separate core networks operated by the "Big Five".

- Users might communicate via direct brain interfaces with AI systems.

Dirk Kutscher: Principled Approach to Network Programmability

I offered the perspective of introducing a principled approach to programmability that could provide better programmability (for humans and AI), based on more powerful network abstractions.

Previous work in SDN with protocols such as OpenFlow and dataplane programming languages such as P4 have only scratched the surface of what could be possible. OpenFlow was a great first idea, but it was fundamentally constrained by the IP and Ethernet-based abstractions that were built into it. It can be used for programming some applications in that domain, such as firewalls, virtual networking etc., but the idea of continuous innovation has not really materialized.

Similarly, P4 was advertised as an enabler for new levels of dataplane programmability, but even simple systems such as NetCache have to go to considerable lengths to achieve minimal functionality for a proof-of-concept. Another P4 problem that is often reported is hardware heterogeneity, which means that universal programmability is not really possible. In my opinion, this raises some questions with respect to the applicability of current dataplane programming for in-network computing. A good example of a more productive application of P4 is the recent SIGCOMM paper on NetClone, which describes fast, scalable, and dynamic request cloning for microsecond-scale RPCs. Here P4 is used as an accelerator for programming relatively simple functionality (protocol parsing, forwarding).

This may not be enough for future universal programmability though. During the panel discussion, I drew an analogy to computer programming languages. We are now seeing the first programming languages and IDEs that are designed from the ground up for better AI. What would that mean for network programmability? What abstractions and APIs would we need?

In my opinion, we would have to take a step back and think about the intended functionality and the required observability for future (automated) network programmability that is really protocol-independent. This would then entail more work on:

- the fundamental forwarding service (informed by hardware constraints);

- the telemetry approach;

- suitable protocol semantics;

- APIs for applications and management; and

- new network emulation & debugging approaches (along the lines of "network digital twin" concepts).

Overall, I am expecting new exciting research in the direction of principled approaches to network programmability.

Jim Kurose: Open Research Infrastructures and Softwarization

Jim reminded us that the key reason Internet research flourished was the availability of open infrastructure with no incumbent providers initially. The infrastructure was owned by researchers, labs, and universities and allowed for a lot of experimentation.

This open infrastructure has recently been challenged by ossification with the rise of production ISP services at scale, and the emergence of closed ISPs, cellular carriers, and hyperscalers operating large portions of the network.

As an example for emerging environments that offer interesting opportunities for experiments and new developments, Jim mentioned 4G/5G private networks, i.e., licensed spectrum created closed ecosystems – but open to researchers, creating opportunities for:

- innovation in private 5G networks such as Citizens Broadband Radio Service (CBRS) that could enable innovation in open, deployed systems and a democratization of 5G+ networks and edge applications;

- testbeds, such as Platforms for Advanced Wireless Research (PAWR); and

- the integration of WiFi, 5G as link-layer edge RANs.

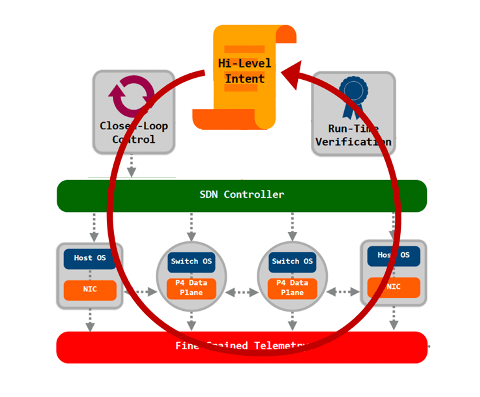

Jim was also suggesting further opportunities in softwarization and programmability, such as (formal) methods for logical correctness and configuration management, as well as programmability to add services beyond the "minimal viable service", such as closed loop automatic control and management.

Finally Jim also mentioned opportunities in emerging new networks such as LEOs, IoT and home networks.

Connecting the Metaverse: In-Network Computing as Infrastructure

Ubiquitous virtual reality environments such as Metaverse have been described as the future mobile Internet, alluding to their expected profound impact on the way information is retrieved, processed, rendered, and consumed. While detailed designs are still emerging, early visions such as Keiichi Matsuda’s Hyper-Reality project have already outlined usage models and expectations on connectivity and data availability to enable rich interactions with the physical world and blending it with dynamically computed artefacts.

Metaverse systems will challenge traditional client-server-inspired web models, centralized security trust anchors and server-style distributed computing. The new network will be based on dynamic interactions between humans, the physical world, and computing processes in an edge-to-cloud continuum. This talk will outline the associated challenges, review recent work in distributed computing and suggest some approaches for evolving networking and computing to enable Metaverse – not as a dystopian vision but as an opportunity for societies and their citizens.

Re-Thinking LoRaWAN

Low-power, long-range radio systems such as LoRaWAN represent one of the few remaining networked system domains that still feature a complete vertical stack with special link- and network layer designs independent of IP. Similar to local IoT systems for low-power networks (LoWPANs), the main service of these systems is to make data available at minimal energy consumption, but over longer distances. LoRaWAN (the system that comprises the LoRa PHY and MAC) supports bi-directional communication, if the IoT device has the energy budget. Application developers interface with the system using a centralized server that terminates the LoRaWAN protocol and makes data available on the Internet.

While LoRaWAN applications are typically providing access to named data, the existing LoRaWAN stack does not support this way of communicating. LoRaWAN is device-centric and is generally designed as a device-to-server messaging system – with centralized servers that serve as rendezvous point for accessing sensor data. The current design imposes rigid constraints and does not facilitate accessing named data natively, which results in many point solutions and dependencies on central server instances.

In our demo paper & presentation at ACM ICN-2020, we are therefore describing how Information-Centric Networking could provide a more natural communication style for LoRa applications and how ICN could help to conceive LoRa networks in a more distributed fashion compared to today's mainstream LoRaWAN deployments. For LoWPANs (e.g., 802.15.4 networks), ICN has already proven to be an attractive and viable alternative to legacy integrated special purpose stacks – we believe that LoRa communication provides similar opportunities.

Watch Peter Kietzmann's talk about it here:

Managing Radio Networks in an Encrypted World

I attended last week's IAB/GSMA Workshop on Managing Radio Networks in an Encrypted World (MaRNEW).

The motivation for this workshop was the increasing trend of applying transport layer end-to-end encryption in major web applications such as Google services, YouTube, Netflix, Facebook and others. This trend will likely increase due to further deployment of HTTP/2, for which client implementations today try to set up TLS connections by default.

In mobile networks, traffic management but also additional services/functions have traditionally relied on being able to leverage knowledge about application types and application specifics. Examples of such functions include policing/prioritization, optimized scheduling, caching, filtering, but also tracking, ad-insertion etc. In addition to functions that operators want to apply, there are also regulation requirements (depending on local legislation) for filtering, legal interception etc. that would become more difficult in the presence of ubiquitous encryption.

At the MaRNEW workshop, leading experts from network operators, vendors, application service providers, CDN providers and academic institutions discussed the impact of ubiquitous encryption as well as ideas for enabling an effective collaboration between the network, applications and users to enable optimal performance and resource efficiency.

In particular, the workshop addressed the following topics:

- Understanding the bandwidth optimization use cases particular to radio networks;

- Understanding existing approaches and how these do not work with encrypted traffic;

- Understanding reasons why the Internet has not standardised support for legal interception and why mobile networks have;

- Determining how to match traffic types with bandwidth optimization methods;

- Discussing minimal information to be shared to manage networks but ensure user security and privacy;

- Developing new bandwidth optimization techniques and protocols within these new constraints;

- Discussing the appropriate network layer(s) for each management function; and

- Cooperative methods of bandwidth optimization and issues associated with these.

Encryption: Technological and Business Aspects

It is not a secret that there are different aspects for discussing end-to-end encryption in public networks. Obviously, encryption helps with user privacy, and with the background of recent and current revelations of privacy breaches through pervasive monitoring, it has become common agreement that more (easily deployable) encryption would be useful to overcome this.

There is, however, also the business perspective: the Internet and specifically the ecosystem of mobile communication and service provision has multiple stakeholders, each with their particular interests: network operators want to provide a useful service in an economical way and may have an interest in enhancing the overall service quality through various technical measures. Application service providers want their particular service to perform well over a range of different networks. Network equipment vendors have their product roadmaps and network architecture preferences etc.

Finally, there are the actual users of the system who have an interest in good quality of experience, cost-efficiency -- and privacy. Privacy is not only a concern with respect to (illegal) pervasive monitoring by agencies, but also with respect to maintaining anonymity and confidentiality towards network and service providers. For many applications, user profiles, user-generated data etc. are also a key business asset -- so there is a strong interest by different players to either get access to that data -- or (depending on the nature of a player) to keep other players from accessing it -- through encryption.

The MaRNEW workshop focused on the technological discussion.

Impact of Encryption

During the discussion, the following main impacts of ubiquitous encryption on mobile networks were identified:

- Traditional ways of identifying and classifying network traffic (DPI) become more costly and potentially infeasible.

- Traditional traffic management systems have relied on such classification for different purposes: optimizing resource usage in access networks according to operator policies, forwarding of traffic through optimizers, caches etc., as well as filtering. Those approaches and the actual requirements behind them need to be revisited.

- Content and service provisioning in both mobile and fixed networks today relies heavily on CDN and in-network application functions. In addition, new approaches such as Mobile Edge Computing may shift more of such functions to access networks. The motivation is to provide better performance and cost efficiency through offloading networks (CDN cache hits) and through reducing latency and improving transport protocol performance (local control loops, reduced RTT to caches). Introducing more and more end-to-end encryption makes it impossible for operators to provide any application (or CDN-provider)-independent optimization functions. The alternative of running individual instances for each individual CDN provider does not seem promising. It could also be a major roadblock for future network and application innovation -- because each of those individual functions might require upgrading to introduce in-network support for it.

Way Forward

(Copyright 2015 NEC)

At the workshop, different solutions were discussed.

- First, it was agreed that the actual impact needs to be understood better and ought to be quantified. For example, assuming that some knowledge about application types (or corresponding service quality expectations) could be leveraged by base stations for more efficient transmission scheduling (e.g., by delaying packets of non-latency-sensitive flows or by operating multiple queues for different flow types), networks should at least be able to obtain corresponding hints from senders. However, the actual impact and potential benefits have to be demonstrated. Operators will work on that issue.

- The (Internet) transport protocol community has made significant progress in recent years on several fronts: Active Queue Management (AQM) schemes such as fq_codel and PIE have been demonstrated to improve load balancing and reduce latency in router queues. Moreover, transport protocol research has led to promising results (for example PCC -- Performance-oriented Congestion Control). It was suggested that those mechanisms should be implemented and deployed where possible.

- Several options for Cooperative Traffic Management have been discussed. For example, this could include exchanging certain information between the network and senders/receivers. The network could inform endpoints better about congestion and non-congestion-induced problems (for example in an extended ECN fashion), or endpoints could inform the network about relevant meta information (application type, QoS requirements etc.). The latter could leverage existing technologies such as DiffServ. Potentially, it would be sufficient to distinguish delay-sensitive flows (e.g., for interactive real-time) and delay-tolerant flows (file download etc.). One interesting question is how endpoints would be incentivized to use such signaling correctly and what corresponding APIs would look like.

- Overcoming the general limitations of connection-based security and its tendency to require application-specific (or CDN-provider-specific) in-network functions could require a more fundamental rethinking of network architecture and protocol layering. For example, Information-Centric Networking (ICN) would leverage object-security (authentication, encryption), hence enabling the network to implement functions such as caching, local transport strategies etc. in an application-independent manner. This could be of particular relevance for 5G networks where a higher level of dynamicity in the creation and deployment of new OTT services is expected.
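As a minimal sketch of the endpoint-to-network signaling mentioned in the list above, an application could mark its own packets with a DiffServ code point, which stays visible in the IP header even when the payload is encrypted. The two-class mapping and the helper names are assumptions made for illustration; `socket.IP_TOS` is the standard socket option on platforms that support it, and whether a network honors the marking is a matter of operator policy.

```python
import socket

# Illustrative two-class mapping (an assumption, not a workshop outcome):
# EF (46) for delay-sensitive traffic, default (0) for delay-tolerant traffic.
DSCP_BY_CLASS = {"delay-sensitive": 46, "delay-tolerant": 0}


def dscp_to_tos(dscp: int) -> int:
    """Place a 6-bit DSCP value in the upper bits of the former IPv4 TOS byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must fit in 6 bits")
    return dscp << 2  # the two low-order bits are reserved for ECN


def open_marked_udp_socket(flow_class: str) -> socket.socket:
    """Create a UDP socket whose outgoing IPv4 packets carry the class's DSCP."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS,
                    dscp_to_tos(DSCP_BY_CLASS[flow_class]))
    return sock
```

The sketch does nothing about the incentive question raised above: nothing stops an endpoint from marking everything as delay-sensitive.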

For the discussion of such solutions, I (together with several colleagues) have made two contributions to the workshop: 1) Enabling Traffic Management without DPI, and 2) Maintaining Efficiency and Privacy in Mobile Networks through Information-Centric Networking.

Enabling Traffic Management without DPI

- Mirja Kühlewind, Dirk Kutscher, Brian Trammell; Enabling Traffic Management without DPI; IAB/GSMA Workshop on Managing Radio Networks in an Encrypted World (MaRNEW); September 2015

Is DPI really needed for traffic management in mobile networks? Our position is “no”. Traffic management is usually realized through relatively simple mechanisms like rate shaping, prioritization, and dropping packets. Compared to these mechanisms, the semantics of applications that can be exposed through DPI are much richer; traffic classification anyway maps these semantics down to a simple set of categories.

The question then arises whether operators are really helped by brittle, insecure and expensive mechanisms for gaining high-fidelity information when traffic management only needs coarse traffic information, or whether simple signaling would suffice for traffic classification for mobile network management purposes.

Obviously, when relying on endpoints to signal information about the underlying application which may be used to change the network’s treatment of that application’s traffic, questions of trust arise: how can the network be sure the endpoints are being honest, and prevent endpoints from gaming the system to their advantage (and the disadvantage of others)? Can these signaling approaches be used as an attack vector? Here the approach is to define the vocabulary of the signaling protocol to properly incentivize honest cooperation, while allowing the network to verify this cooperation.

We discuss two application-independent approaches for traffic management that are based on network-compatible metrics: ConEx Policing and low latency support with SPUD.

Congestion Exposure (ConEx) is a mechanism that enables senders to inform the network about previously encountered congestion in flows, thus enabling senders and network infrastructure to respond to congestion based on operator policies. This information is provided in the IP header and can still be accessed even if the payload is encrypted. ConEx information is auditable by comparing the congestion level at network egress to the ConEx signal, which incentivizes the sender to state its congestion contribution correctly.

Using ConEx would allow for a bulk packet traffic management system that does not have to consider application classes. Instead, with ConEx, accurate downstream path information on incipient congestion is visible to ingress network operators. This information can be used to base traffic management on the actual current cost (which is the contribution to congestion of each flow) and enable operators to apply congestion-based policing/accounting depending on their preference and independent of application characteristics. Such traffic management would be simpler, more robust (no real-time flow application type identification required, no static configuration of application classes) and provide better performance, as decisions can be taken based on the actual cost contribution at each point in time.
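To make the idea of congestion-based policing more concrete, here is a minimal sketch of an ingress policer that meters congestion volume instead of raw volume. It is an illustration under stated assumptions, not the mechanism from our paper: the class name, the parameters, the `conex_marked` flag and the audit tolerance are all hypothetical, and a real ConEx deployment would read the marking from the IP header.

```python
from dataclasses import dataclass


@dataclass
class CongestionPolicer:
    """Token bucket that meters congestion volume rather than raw volume."""
    fill_rate: float        # allowed congestion volume, bytes per second
    depth: float            # maximum burst of congestion volume, bytes
    tokens: float = 0.0
    last_time: float = 0.0

    def __post_init__(self) -> None:
        self.tokens = self.depth  # start with a full allowance

    def admit(self, now: float, size: int, conex_marked: bool) -> bool:
        """Return True to forward the packet, False to drop it."""
        elapsed = max(0.0, now - self.last_time)
        self.tokens = min(self.depth, self.tokens + elapsed * self.fill_rate)
        self.last_time = now
        if self.tokens <= 0:
            return False  # allowance exhausted: police this sender
        if conex_marked:
            # Only packets that declare congestion consume the allowance.
            self.tokens -= size
        return True


def audit_ok(declared_bytes: int, observed_bytes: int,
             tolerance: float = 0.05) -> bool:
    """Egress check: the sender must not understate the congestion it causes."""
    return declared_bytes >= observed_bytes * (1.0 - tolerance)
```

A sender that causes little congestion is never limited, regardless of how much data it sends or which application it runs. The egress audit is what gives senders a reason to declare their congestion contribution honestly.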

The Substrate Protocol for User Datagrams (SPUD) is a new approach to selective information exposure designed to support transport evolution. SPUD is realized as a shim between UDP and an (encrypted) transport protocol. The basic SPUD protocol provides minimal sub-transport functionality by grouping packets into tubes and signaling the start and end of a tube.

This will assist middleboxes in state setup and teardown along the path. Further, SPUD provides an extensible signaling mechanism based on a type-value encoding for associating properties with individual packets or all packets in a tube. The SPUD protocol can be used to signal low latency requirements from an endpoint to the network, or expose the existence of support for such services from the network to the endpoint. Therefore we propose to provide four SPUD signals: a latency sensitivity flag, a signal to yield to another tube, an application preference for a maximum single queue delay, and a facility to discover the maximum possible single queue length along the path.

Based on the latency-sensitivity flag a network operator can implement an additional service (as compared to today’s best effort service) that uses smaller queues and/or different AQM parameters without changing the service that is provided today. Signaling of lower queue priority or maximum single hop delay can further be used to preferentially drop packets of the same sender or within one flow. Information about expected queuing delays on the path can be used for buffer configuration at the endpoints.
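The following sketch shows how an output port could use such a flag to offer a low-latency service next to today's best effort service. It does not model the SPUD wire format: the `latency_sensitive` field is a hypothetical stand-in for the signal, and the queue limits and the alternating scheduler are assumptions chosen to keep the example short.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Packet:
    tube_id: int
    payload: bytes
    latency_sensitive: bool = False  # hypothetical stand-in for the SPUD flag


@dataclass
class TwoQueuePort:
    """Output port with a short low-latency queue next to the usual queue."""
    low_latency_limit: int = 8      # packets; kept small to bound queuing delay
    best_effort_limit: int = 256    # packets; unchanged best effort service
    low_latency: deque = field(default_factory=deque)
    best_effort: deque = field(default_factory=deque)
    serve_low_next: bool = True

    def enqueue(self, pkt: Packet) -> bool:
        """Queue the packet according to its flag; return False on tail drop."""
        if pkt.latency_sensitive:
            queue, limit = self.low_latency, self.low_latency_limit
        else:
            queue, limit = self.best_effort, self.best_effort_limit
        if len(queue) >= limit:
            return False
        queue.append(pkt)
        return True

    def dequeue(self) -> Optional[Packet]:
        """Alternate between the queues so that neither starves the other."""
        order = [self.low_latency, self.best_effort]
        if not self.serve_low_next:
            order.reverse()
        self.serve_low_next = not self.serve_low_next
        for queue in order:
            if queue:
                return queue.popleft()
        return None
```

Because the low-latency queue is short, a flow that sets the flag trades lower delay for a higher chance of loss under bursts, so setting it does not simply buy more capacity.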

The proposal is not intended as a blueprint for immediate implementation -- but it demonstrates how cooperative traffic management could be implemented. In our view, cooperative traffic management requires a solid understanding of the interactions with transport layer and the corresponding performance impacts/improvements.

Maintaining Efficiency and Privacy in Mobile Networks through Information-Centric Networking

- Dirk Kutscher, Giovanna Carofiglio, Luca Muscariello, Paul Polakos; Maintaining Efficiency and Privacy in Mobile Networks through Information-Centric Networking; IAB/GSMA Workshop on Managing Radio Networks in an Encrypted World (MaRNEW); September 2015

We present a solution to overcome the impasse of deploying confidentiality at the cost of breaking most current network traffic engineering in mobile networks. Our proposition is based on Information-Centric Networking (ICN), which is a data-centric network architecture that gracefully incorporates security and traffic optimization.

Content-based security instead of connection-based security is the foundation of the Information-Centric Networking (ICN) architecture. In ICN, we provide a network service that directly implements the desired information-access abstraction. The network forwards requests for named data and corresponding responses containing the data. The name can be cryptographically bound to the data for ascertaining authenticity. This enables the network to replicate data objects in arbitrary locations, thus enabling ubiquitous caching. Object data can also be encrypted for user privacy, leaving other network-relevant information such as the name intact – thus maintaining options for traffic management, policing etc. The performance gains of having ICN in the mobile backhaul have been evaluated experimentally (see paper). ICN incorporates these ideas into a novel network layer providing all of the mentioned objectives without using man-in-the-middle-like solutions.

ICN secures data itself by requiring producers to cryptographically sign every data packet: the signature constitutes the integrity meta-data. The data is uniquely identified by a name that is bound to the data via the signature. The producer’s public key to implement signature verification can be obtained by using the KeyLocator field which can be the name of the data containing the key of the producer. Authentication is implemented via the producer’s key that makes use of a trust model, e.g. PKI, Web-of-Trust that can be extended using key chaining to delegate trust to different sub-namespaces (for hierarchical naming). Confidentiality is obtained by encryption of the data payload using the producer’s key. Notice that authenticity, integrity and confidentiality are independent features.

Once data is published by the producer, it can be stored in any location without affecting the security properties of the data, which are location independent. Inter-networking of encrypted data is included by design in ICN, and in-network caching is always possible with or without confidentiality. Authenticity might not be necessary in many cases, so the authentication of the identity of the producer is optional. It is not mandatory either to verify the integrity of the data by verification of the signature. It is important to remark that ICN disentangles authenticity, privacy and integrity so that they can be handled in different ways and without the interaction of end-hosts.

TLS provides web security by encrypting a layer 4 connection between two hosts. Authenticity is provided by a trust infrastructure (certification authorities and a public key infrastructure) that authenticates the web server, and confidentiality by a symmetric cipher on the two endpoints based on a negotiated key. In the presence of TLS, many networking operations become infeasible: filtering, caching, acceleration, trans-coding.
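A small sketch can illustrate why location-independent security makes caching possible anywhere. Note that it swaps in a simpler technique than the one described above: instead of producer signatures and a KeyLocator, the name carries a SHA-256 digest of the content, which binds name and data without any key. The name layout and the `Cache` class are hypothetical, and the content may just as well be an encrypted payload that the cache cannot read.

```python
import hashlib
from typing import Dict, Optional


def make_name(prefix: str, content: bytes) -> str:
    """Bind a name to its content by appending the SHA-256 digest."""
    return f"{prefix}/sha256={hashlib.sha256(content).hexdigest()}"


def verify(name: str, content: bytes) -> bool:
    """Check that the content matches the digest carried in the name."""
    _, _, digest = name.rpartition("/sha256=")
    return hashlib.sha256(content).hexdigest() == digest


class Cache:
    """Any node can store and serve objects; integrity travels with the name."""

    def __init__(self) -> None:
        self._store: Dict[str, bytes] = {}

    def put(self, name: str, content: bytes) -> bool:
        """Store the object only if it matches its name."""
        if not verify(name, content):
            return False
        self._store[name] = content
        return True

    def get(self, name: str) -> Optional[bytes]:
        return self._store.get(name)
```

A consumer can run the same `verify` check on whatever copy it receives, so it does not need to trust the cache that served it.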

ICN takes a radically different approach to guarantee confidentiality, authenticity and integrity by embedding them into a redefined network layer. Indeed, ICN builds on the abstraction of data requested, accessed, cached and forwarded by name: the network forwards requests coming from the consumer for named data and routes back data packets on the identical reverse path (symmetric routing).
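The request/response forwarding described above can be sketched with a table of pending requests, in the style of the pending-interest tables used in CCN/NDN designs. This is a toy model under assumptions: faces are plain integers, there is a single implicit upstream, and the class and method names are made up for the example.

```python
from typing import Dict, List, Set


class Forwarder:
    """Toy ICN node with a content store and a table of pending requests."""

    def __init__(self) -> None:
        self.content_store: Dict[str, bytes] = {}
        self.pending: Dict[str, Set[int]] = {}

    def on_request(self, name: str, in_face: int) -> str:
        """Decide what to do with a request arriving on in_face."""
        if name in self.content_store:
            return "reply-from-cache"
        first_request = name not in self.pending
        # Remember where the request came from so data can retrace the path.
        self.pending.setdefault(name, set()).add(in_face)
        return "forward-upstream" if first_request else "aggregate"

    def on_data(self, name: str, data: bytes) -> List[int]:
        """Return the faces on which the data has to be sent back."""
        faces = sorted(self.pending.pop(name, set()))
        if faces:
            # Only solicited data is cached and forwarded.
            self.content_store[name] = data
        return faces
```

Two requests for the same name result in a single upstream request, and the returning data is copied to both requesting faces. This is one way to see how multi-point delivery and caching follow from the forwarding model itself.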

The ICN communication model allows network nodes between a web server and a web client to operate as forwarding and storage functions to implement various inter-networking functionalities like caching or load balancing without relaxing any security feature. As a fully fledged data-centric network architecture, ICN incorporates mobility, storage, security and multi-point communication by design.