Archive for the ‘ACM ICN’ tag

Network Abstractions for Continuous Innovation

In a joint panel at ACM ICN-2023 and IEEE ICNP-2023 in Reykjavik, Ken Calvert, Jim Kurose, Lixia Zhang, and myself discussed future network abstractions. The panel was moderated by Dave Oran. This was one of the more interesting and interactive panel sessions I participated in, so I am providing a summary here.

Since the Internet's initial rollout ~40 years ago, not only its global connectivity has brought fundamental changes to society and daily life, but its protocol suite and implementations have also gone through many iterations of changes, with SDN, NFV, and programmability among other changes over the last decade. This panel looks into next decade of network research by asking a set of questions regarding where lies the future direction to enable continued innovations.

Opportunities and Challenges for Future Network Innovations

Lixia Zhang: Rethinking Internet Architecture Fundamentals

Lixia Zhang (UCLA), quoting Einstein, said that the formulation of the problem is often more essential than the solution and pointed at the complexities of today's protocols stacks that are apparently needed to achieve desired functionality. For example, Lixia mentioned RFC 9298 on proxying UDP in HTTP, specifically on tunneling UDP to a server acting as a UDP-specific proxy over HTTP. UDP over IP was once conceived as a minial message-oriented communication service that was intended for DNS and interactive real-time communication. Due to its push-based communication model, it can be used with minimal effort for useful but also harmful application, including large-scale DDOS attacks. Proxing UDP over HTTP addresses this and other concerns, by providing a secure channel to a server in a web context, so that the server can authorize tunnel endpoints, and so that the UDP communication is congestion controlled by the underlying transport protocol (TCP or QUIC). This specification can be seen as a work-around: sending unsolicted (and un-authenticated) messages over the Internet is a major problem in today's Internet. There is no general approach for authenticating such messages and no concept for trust in peer identities. Instead of analyzing the root cause of such problems, the Internet communities (and the dominant players in that space) prefer to come up with (highly inefficient) workarounds.

This problem was discussed more generally by Oliver Spatscheck of AT&T Labs in his 2013 article titled Layers of Success, where he discussed the (actually deployed) excessive layering in production networks, for example mobile communication networks, where regular Internet traffic is routinely tunneled over GTP/UDP/IP/MPLS:

The main issue with layering is that layers hide information from each other. We could see this as a benefit, because it reduces the complexities involved in adding more layers, thus reducing the cost of introducing more services. However, hiding information can lead to complex and dynamic layer interactions that hamper the end-to-end system’s reliability and are extremely difficult if not impossible to debug and operate. So, much of the savings achieved when introducing new services is being spent operating them reliably.

According to Lixia, the excessive layering stems from more fundamental problems with today's network architecture, notably the lack of identity and trust in the core Internet protocols and the lack of functionality in the forwarding system – leading to significant problems today as exemplied by recent DDoS attacks. Quoting Einstein again, she said that we cannot solve problems by using the same kind of thinking we used when we created them, calling for a more fundamental redesign based on information-centric networking principles.

Ken Calvert: Domain-specific Networking

Ken Calvert (University of Kentucky) provided a retrospective of networking research and looked at selected papers published at the first IEEE ICNP conference in 1993. According to Ken, the dominant theme at that time was How to design, build, and analyze protocols, for example as discussed in his 1993 ICNP paper titled Beyond layering: modularity considerations for protocol architectures.

Ken offered a set of challenges and opportunities for future networking research, such as:

- Domain-specific networking à la Ex uno pluria, a 2018 CCR editorial discussing:

- infrastructure ossification;

- lack of service innovation; and

- a fragmentation into "ManyNets" that could re-create a service-infrastructure innovation cycle.

- Incentives and "money flow"

- Can we escape from the advertising-driven Internet app ecosystem? Should we?

- Wide-area multicast (many-many) service

- Building block for building distributed applications?

- Inter-AS trust relationships

- Ossification of the Inter-AS interface – cannot be solved by a protocol!

- Impact ⇐ Applications ⇐ Business opportunities ($)

- What user problem cannot be solved today?

- "The core challenge of CS ... is a conceptual one, viz., what (abstract) mechanisms we can conceive without getting lost in the complexities of our own making." - Dijkstra

For his vision for networking in 30 years, Ken suggested that:

- IP addresses will still be in use

- but visible only at interfaces between different owners' infrastructures

- Network infrastructure might consist of access ASes + separate core networks operated by the "Big Five".

- Users might communicate via direct brain interfaces with AI systems.

Dirk Kutscher: Principled Approach to Network Programmability

I offered the perspective of introducing a principled approach to programmability that could provide better programmability (for humans and AI), based on more powerful network abstractions.

Previous work in SDN with protocols such as OpenFlow and dataplane programming languages such as P4 have only scratched the surface of what could be possible. OpenFlow was a great first idea, but it was fundamentally constrained by the IP and Ethernet-based abstractions that were built into it. It can be used for programming some applications in that domain, such as firewalls, virtual networking etc., but the idea of continuous innovation has not really materialized.

Similarly, P4 was advertized as an enabler for new levels of dataplane programmability, but even simple systems such as NetCache have to go to quite some extend to achieve minimal functionality for a proof-of-concept. Another P4 problem that is often reported is the hardware heterogeneity so that universal programmability is not really possible. In my opinion, this raises some questions with respect to applicability of current dataplane programming for in-network computing. A good example of a more productive application of P4 is the recent SIGCOMM paper on NetClone that describes as fast, scalable, and dynamic request cloning for microsecond-Scale RPCs. Here P4 is used as an accelerator for programming relatively simple functionality (protocol parsing, forwarding).

This may not be enough for future universal programmability though. During the panel discussion, I drew an analogy to computer programming language. We are not seeing the first programming language and IDEs that are designed from the ground up for better AI. What would that mean for network programmability? What abstractions and APIs would we need?

In my opinion, we would have to take a step back and think about the intended functionality and the required observability for future (automated) network programmability that is really protocol-independent. This would then entail more work on:

- the fundamental forwarding service (informed by hardware constraints);

- the telemetry approach;

- suitable protocol semantics;

- APIs for applications and management; and

- new network emulation & debugging approach (a long the lines of "network digital twin" concepts).

Overall, I am expecting new exiciting research in the direction of principled approaches to network programmability.

Jim Kurose: Open Research Infrastructures and Softwarization

Jim reminded us that the key reason Internet research flourished was the availability of open infrastructure with no incumbent providers initially. The infrastructure was owned by researchers, labs, and universities and allowed for a lot of experimentation.

This open infrastructure has recently been challenged by ossification with the rise of production ISP services at scale, and the emergence of closed ISPs, cellular carriers, hyperscalers operating large portion of the network.

As an example for emerging environments that offer interesting opportunities for experiments and new developments, Jim mentioned 4G/5G private networks, i.e., licensed spectrum created closed ecosystems – but open to researchers, creating opportunities for:

- innovation in private 5G networks such as Citizens Broadband Radio Service (CBRS) that could enables innovation in open, deployed systems and a democratization of 5G+ networks and edge applications;

- testbeds, such as Platforms for Advanced Wireless Research (PAWR); and

- the integration of WiFi, 5G as link-layer edge RANs.

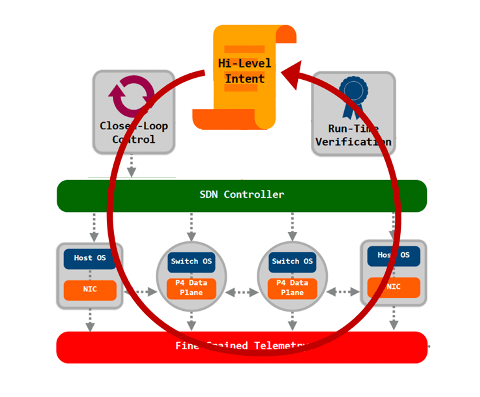

Jim was also suggesting further opportunities in softwarization and programmability, such as (formal) methods for logical correctness and configuration management, as well as programmability to add services beyond the "minimal viable service", such as closed loop automatic control and management.

Finally Jim also mentioned opportunities in emerging new networks such as LEOs, IoT and home networks.