Archive for the ‘SDN’ tag

Network Abstractions for Continuous Innovation

In a joint panel at ACM ICN-2023 and IEEE ICNP-2023 in Reykjavik, Ken Calvert, Jim Kurose, Lixia Zhang, and I discussed future network abstractions. The panel was moderated by Dave Oran. This was one of the more interesting and interactive panel sessions I have participated in, so I am providing a summary here.

Since the Internet's initial rollout ~40 years ago, not only has its global connectivity brought fundamental changes to society and daily life, but its protocol suite and implementations have also gone through many iterations of change, with SDN, NFV, and programmability among the developments of the last decade. This panel looked into the next decade of network research by asking a set of questions about which future directions would enable continued innovation.

Opportunities and Challenges for Future Network Innovations

Lixia Zhang: Rethinking Internet Architecture Fundamentals

Lixia Zhang (UCLA), quoting Einstein, said that the formulation of the problem is often more essential than the solution and pointed at the complexities of today's protocol stacks that are apparently needed to achieve desired functionality. For example, Lixia mentioned RFC 9298 on proxying UDP in HTTP, specifically on tunneling UDP to a server acting as a UDP-specific proxy over HTTP. UDP over IP was once conceived as a minimal message-oriented communication service that was intended for DNS and interactive real-time communication. Due to its push-based communication model, it can be used with minimal effort for useful but also harmful applications, including large-scale DDoS attacks. Proxying UDP over HTTP addresses this and other concerns by providing a secure channel to a server in a web context, so that the server can authorize tunnel endpoints, and so that the UDP communication is congestion controlled by the underlying transport protocol (TCP or QUIC). This specification can be seen as a workaround: sending unsolicited (and unauthenticated) messages over the Internet is a major problem in today's Internet. There is no general approach for authenticating such messages and no concept for trust in peer identities. Instead of analyzing the root cause of such problems, the Internet communities (and the dominant players in that space) prefer to come up with (highly inefficient) workarounds.

This problem was discussed more generally by Oliver Spatscheck of AT&T Labs in his 2013 article titled Layers of Success, where he discussed the (actually deployed) excessive layering in production networks, for example mobile communication networks, where regular Internet traffic is routinely tunneled over GTP/UDP/IP/MPLS:

The main issue with layering is that layers hide information from each other. We could see this as a benefit, because it reduces the complexities involved in adding more layers, thus reducing the cost of introducing more services. However, hiding information can lead to complex and dynamic layer interactions that hamper the end-to-end system’s reliability and are extremely difficult if not impossible to debug and operate. So, much of the savings achieved when introducing new services is being spent operating them reliably.
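To put a rough number on the layering mentioned above, the following sketch computes the per-packet header overhead when a packet is tunneled over GTP-U/UDP/IPv4 with one MPLS label. The header sizes are the usual minimum values (no IP options, no optional GTP-U fields, a single label); the inner packet sizes are illustrative assumptions, not measurements.

```python
# Minimum header sizes in bytes (assumptions: IPv4 without options,
# GTP-U without optional fields, a single MPLS label).
TUNNEL_HEADERS = {
    "GTP-U": 8,
    "UDP": 8,
    "outer IPv4": 20,
    "MPLS label": 4,
}


def tunnel_overhead(inner_packet_bytes: int) -> tuple:
    """Return (added bytes, overhead as a fraction of the bytes on the wire)."""
    added = sum(TUNNEL_HEADERS.values())
    return added, added / (inner_packet_bytes + added)


if __name__ == "__main__":
    # Illustrative inner packet sizes: a bare TCP ACK, a small and a large packet.
    for size in (40, 576, 1400):
        added, fraction = tunnel_overhead(size)
        print(f"{size:>5} B inner packet: +{added} B, {fraction:.1%} of wire bytes")
```

The byte count is the least of the costs discussed in the quote; the sketch only shows that each layer is paid for on every packet, while the operational cost of hidden layer interactions comes on top.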

According to Lixia, the excessive layering stems from more fundamental problems with today's network architecture, notably the lack of identity and trust in the core Internet protocols and the lack of functionality in the forwarding system – leading to significant problems today as exemplified by recent DDoS attacks. Quoting Einstein again, she said that we cannot solve problems by using the same kind of thinking we used when we created them, calling for a more fundamental redesign based on information-centric networking principles.

Ken Calvert: Domain-specific Networking

Ken Calvert (University of Kentucky) provided a retrospective of networking research and looked at selected papers published at the first IEEE ICNP conference in 1993. According to Ken, the dominant theme at that time was How to design, build, and analyze protocols, for example as discussed in his 1993 ICNP paper titled Beyond layering: modularity considerations for protocol architectures.

Ken offered a set of challenges and opportunities for future networking research, such as:

- Domain-specific networking à la Ex uno pluria, a 2018 CCR editorial discussing:

  - infrastructure ossification;

  - lack of service innovation; and

  - a fragmentation into "ManyNets" that could re-create a service-infrastructure innovation cycle.

- Incentives and "money flow"

  - Can we escape from the advertising-driven Internet app ecosystem? Should we?

- Wide-area multicast (many-many) service

  - Building block for building distributed applications?

- Inter-AS trust relationships

  - Ossification of the Inter-AS interface – cannot be solved by a protocol!

- Impact ⇐ Applications ⇐ Business opportunities ($)

  - What user problem cannot be solved today?

- "The core challenge of CS ... is a conceptual one, viz., what (abstract) mechanisms we can conceive without getting lost in the complexities of our own making." - Dijkstra

For his vision for networking in 30 years, Ken suggested that:

- IP addresses will still be in use

  - but visible only at interfaces between different owners' infrastructures

- Network infrastructure might consist of access ASes + separate core networks operated by the "Big Five".

- Users might communicate via direct brain interfaces with AI systems.

Dirk Kutscher: Principled Approach to Network Programmability

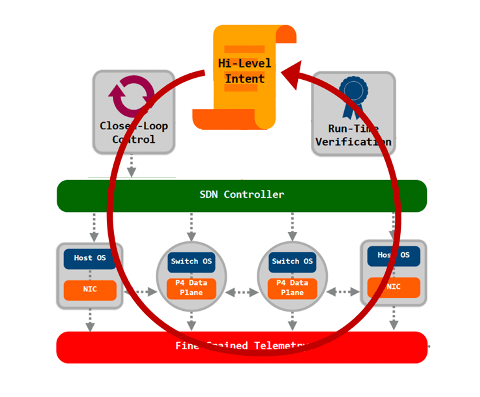

I offered the perspective of introducing a principled approach to programmability that could provide better programmability (for humans and AI), based on more powerful network abstractions.

Previous work in SDN, with protocols such as OpenFlow and dataplane programming languages such as P4, has only scratched the surface of what could be possible. OpenFlow was a great first idea, but it was fundamentally constrained by the IP and Ethernet-based abstractions that were built into it. It can be used for programming some applications in that domain, such as firewalls, virtual networking etc., but the idea of continuous innovation has not really materialized.

Similarly, P4 was advertised as an enabler for new levels of dataplane programmability, but even simple systems such as NetCache have to go to considerable lengths to achieve minimal functionality for a proof-of-concept. Another frequently reported P4 problem is hardware heterogeneity, which means that universal programmability is not really possible. In my opinion, this raises some questions with respect to the applicability of current dataplane programming for in-network computing. A good example of a more productive application of P4 is the recent SIGCOMM paper on NetClone, which describes fast, scalable, and dynamic request cloning for microsecond-scale RPCs. Here P4 is used as an accelerator for programming relatively simple functionality (protocol parsing, forwarding).

This may not be enough for future universal programmability though. During the panel discussion, I drew an analogy to computer programming languages. We are now seeing the first programming languages and IDEs that are designed from the ground up for better AI support. What would that mean for network programmability? What abstractions and APIs would we need?

In my opinion, we would have to take a step back and think about the intended functionality and the required observability for future (automated) network programmability that is really protocol-independent. This would then entail more work on:

- the fundamental forwarding service (informed by hardware constraints);

- the telemetry approach;

- suitable protocol semantics;

- APIs for applications and management; and

- new network emulation & debugging approaches (along the lines of "network digital twin" concepts).

Overall, I am expecting new exciting research in the direction of principled approaches to network programmability.

Jim Kurose: Open Research Infrastructures and Softwarization

Jim reminded us that the key reason Internet research flourished was the availability of open infrastructure with no incumbent providers initially. The infrastructure was owned by researchers, labs, and universities and allowed for a lot of experimentation.

This open infrastructure has recently been challenged by ossification with the rise of production ISP services at scale, and the emergence of closed ISPs, cellular carriers, and hyperscalers operating large portions of the network.

As an example of emerging environments that offer interesting opportunities for experiments and new developments, Jim mentioned 4G/5G private networks, i.e., licensed spectrum created closed ecosystems, but these networks are open to researchers, creating opportunities for:

- innovation in private 5G networks such as Citizens Broadband Radio Service (CBRS) that could enable innovation in open, deployed systems and a democratization of 5G+ networks and edge applications;

- testbeds, such as Platforms for Advanced Wireless Research (PAWR); and

- the integration of WiFi, 5G as link-layer edge RANs.

Jim also suggested further opportunities in softwarization and programmability, such as (formal) methods for logical correctness and configuration management, as well as programmability to add services beyond the "minimal viable service", such as closed-loop automatic control and management.

Finally, Jim also mentioned opportunities in emerging new networks such as LEOs, IoT and home networks.

5G: It’s the Network, Stupid

Current 5G network discussions often focus on providing more comprehensive and integrated orchestration and management functions in order to improve "end-to-end" manageability and programmability, derived from NGMN and similar requirements. While these are important challenges, this memo takes the perspective that in order to arrive at a more powerful network, it is important to understand the pain points and the reasons for certain design choices of today's networks. Understanding the drivers for traffic management systems, middleboxes, CDNs and other application-layer overlays should be taken as a basis for analyzing 5G use cases and their requirements. In this memo, I am making the point that many of today's business needs and the ambitious 5G use cases do call for a more powerful data forwarding plane, taking ICN as an example. Features of such a forwarding plane would include better support for heterogeneous networks (access networks and whole network deployments), multi-path communication, in-network storage and implementation of operator policies. This would help to avoid overlay silos and finally simplify network management.

Introduction

5G is the current title for much of the network system work in the telco industry these days. All companies, SDOs and industry fora are now working on 5G technologies. There seems to be a rough consensus on requirements and use cases, and first proposals seem to suggest that the design and the implementation will have something to do with SDN/NFV. In general, the assumptions are that 5G will be faster (thanks to better radio), larger (intended to cover more connected devices due to IoT, smart city, new markets), more flexible (network programmability), and converged (unification of mobile and fixed network access/core).

The implications for the actual system architecture and the way we will communicate in the future are not that clear. Some of the current proposals seem to suggest a network platform that is going to provide significant application support in the network. Other proposals seem to be targeted at trying to apply SDN/NFV to the design.

In this memo, I am making the point that the design of a new system architecture and the formulation of requirements for that should be based on a good understanding of the realities and problems of today's networks. I am claiming that we should use the tools we have now developed like NFV, SDN but also knowledge about efficient transport, content distribution, security to rethink network and system architecture.

I will start with a discussion of pain points in today's networks, before I address popular 5G use cases as proposed by NGMN. I then assess a few 5G design options and formulate a constructive proposal as a conclusion.

Notes:

- The views presented here are my own.

- This is based on a recent presentation I did on this topic. If you are interested, please find the presentation material here: "Security and Transport Performance in 5G".

Today's Networks

The commercial success of today's mobile and fixed networks is clearly based on the success of the Internet and the web. Web applications are hugely popular, especially web-based video services. It's a bit ironic that these applications are sometimes called Over-the-Top (OTT) applications (from a network operator perspective) -- clearly these are the applications -- there are essentially no other applications that are of interest to users (except for audio telephony which is still treated as a special application).

Current networks are largely leveraging Internet technologies (namely IP) -- however we had to develop a large set of additional gear to make a useful service out of it.

For example, mobility management: Based on the "seamless connectivity" service requirement, LTE employs an anchor-point-based mobility approach, implemented through tunneling (either GTP- or proxy-MIP-based). This concept lends itself to a centralized design with the usual inefficiency and scalability problems -- hence people started inventing technologies like Selected IP Traffic Offload (SIPTO) when they figured out that most users actually just want to access a web resource -- for which seamless IP connectivity is not necessarily required. Current work in the IETF DMM WG and in 3GPP is concerned with generalizing this principle towards decentralized mobility management.

Most extra work needs to be done on the performance side: TCP proxies, traffic management systems, application traffic optimizers, CDNs.

Figure 1 contrasts the theoretic architecture with a more typical implementation.

Figure 1: Mobile Network Performance Enhancing Functions (Copyright 2015 NEC)

The motivation for this extra functionality is as follows:

- TCP proxies are tools for mobile operators for tuning network performance with respect to their requirements. TCP's end-to-end congestion control does not work so well when it has to bridge heterogeneous networks with different causes for delays and packet loss. One of the reasons it does not work so well especially in mobile networks is actually the design of the system as a virtual-circuit-like service: significant buffering, variable latency, no AQM, no congestion notification. So as a result, you end up with proxies that manipulate the flow/generation of ACKs to trick senders etc. This typically does help performance -- otherwise, I hope, these boxes would not be deployed, because they also create some problems. [Honda-2011]

- Traffic management systems have a similar motivation: give operators a tool for implementing performance & capacity sharing policies. There are really different implementations of this concept, but in general, these systems typically work like this: a centralized traffic management system collects real-time and long-term load and performance-related data from base stations, routers etc. and uses that to configure policers on gateways, base stations etc. The policies may be flow-specific (e.g., to reduce the current congestion contribution of a specific flow) or application-type-specific (enforce specific treatment of a group of flows) etc. Surely, it does not sound like a terribly elegant or scalable approach -- but it is done nevertheless because IP itself does not provide sufficient traffic management features that would allow some of this to be done in-band. Another reason is that without AQM and ECN, such management-based approaches are perceived as the only option to have the network react to overload.

- Application-traffic optimizers are mainly video optimizers these days. Their job is caching, pacing, transcoding of video traffic, e.g., YouTube. There may be other purposes such as user behavior analytics, statistics etc. These systems are implemented as a transparent chain of traffic classifiers, load balancers and the actual application function. TCP/IP per se does not offer caching on the network/transport layer and explicit HTTP proxies have interoperability problems, so this motivates this implementation approach. Obviously, this will all become more difficult/expensive as more encryption is deployed, e.g., through HTTP/2.

- Network/application server cooperation. An extended variant of traffic management is the Mobile Traffic Throughput Guidance proposal. This is about sharing base station and other relevant information to application servers outside the operator domain to enable applications (video senders) to adapt faster and more proactively. Again, this is done because of a perceived lack of corresponding network/transport layer functionality.

- CDN deployment is ubiquitous these days. No major web service is deployed without it. CDNs are large-scale content distribution/management networks that provide functions such as pro-active distribution, caching, transcoding, filtering etc. There are different CDN providers, and some operators actually own or cooperate closely with CDN providers. A typical deployment is to run CDN nodes close to the operator network, e.g., in a co-location point, although there is a trend to move CDNs deeper into the network. CDNs are essentially like large-scale application-traffic optimizers. But since CDN nodes are normally not on the direct path for all user traffic, they require explicit redirection, which is done through DNS-based resolution of DNS names to operator (telco or DNS) CDN nodes. Like on-path application traffic optimizers, however, CDNs have problems with respect to encryption, i.e., they normally cannot intercept TLS communication between a user and an origin server without impersonating that server. The reason that TLS and CDN still work together today is that CDN nodes are configured with their own certificates for a certain domain (e.g., "cdn.example.com") that are linked to a valid trust chain so that users' browsers accept those certificates. While this works, it should be mentioned that this is still problematic from an e2e encryption perspective. The user actually expected an encrypted communication channel between her application and the application server on the origin server, but what she gets is merely an encrypted connection to the next CDN node.

- Transport encryption will proliferate very fast due to the integration of TLS into HTTP/2 and the "always encrypt" policy in major web browsers. It will see a significant uptake once CDNs start deploying it, i.e., also as adapters to legacy HTTP/1.1 servers. As mentioned above, it will render most of the existing traffic management and application traffic optimizers useless or at least make it more expensive to use them. This is creating quite some concerns on the mobile operator side -- which fuels current discussions on if and how the network, user application, and application servers should cooperate for exchanging at least some traffic management information in the presence of ubiquitous encryption (cf. IAB/GSMA MARNEW workshop). Unfortunately, according to some views at least, such management information cannot be (reliably) transferred in an IP or TCP header today, so there is discussion about creating overlay solutions with better support for signaling management and other meta information.
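The traffic management loop described in the list above (collect load data centrally, then configure policers on gateways and base stations) can be illustrated with a small sketch. Everything here is hypothetical: the `TokenBucketPolicer` class, the `configure_policers` function, the 80% load threshold, and the rate values are assumptions chosen for illustration, not a description of any deployed system.

```python
class TokenBucketPolicer:
    """Per-flow policer: a packet conforms if enough tokens are available."""

    def __init__(self, rate_bps: float, burst_bytes: float):
        self.rate_bps = rate_bps
        self.burst_bytes = burst_bytes
        self.tokens = burst_bytes
        self.last_time = 0.0

    def allow(self, now: float, packet_bytes: int) -> bool:
        # Refill tokens for the elapsed time, capped at the burst size.
        elapsed = now - self.last_time
        self.tokens = min(self.burst_bytes, self.tokens + elapsed * self.rate_bps / 8)
        self.last_time = now
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False  # a real policer would drop or remark the packet


def configure_policers(cell_load, flows_by_cell,
                       normal_bps=8e6, reduced_bps=2e6, threshold=0.8):
    """Hypothetical central policy: throttle flows in cells above the load threshold."""
    policers = {}
    for cell, flows in flows_by_cell.items():
        rate = reduced_bps if cell_load.get(cell, 0.0) > threshold else normal_bps
        for flow in flows:
            policers[flow] = TokenBucketPolicer(rate_bps=rate, burst_bytes=3000)
    return policers


if __name__ == "__main__":
    load = {"cell-A": 0.95, "cell-B": 0.40}  # as reported by base stations
    flows = {"cell-A": ["flow-1"], "cell-B": ["flow-2"]}
    for name, policer in configure_policers(load, flows).items():
        print(name, int(policer.rate_bps), "bit/s")
```

Note that the controller needs to identify flows and their cells to do this, which is exactly the kind of visibility that ubiquitous transport encryption and the out-of-band control loop make expensive.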

Summarizing, it is not surprising that we need a significant amount of gear in today's network to make them work and perform well: IP forwarding concepts and the whole network architecture were not designed for this scale of commercial deployment, for specific business needs and performance requirements.

Unfortunately, we had to hack the system to some extent to get this functionality integrated: localized congestion control loops require transparent (and brittle) TCP proxies. The lack of in-network visibility of imminent congestion on multiple bottlenecks made us resort to management-based approaches, and the lack of network/transport caching as well as the lack of policy-based request forwarding gave us CDNs. I did not mention much about problems, but the lack of true end-to-end security in the presence of connection-based security and CDNs is certainly a big one. The fact that CDNs and DNS-based cache selection are essentially only an overlay over the network shows when we try to do multipath communication in a CDN network. I could go on.
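As a rough illustration of why DNS-based cache selection is an overlay decision rather than a network function, the following sketch mimics a CDN's name server choosing a cache node from the address of the querying resolver. The prefixes, node names, and the `select_cdn_node` function are made up for this example; real CDNs use far richer inputs such as load and measurement data.

```python
import ipaddress

# Hypothetical mapping from resolver prefixes to nearby CDN nodes
# (documentation address ranges, invented node names).
NODE_BY_PREFIX = {
    ipaddress.ip_network("192.0.2.0/24"): "cache-1.cdn.example.com",
    ipaddress.ip_network("198.51.100.0/24"): "cache-2.cdn.example.com",
}
DEFAULT_NODE = "origin.example.com"


def select_cdn_node(resolver_ip: str) -> str:
    """Pick a cache node based only on where the DNS query came from."""
    address = ipaddress.ip_address(resolver_ip)
    for prefix, node in NODE_BY_PREFIX.items():
        if address in prefix:
            return node
    return DEFAULT_NODE


if __name__ == "__main__":
    for resolver in ("192.0.2.10", "198.51.100.7", "203.0.113.5"):
        print(resolver, "->", select_cdn_node(resolver))
```

The selection happens once per name resolution and sees only the resolver, not the paths a multihomed client could actually use, which is one way to see why multipath communication and this kind of cache selection do not combine well.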

These things are really normal when systems grow over time and people learn what is needed, what did work well, what did not work so well etc. At some point, you have learned enough that you can build a new system.

5G Use Cases and Requirements

The mobile operator industry has been trying to approach the 5G topic by formulating the following use cases in the NGMN 5G White Paper:

- Broadband access in dense areas ("Pervasive Video")

- Broadband access everywhere ("50+ Mbps Everywhere")

- Higher User mobility ("High-Speed Train")

- Massive Internet of Things ("Sensor Networks")

- Extreme real-time communications ("Tactile Internet")

- Lifeline communications ("Natural Disaster")

- Ultra-reliable communications ("E-Health Services")

- Broadcast-like services ("Broadcast Services")

The NGMN White Paper does not claim this list to be exhaustive. I would add Affordable Access as another use case, i.e., along the lines of what is discussed in the Global Access to the Internet for All (GAIA) community.

Also, what is not explicitly mentioned is industry networks (also known as Industry-4.0 in some communities), i.e., the concept to 1) use Internet and virtual networking technology and platforms for factory networks and the like, and 2) to interconnect industry sites. Obviously for both cases, the challenge would be guaranteeing upper latency bounds, reliability -- when running over a multiplexed network infrastructure.

Finally, I would like to add The next use case to the list, i.e., I would like to emphasize the need to keep the network open for future innovations that cannot be planned or imagined today. This has to do with permissionless innovation, avoiding in-network silos, creating a powerful general-purpose platform.

Everyone has their own interpretation when it comes to deriving requirements, but in my view the following can be inferred:

- 5G access will be much more heterogeneous with respect to link layer properties, bandwidth, latency, availability. For example, extremely high frequency communication such as mmWave communication is sometimes mentioned. This would offer super-high throughput and low latency, however only in very small cells. It has interesting challenges, for example, ramping up sending rates in a TCP session with peers on the Internet or managing connectivity for mobile users. Then there are very constrained networks in IoT scenarios, or cheap but low-bandwidth radios in GAIA scenarios. Finding a good network abstraction for all these different kinds of networks seems to be an interesting challenge.

- Use cases such as broadband access everywhere and tactile Internet require a super low latency -- especially the latter would not tolerate full path delay, so would need some local communication possibilities (e.g., through caching or edge computing).

- Related to the increased heterogeneity, I also predict that mobile devices would have more simultaneous access options, i.e., they'd be able to select interfaces or decide how to use them in parallel depending on performance requirements and cost constraints.

- Lifeline communication e.g., in disaster scenarios would call for a network that is able to provide useful services in the presence of fragmentation, loss of core network connectivity etc. The GreenICN project has investigated this intensively. Clearly, centralized control and gateways would not lend themselves to such scenarios.

5G Design Options

In the current design discussions I am aware of, there are a few ideas that come up frequently:

- Data/control plane split through SDN: this is essentially the idea to design switch capabilities and a programmable interface for enabling controllers to program GTP processing behavior. It's a straightforward idea for generalizing PDN-GW platforms, but it's clearly orthogonal to the requirements listed above.

- Simplified mobile core: Accepting the fact that not all mobile applications would need perfect mobility management and seamless connectivity, one idea is to simplify the architecture in a way that it provides a layered service stack, i.e., with a minimal baseline layer that is less complicated and less costly to operate. This could actually help with performance improvement goals.

- Sliced network architecture: Potentially based on the simplified mobile network idea, there are also proposals to apply virtualization to the mobile network and to offer separate slices. There are two variants to this: Multitenancy for MVNOs and Quality of Service Slicing. Multitenancy for MVNOs is relatively straightforward and is essentially about allowing MVNOs deeper access to a physical network operator's network through virtualizing most core and access network functions, including base stations.

- Quality of Service Slicing: another view on slicing is to offer individual Quality-of-Service slices (like the not so frequently used QCI classes in UMTS and LTE). For example, there would be the best-effort slice, the interactive multi-media slice, the IoT slice etc. It's really like mapping traditional QoS to virtual network concepts -- with similar problems: how would an operator know which slice configurations will be needed in the future? How would an application or a user select slices? How would such a system correspond to network neutrality requirements -- how would it maintain the permission-less innovation feature of the Internet?

- (Deep) In-network caching and computing: For use cases such as "Tactile Internet", but also for more profane applications such as IoT gateways and caching in the access network, there are many ideas for moving such functions deeper into the network. Industry initiatives such as Mobile Edge Computing are pursuing this in a limited fashion today. Technically, this would be about managing IaaS and about shifting function containers to the right place in the network. More future-looking proposals are assuming arbitrary application layer compute functions in arbitrary places in the network. There are different motivations by different players: Network operators see this as an opportunity to "create value" for their networks, i.e., offering platforms that can host such functions. CDN providers see this as an opportunity to extend their platform, both in terms of reach as well as functionality (Akamai). If you extend a CDN massively you could effectively run an overlay multicast distribution network. Again, the shortcomings of the underlying network and transport layer are motivating factors for doing this as an overlay.

- Network service programmability and orchestration: Extending the in-network compute concept, you could also envision a distributed programmable platform that would offer more flexible programmability than just pushing containers to specified locations. For example, a next-generation Mobile-TV provider could operate a multicast-overlay in an operator network, with function chains for caching, transcoding etc. The distribution, run-time management etc. would then be subject to an application-independent orchestration function. This idea is also motivated by the "value creation" proposition, i.e., network operators would provide the platform and orchestration functions to application service developers/providers. (cf. SONATA project).

Silos in the Network

The last two approaches raise interesting questions as to how manageable this approach would be in the end. There are most likely many CDN providers who would want to run their functions deeper in the network. Then there are also specific applications that require some caching but would not want to use external CDN platforms, for example video-on-demand services. As a result, you could end up with a collection of silos, each with their specific overlay as depicted in figure 2.

Figure 2: Overlay Silos (Copyright 2015 NEC)

The "deep silo" approach is also motivated by the connection-based communication and security model. Because it is not really possible to share data (while maintaining security properties such as access control rights, authenticity) in the network, we tend to build silos that are centered around the model of enabling a connection to a named server.

There is a particular risk associated with the "deep silo" approach and security. Assume a large number of virtualized CDN nodes, each of those maintaining certificates and private keys for the overall CDN service. Hardening these platforms so that none of these keys would eventually leak seems to be a major challenge. In general, running services on massively distributed software functions deep in the network has risks like this -- which makes the overall approach appear questionable in my opinion.
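A back-of-the-envelope calculation shows how this risk scales with the number of nodes. Assuming, purely for illustration, that each node independently leaks its key material with the same small probability over some period, the chance that at least one of n nodes leaks is 1 - (1 - p)^n. The per-node probability used below is an arbitrary assumption, not an estimate.

```python
def prob_any_leak(per_node_probability: float, nodes: int) -> float:
    """Probability that at least one of `nodes` independent nodes leaks."""
    return 1.0 - (1.0 - per_node_probability) ** nodes


if __name__ == "__main__":
    # 0.001 is an arbitrary illustrative per-node probability.
    for n in (10, 100, 1000, 10000):
        print(f"{n:>6} nodes: {prob_any_leak(0.001, n):.1%}")
```

Since every node holds key material valid for the whole service, a single leak compromises the service as a whole, so it is the "at least one" probability that matters, and it approaches certainty as deployments grow.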

Centralized Control and Orchestration

The orchestration topic highlights a particular problem: the existing shortcomings of the network infrastructure with respect to its forwarding and self-management capabilities already require a worrying collection of management functions as explained in section "Today's Networks". Instead of empowering the network, removing the need for transparent middleboxes, overlays etc., we might be taking the need for network management to the extreme -- by adding more overlays, more application-layer functions in the network etc.

This is exemplified by misapplying the SDN paradigm towards complete "end-to-end" network control with a network management mindset. Let me explain this: SDN (OpenFlow in particular) was once created as a programmatic interface to enterprise/campus networks that would allow implementing consistent security policies (isolating nodes on a (virtualized) network). That was motivated by the fact that this is difficult to achieve with the traditional control plane and network management tool set. Also, as mentioned above, IP is really limited with respect to traffic management support, hooks for policy implementation etc.

With OpenFlow, a controller in a local domain is enabled to program forwarding and limited transformation rules into switches in a network so that they can be treated as a virtual switch. This can be done in well-controlled domains (enterprise/campus networks, data centers) and removes the need for some distributed control plane functions and protocols. Since larger parts of mobile networks run in data centers, this is also a valid technology for 5G -- as a tool to implement network control to achieve better network flexibility and policy implementation.
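The match-action idea behind this can be sketched in a few lines. This is a toy model, not the OpenFlow protocol or any controller API: the `FlowRule` and `FlowTable` classes, the field names, and the action strings are hypothetical, chosen to show how a controller-installed rule set can isolate hosts.

```python
from dataclasses import dataclass, field


@dataclass
class FlowRule:
    priority: int
    match: dict   # header field -> required value; absent fields are wildcards
    action: str   # e.g. "output:2" or "drop"


@dataclass
class FlowTable:
    rules: list = field(default_factory=list)

    def install(self, rule: FlowRule) -> None:
        """Called by the (hypothetical) controller to program the switch."""
        self.rules.append(rule)
        self.rules.sort(key=lambda r: r.priority, reverse=True)

    def lookup(self, packet: dict) -> str:
        """Return the action of the highest-priority matching rule."""
        for rule in self.rules:
            if all(packet.get(k) == v for k, v in rule.match.items()):
                return rule.action
        return "send-to-controller"  # table miss


if __name__ == "__main__":
    table = FlowTable()
    # Policy: only the web server at 10.0.0.2 is reachable; all else is dropped.
    table.install(FlowRule(10, {"ip_dst": "10.0.0.2", "tcp_dst": 80}, "output:2"))
    table.install(FlowRule(1, {}, "drop"))
    print(table.lookup({"ip_dst": "10.0.0.2", "tcp_dst": 80}))
    print(table.lookup({"ip_dst": "10.0.0.3", "tcp_dst": 22}))
```

The match fields in this sketch are IP and TCP header fields, which mirrors the point that this style of programmability stays within the existing protocol abstractions.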

What (in my opinion) does not work so well is to elevate the SDN centralized control paradigm to a mantra for network architecture by applying the centralized control concept to the Internet. For example (slightly exaggerating) creating a powerful centralized controller for controlling base station radio communication, transport network, core network, middleboxes, application servers is likely to create a complex and soon ossified system with a gigantic control overhead. Not only will you have to master the timing issues if you want to achieve fine granular control across layers, you will also have to think about domain-to-domain controller interaction ("east/west interfaces") etc. Anyone remembering "Intelligent Networks"?

Instead, it would be more productive to think about desirable forwarding plane features and proper network abstractions for that -- and then use SDN to control networks in a programmatic fashion, i.e., without fine-grained reactive control and without tying network management & orchestration to network programmability.

Way Forward

So, what to do about 5G? First of all, it is important to understand that "5G" is not going to be a sudden major fork-lift upgrade of the network. It is actually the title of an innovation effort, and we are going to see changes in phases.

- Optimizing LTE system implementation through NFV and SDN is happening right now. I would also list "Data/control plane split through SDN" in this category. I would not call this core 5G work -- it would not change the system architecture and interfaces -- but it would be useful in the sense that we improve the infrastructure and explore the potential for more fundamental architecture changes.

- Introduce modern AQM, ECN and transport protocols NOW. A lot of progress has been made in past years (experimenting with ECN, improving fair queueing and AQM), and it's about time to get these technologies deployed, especially in the presence of ubiquitous encryption, when DPI-based traffic management has less leverage. It's really important to reduce latency further and to enable applications to respond and adapt to congestion faster. One work item here is to get the interworking of IP and link layer protocols correct. In that context, it would also be useful to rethink capacity sharing and traffic management. For example, try to learn from the (experimental) IETF ConEx effort to find ways to combine performance, smarter ways of capacity sharing than traditional TCP fairness, and incentives for applications to cooperate better -- without requiring a complicated traffic management system to enforce this.

- Enable competition and innovation on the network service provider side: This may sound odd at first, but in order to move towards 5G and its anticipated use cases, including GAIA-inspired services, it would be good if it was easier to start new services, not only as virtual services on top of existing networks. In that context the FCC efforts for spectrum sharing between incumbents and new players are interesting.

- Avoid "Intelligent Networks-2.0". It may sound tempting to create super-powerful platforms for in-network services, APIs for service creation etc. to create a more valuable network. There may even be a case for certain applications, for example IoT gateways. But be careful when defining use cases and requirements without actually talking to stakeholders that are building Internet and Web services. For example, services like YouTube would benefit most from an efficient, low-latency bitpipe -- not from a network service platform. The fundamental risk is that we are building a very elaborate service platform with powerful orchestration etc. that is just too complicated and costly to use, or may impede innovation by enforcing certain communication forms -- so that application service providers would refrain from using it -- and do everything "over-the-top". Or worse, they would start their own network services. If you don't think this is possible, I recommend taking a look at Project Fi, Google's MVNO approach. BTW, this is what happened to Intelligent Networks. Their problem was not that you could not build and operate networks that way -- IN was just too inflexible for innovation, one of the factors that led to the development of SIP-based VoIP "over the top".

- Innovate on the forwarding plane. In order to address the performance requirements, especially considering increased access technology heterogeneity and more flexibility with respect to network deployment options thanks to NFV and SDN, we need a more powerful forwarding plane that enables the network to better deal with local bottlenecks, multipath communication opportunities, in-network storage for local repair, data sharing and rate adaptation. This would enable us to let the network handle many important optimizations itself -- without requiring fine-grained control from network management. It would enable us to provide such functions in an efficient, application-independent way -- without creating different silos with similar functionality that is entangled with application-specifics.

Powerful Forwarding Plane

The last point is the motivation for people to look into Information-Centric Networking (ICN) as a 5G forwarding plane. ICN is based on the notion of providing "access to named data" as the fundamental network service. Named data can be packets, Application-Data Units, chunks, or objects. Data is secured (cryptographically bound to a name and/or origin) so that it does not need connection-based security. This facilitates application-independent caching in the network and other functions that are today done in application-specific silos.
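To make the idea of data that is "cryptographically bound to a name" a bit more concrete, here is a minimal Python sketch. It assumes a deliberately simple scheme in which the name carries a SHA-256 digest of the payload; actual ICN designs may bind data to names or origins differently (for example with publisher signatures). The names `make_name`, `verify`, and `ContentStore` are made up for this illustration and do not come from any ICN implementation.

```python
import hashlib
from typing import Dict, Optional


def make_name(prefix: str, payload: bytes) -> str:
    """Build a self-certifying name: a prefix plus the SHA-256 digest of the payload."""
    return f"{prefix}/sha256={hashlib.sha256(payload).hexdigest()}"


def verify(name: str, payload: bytes) -> bool:
    """Check that the payload matches the digest embedded in the name."""
    digest = name.rsplit("sha256=", 1)[-1]
    return hashlib.sha256(payload).hexdigest() == digest


class ContentStore:
    """Hypothetical application-independent cache: it only looks at names and bytes."""

    def __init__(self) -> None:
        self._store: Dict[str, bytes] = {}

    def insert(self, name: str, payload: bytes) -> bool:
        # Refuse to cache data that is not bound to its name.
        if not verify(name, payload):
            return False
        self._store[name] = payload
        return True

    def lookup(self, name: str) -> Optional[bytes]:
        return self._store.get(name)
```

Because the check depends only on the name and the bytes, any node can cache and serve the data without a secure connection to the original source, which is the property the paragraph above relies on.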

ICN routers have better visibility of performance because they can measure interface/path performance in correlation with request names -- for every hop where this is needed. This enables a forwarding plane that is powerful enough to handle challenges such as intermittent connectivity, multiple local bottlenecks, varying path performance -- without adding too much complexity. Operators can configure different, powerful forwarding strategies on individual routers, which is the key to supporting the different 5G use cases and heterogeneous access networks.
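As a rough illustration of what such a per-router forwarding strategy could look like, the following Python sketch keeps a smoothed round-trip time per name prefix and outgoing interface and forwards on the interface that currently performs best. The class name `BestRttStrategy`, the interface labels, and the EWMA weight are assumptions made for this example; real strategies would also have to deal with loss, timeouts, and probing policies.

```python
from collections import defaultdict
from typing import Dict, List


class BestRttStrategy:
    """Pick the outgoing interface with the lowest smoothed RTT per name prefix."""

    def __init__(self, alpha: float = 0.125) -> None:
        self.alpha = alpha  # EWMA weight given to each new sample (assumed value)
        # prefix -> interface -> smoothed RTT in milliseconds
        self.srtt: Dict[str, Dict[str, float]] = defaultdict(dict)

    def record_rtt(self, prefix: str, face: str, sample_ms: float) -> None:
        """Update the smoothed RTT when data for a request under `prefix` returns."""
        old = self.srtt[prefix].get(face)
        if old is None:
            self.srtt[prefix][face] = sample_ms
        else:
            self.srtt[prefix][face] = (1 - self.alpha) * old + self.alpha * sample_ms

    def choose_face(self, prefix: str, faces: List[str]) -> str:
        """Return an unmeasured interface first, otherwise the one with the lowest RTT."""
        measured = self.srtt.get(prefix, {})
        for face in faces:
            if face not in measured:
                return face
        return min(faces, key=lambda f: measured[f])
```

The point of the sketch is that the decision is made locally, per hop and per name prefix, without a management system having to steer individual flows.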

Especially for 5G, ICN would make mobility management much easier -- in a way that it would not need the current anchor-based mobility management schemes. For example, requestor hand-over is just a matter of (re-)requesting named data on new network attachment points. ICN forwarding strategies and in-network caching would make this as seamless as today's managed mobility.

There are different specific ideas on how to make use of ICN in 5G (e.g. Cisco's). There are also other benefits, such as having a unified communication abstraction for both the mobile network part of 5G and IoT networks, that would be better discussed in a separate posting. The important notion is as follows:

- We have learned much about required functionality to make the Internet useful for diverse sets of commercial and non-commercial applications. For many of those we had to revert to application-layer overlays and elaborate network management support. With that knowledge we can now redesign the interplay of network layer, transport layer, and application as well as network management to build better networks.

- The key question to me is to find a suitable network and forwarding plane abstraction, i.e., to define the capabilities of nodes in the network and find a good function split between forwarding plane and SDN control and network management (the latter two are two different things). The general approach for simplification should be to only do things in network management that you cannot do on the network layer. ICN is just an example of how to design such a function split -- and it illustrates the benefits.

- The named data approach is a better fit to modern communication requirements. It provides object security and enables data consumption independent of the current source of the bits, which is in turn a prerequisite for in-network caching, device-to-device communication and delay-tolerant communication, all of which are deemed critical for 5G. We have moved from physical circuits to TCP connections -- it's now time to go one step further from telephony towards networked computing.

You might ask what this has to do with SDN and NFV. As mentioned above, SDN and NFV are really network implementation approaches and infrastructure operation improvements. NFV is obviously an enabler for innovation in the sense that it enables and automates the deployment of software in the network, including ICN functions. ICN could very well be implemented with SDN.

In fact, ICN may actually enable an interesting evolution of today's OpenFlow model. In SDN for IP (take OpenFlow as an example), you have to deal with the fact that endpoint identity and next-hop forwarding information are entangled in IP addresses. Consequently, SDN applications typically implement the desired forwarding behavior through header rewriting in order to interwork with existing infrastructure (and to encode additional information in packet headers). Software-Defined ICN would rather have to do with programming Forwarding Information Bases, configuring forwarding strategies and caching policies -- so a more pro-active, actual programming-like approach. IP SDN and ICN SDN could well coexist, for example in separate slices in a shared infrastructure.
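A small Python sketch may help to picture what "programming Forwarding Information Bases" could mean in such a setting. It assumes hierarchical, slash-separated names and longest-prefix matching on name components; the class `NameFib`, its methods, and the interface labels are hypothetical and only serve to illustrate the pro-active style of control, where a controller installs routes ahead of time instead of reacting to individual packets.

```python
from typing import Dict, List, Tuple


class NameFib:
    """Hypothetical name-based FIB with longest-prefix match on name components."""

    def __init__(self) -> None:
        # tuple of name components -> list of outgoing interfaces
        self._entries: Dict[Tuple[str, ...], List[str]] = {}

    @staticmethod
    def _components(name: str) -> Tuple[str, ...]:
        return tuple(c for c in name.split("/") if c)

    def add_route(self, prefix: str, faces: List[str]) -> None:
        """Called by a controller to install or replace a route pro-actively."""
        self._entries[self._components(prefix)] = list(faces)

    def lookup(self, name: str) -> List[str]:
        """Return the interfaces of the longest matching prefix, or [] if none matches."""
        comps = self._components(name)
        for length in range(len(comps), -1, -1):
            faces = self._entries.get(comps[:length])
            if faces is not None:
                return faces
        return []


# Example: a controller installs three routes; no header rewriting is involved.
fib = NameFib()
fib.add_route("/", ["default"])
fib.add_route("/video", ["cache0"])
fib.add_route("/video/live", ["edge1", "edge2"])
```

A lookup for `/video/live/seg7` would then return both edge interfaces, and it would be up to the locally configured forwarding strategy to choose between them.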

Again, the important notion for 5G is to emphasize networking capabilities and abstraction -- with a focus on performance, application-independence and openness to innovation. The question is not so much whether we should do that or not -- but rather who is going to do it. Cisco’s Paul Mankiewich, SP Mobility CTO, has expressed this as follows:

If the network operator industry fails to create an ICN-like architecture then someone like Google will and they will put it behind the SP's IP transport network.

In fact, Google has many ingredients for that already: Project Fi as a virtual bitpipe across service providers' networks, QUIC as a vehicle for redesigning transport and application layer protocols, Google CDN and the whole Google cloud as the infrastructure platform.

In that sense, it might not be too unreasonable to say that those who refuse to learn from the history of Intelligent Networks are doomed to repeat it.